Compare commits

4 Commits

00619aa0fb...50deb4ef89

| SHA1 |
|---|
| 50deb4ef89 |
| 240f1294ca |
| c92d46ed92 |
| ef34fb34fa |

91

README.md

@ -3,7 +3,7 @@ Models of Bayesian-like updating under constrained compute

This repository contains some implementations of models of Bayesian-like updating under constrained compute. The world in which these models operate is the set of sequences from the [Online Encyclopedia of Integer Sequences](https://oeis.org/), which can be downloaded from [here](https://oeis.org/wiki/JSON_Format,_Compressed_Files).

## Models

@ -23,15 +23,58 @@ As described [here](https://nunosempere.com/blog/2023/02/04/just-in-time-bayesia

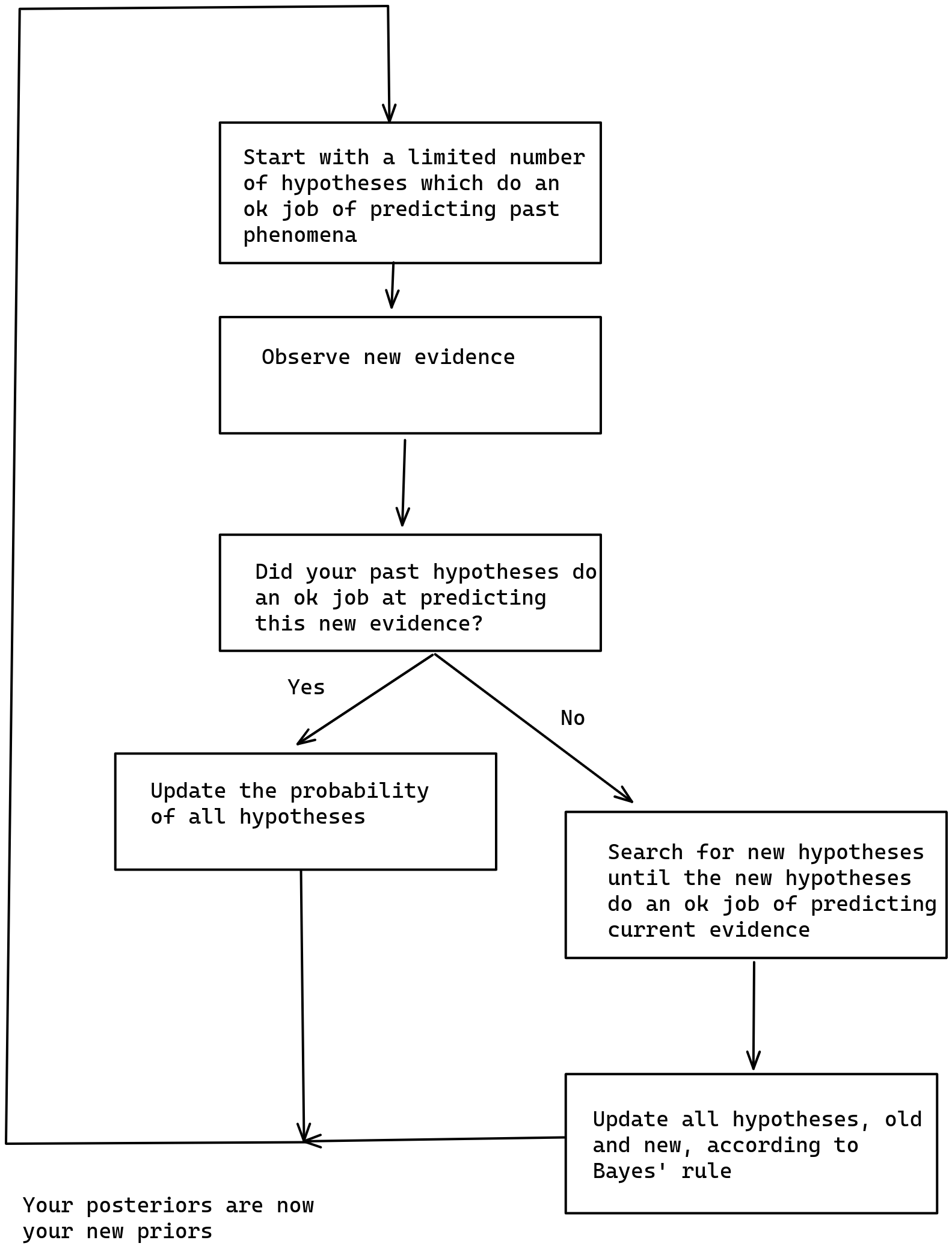

I think that the implementation here provides some value in terms of making fuzzy concepts explicit. For example, instead of "did your past hypotheses do an ok job at predicting this new evidence", I have code which looks at whether past predictions included the correct completion, and which expands the search for hypotheses if not.
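Here is a minimal sketch of that check. It is written in Python rather than the repository's nim, and it assumes each hypothesis is a plain list of integers drawn from a fixed list of sequences. The function names are hypothetical and are not the repository's API. The default `step=30000` is only chosen to mirror the sizes printed in the program's output (1000, 31000, 61000, ...).

```python
from collections import Counter
from fractions import Fraction


def concordant(hypotheses, observed):
    """Hypotheses whose first elements match everything observed so far."""
    n = len(observed)
    return [h for h in hypotheses if h[:n] == observed]


def predict(hypotheses, observed):
    """Probability of each next element, weighting concordant hypotheses equally."""
    n = len(observed)
    counts = Counter(h[n] for h in concordant(hypotheses, observed) if len(h) > n)
    total = sum(counts.values())
    return {nxt: Fraction(c, total) for nxt, c in counts.items()}


def observe(corpus, observed, new_element, size, step=30000):
    """Record a new element, widening the hypothesis set if it was not predicted.

    `size` is how many sequences of `corpus` are currently in use. If none of
    them continues `observed` with `new_element` (i.e. the past prediction gave
    it probability zero), bring in `step` more sequences and check again.
    """
    observed = observed + [new_element]
    while not concordant(corpus[:size], observed) and size < len(corpus):
        size = min(size + step, len(corpus))
    return observed, size


corpus = [[1, 2, 3, 4], [1, 2, 3, 5], [2, 4, 6, 8], [1, 2, 3, 23, 49]]
print(predict(corpus[:2], [1, 2, 3]))  # {4: Fraction(1, 2), 5: Fraction(1, 2)}
observed, size = observe(corpus, [1, 2, 3], 23, size=2, step=1)
print(size, predict(corpus[:size], observed))  # 4 {49: Fraction(1, 1)}
```

When no hypothesis is concordant, `predict` returns an empty dict. This mirrors the empty `predictions=@[]` that the program prints when it uses too few hypotheses.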

I later elaborated on this type of scheme in [A computable version of Solomonoff induction](https://nunosempere.com/blog/2023/03/01/computable-solomonoff/).

### Infrabayesianism

- Mini-infrabayesianism part I

Caveat for this section: This represents my partial understanding of some parts of infrabayesianism, and ideally I'd want someone to check it. Errors may exist.

- Mini-infrabayesianism part II:

#### Part I: Infrabayesianism in the sense of producing a deterministic policy that maximizes worst-case utility (minimizes worst-case loss)

In this example:

- environments are the possible OEIS sequences, shown one element at a time
- the actions are observing some elements, and making predictions about what the next element will be, conditional on the elements observed so far
- loss is the sum of the log losses after each observation, i.e., the sum of -log(p), where p is the probability you assigned to the element that actually came next.
- Note that at some point you arrive at the correct hypothesis, so your subsequent probabilities are 1.0 (100%) and your subsequent loss is 0 (-log(1) = 0).
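The loss above can be written down directly. Below is a minimal Python sketch. It assumes that each step's predictions are a dict from candidate next elements to probabilities, and it uses the natural log (the text does not fix a base). The helper names are hypothetical.

```python
import math


def step_loss(predictions, actual):
    """-log of the probability assigned to the element that actually came next.

    An element the predictions gave zero probability costs infinite loss.
    """
    p = predictions.get(actual, 0.0)
    return math.inf if p == 0 else -math.log(p)


def total_loss(steps):
    """Sum of step losses over a run of (predictions, actual) pairs."""
    return sum(step_loss(predictions, actual) for predictions, actual in steps)


# After seeing 1, 2, 3: two candidates at 0.5 each, and the next element is 4.
# After that only one hypothesis is left, so the next step costs -log(1) = 0.
run = [({4: 0.5, 5: 0.5}, 4), ({5: 1.0}, 5)]
print(total_loss(run))  # log(2), about 0.693
```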

Claim: If there are n sequences which start with s, and m sequences that start with s and continue with q, the action which minimizes worst-case loss is assigning a probability of m/n to completion q.

Proof: In this case, let #{xs} denote the number of OEIS sequences that start with sequence xs, and let ø denote the empty sequence, such that #{ø} is the total number of OEIS sequences. Then your loss for a sequence (e1, e2, ..., en), where en is the element by which you've identified the sequence with certainty, is -log(#{e1}/#{ø}) - log(#{e1e2}/#{e1}) - ... - log(#{e1...en}/#{e1...e(n-1)}). Because log(a) + log(b) = log(a×b), the ratios telescope and this simplifies to -log(#{e1...en}/#{ø}). But there is one unique sequence which starts with e1...en, and hence #{e1...en} = 1, so the loss is log(#{ø}) for every sequence. Therefore this procedure just assigns the same probability to each sequence, namely 1/#{ø} (one over the number of sequences).

Now suppose you have some policy that makes predictions that deviate from the above, i.e., you assign a probability other than 1/#{ø} to some sequence. Because the probabilities over all sequences sum to 1, there is then some sequence to which you are assigning a probability lower than 1/#{ø}, and its loss is higher than log(#{ø}). Hence the maximum loss is higher. Therefore the policy which minimizes worst-case loss is the one described above.
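Here is a small numerical check of the argument, in Python, on a made-up four-sequence corpus. Like the proof, it assumes that no sequence is a prefix of another. `prefix_count` plays the role of #{xs}, and the function names are hypothetical. Multiplying the m/n step probabilities along any sequence gives 1/#{ø}, so every sequence has the same loss, log(#{ø}). A policy that moves probability between sequences has a higher worst case.

```python
import math
from fractions import Fraction


def prefix_count(corpus, prefix):
    """#{prefix}: how many sequences in the corpus start with `prefix`."""
    return sum(1 for s in corpus if s[: len(prefix)] == prefix)


def sequence_probability(corpus, seq):
    """Product of the m/n step probabilities along `seq`.

    Stops once `seq` is the only concordant sequence: from then on every
    prediction has probability 1 and contributes no loss.
    """
    prob = Fraction(1)
    for k in range(1, len(seq) + 1):
        n = prefix_count(corpus, seq[: k - 1])
        m = prefix_count(corpus, seq[:k])
        prob *= Fraction(m, n)
        if m == 1:
            break
    return prob


def worst_case_loss(probabilities):
    """Maximum over sequences of -log(probability given to that sequence)."""
    return max(-math.log(float(p)) for p in probabilities)


corpus = [[1, 2, 3, 4], [1, 2, 3, 5], [1, 2, 6], [2, 4, 6]]
probs = [sequence_probability(corpus, s) for s in corpus]
print(probs)  # [Fraction(1, 4), Fraction(1, 4), Fraction(1, 4), Fraction(1, 4)]
print(worst_case_loss(probs), math.log(4))  # both about 1.386, i.e. log(#{ø})
print(worst_case_loss([0.4, 0.2, 0.2, 0.2]))  # about 1.609 = log(5), higher
```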

Note: In the infrabayesianism post, the authors look at an extension of expected values which allows us to compute the minmax. But in this case, the result of a policy in an environment is deterministic, and we can compute the minmax directly.

Note: To some extent, having the result of policies in an environment be deterministic, and also the same in all environments, defeats part of the point of infrabayesianism. So I see this step as building towards a full implementation of Infrabayesianism.

#### Part II: Infrabayesianism in the additional sense of having hypotheses only over parts of the environment, without representing the whole environment

Use the same scheme as in Part I, but this time the environment is two OEIS sequences interleaved together.

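Some quick math: if one sequence represented as an ASCII string takes 4 kilobytes (obtained with `du -sh data/line`), and the whole of OEIS takes 70MB, then storing all possibilities for two OEIS sequences interleaved together takes about 70MB/4K * 70MB, or over 1 TB. (`du` reports allocated disk blocks, so 4K overstates the size of a single line; with the 362,901 sequences the program reports, the figure is closer to 362,901 * 70MB, or about 25 TB.)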
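The estimate is simple enough to check in a few lines of Python. This sketch assumes decimal units (1K = 1,000 bytes, 1MB = 10^6 bytes), and the function name is hypothetical.

```python
MB, KB, TB = 10**6, 10**3, 10**12


def pairs_storage_bytes(num_sequences, corpus_bytes):
    """(number of sequences) x (size of the whole corpus).

    Each sequence can be interleaved with every sequence in the corpus, so
    spelling out every pairing takes roughly this many bytes (counting both
    members of each pair would roughly double it).
    """
    return num_sequences * corpus_bytes


corpus_bytes = 70 * MB
# Figures from the text: 4K per sequence, so 70MB / 4K = 17,500 sequences.
print(pairs_storage_bytes(corpus_bytes / (4 * KB), corpus_bytes) / TB)  # 1.225
# Sequence count printed by the program (362,901).
print(pairs_storage_bytes(362_901, corpus_bytes) / TB)  # about 25.4
```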

But you can imagine more mischievous environments, like: a1, a2, b1, a3, b2, c1, a4, b3, c2, d1, a5, b4, c3, d2, e1, ..., or in triangular form:

```
a1,
a2, b1,
a3, b2, c1,
a4, b3, c2, d1,
a5, b4, c3, d2, e1, ...
```

(where (ai), (bi), etc. are independently drawn OEIS sequences)
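The triangular order is easy to generate, and so is its inverse (which position came from which sequence). Here is a Python sketch with hypothetical function names. It uses labelled placeholder sequences instead of real OEIS data.

```python
def diagonal_interleave(sequences, rows):
    """Flatten the first `rows` rows of the triangle into one stream.

    Row r (1-based) is sequences[0][r-1], sequences[1][r-2], ..., sequences[r-1][0]:
    each sequence already in play contributes its next element, and a new
    sequence starts with its first element.
    """
    stream = []
    for r in range(1, rows + 1):
        for j in range(r):
            stream.append(sequences[j][r - 1 - j])
    return stream


def position_to_source(pos):
    """Map a 0-based stream position to (sequence index, element index)."""
    row = 1
    while pos >= row:  # skip whole rows; row r holds r elements
        pos -= row
        row += 1
    return pos, row - 1 - pos


labels = [[f"{name}{i}" for i in range(1, 6)] for name in "abcde"]
print(diagonal_interleave(labels, 5))
# ['a1', 'a2', 'b1', 'a3', 'b2', 'c1', 'a4', 'b3', 'c2', 'd1', 'a5', 'b4', 'c3', 'd2', 'e1']
print(position_to_source(14))  # (4, 0), i.e. e1
```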

Then you can't represent this whole environment with any finite amount of compute, and yet by only having hypotheses over different positions, you can make predictions about what the next element will be when given a list of observations.

We are not there yet, but interleaving OEIS sequences already provides an example of this sort.
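For the two-sequence case, "hypotheses over parts of the environment" can be as simple as splitting the stream by position and keeping one set of hypotheses per sequence. Below is a minimal Python sketch. It assumes the two sequences are independent and interleaved strictly as a1, b1, a2, b2, ..., and the function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction


def predict_next(corpus, observed):
    """m/n prediction for a single sequence from the sequences concordant with `observed`."""
    n = len(observed)
    counts = Counter(s[n] for s in corpus if len(s) > n and s[:n] == observed)
    total = sum(counts.values())
    return {value: Fraction(c, total) for value, c in counts.items()}


def predict_interleaved(corpus, stream):
    """Predict the next element of a1, b1, a2, b2, ... without hypotheses over pairs.

    Hypotheses over whole environments would range over all N**2 pairs (a, b).
    Here even positions are matched against the corpus as one sequence and odd
    positions as another, so only the sequence whose turn is next is consulted.
    """
    a_seen, b_seen = stream[0::2], stream[1::2]
    next_is_a = len(stream) % 2 == 0
    return predict_next(corpus, a_seen if next_is_a else b_seen)


corpus = [[1, 2, 3, 4], [1, 2, 3, 5], [2, 4, 6, 8], [1, 1, 2, 3]]
# a = 1, 2, 3, ... and b = 2, 4, 6, ... interleaved: 1, 2, 2, 4, 3, 6
print(predict_interleaved(corpus, [1, 2, 2, 4, 3, 6]))  # {4: Fraction(1, 2), 5: Fraction(1, 2)}
print(predict_interleaved(corpus, [1, 2, 2, 4, 3]))  # {6: Fraction(1, 1)}
```

The cost per prediction is one pass over N sequences rather than over N**2 pairs. That is the point of keeping hypotheses only over parts of the environment.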

#### Part III: Capture some other important aspect of infrabayesianism (e.g., non-deterministic environments)

[To do]

## Built with

@ -44,26 +87,33 @@ Why nim? Because it is nice to use and [freaking fast](https://github.com/NunoSe

### Prerequisites

Install [nim](https://nim-lang.org/install.html) and make.

```
git clone https://git.nunosempere.com/personal/compute-constrained-bayes.git
cd compute-constrained-bayes
cd src
make deps ## get dependencies
```

### Compilation

```
make fast ## alternatively, make or make build compile it with debug info
./compute_constrained_bayes
```

### Alternatively:

See [here](./outputs/aha.html) for a copy of the program's outputs.

## Contributions

Contributions are very welcome, particularly around:

- [ ] Making the code more nim-like, using nim's standard styles and libraries

- [ ] Adding another example which is not logloss minimization for infrabayesianism?

- [ ] Adding other features of infrabayesianism?

## Roadmap

@ -79,30 +129,9 @@ Contributions are very welcome, particularly around:

- [x] Add the loop of: start with some small number of sequences, and if these aren't enough, read more.
- [x] Clean-up
- [ ] Infrabayesianism
- [x] Infrabayesianism x1: Predicting interleaved sequences.
  - Yeah, actually, I think this just captures an implicit assumption of Bayesianism as actually practiced.
- [x] Infrabayesianism x2: Deterministic game of producing a fixed deterministic prediction, and then the adversary picking whatever maximizes your loss
  - I am actually not sure what the procedure is exactly for computing that loss. Do you minimize over subsequent rounds of the game, or only for the first round? Look this up.
  - [ ] Also maybe ask for help from e.g., Alex Mennen?
  - [x] Lol, minimizing loss for the case where your utility is the logloss is actually easy.

---

An implementation of Infrabayesianism over OEIS sequences.

<https://oeis.org/wiki/JSON_Format,_Compressed_Files>

Or "Just-in-Time bayesianism", where getting a new hypothesis = getting a new sequence from OEIS which has the numbers you've seen so far.

Implementing Infrabayesianism as a game over OEIS sequences. Two parts:

1. Prediction over interleaved sequences. I choose two OEIS sequences, and interleave them: a1, b1, a2, b2.

- Now, you don't have hypotheses over the whole set, but two hypotheses over the

- I could also have a chemistry-like iteration:

a1

a2 b1

a3 b2 c1

a4 b3 c2 d1

a5 b4 c3 d2 e1

.................

- And then it would just be computationally absurd to have hypotheses over the whole

2. Game where: You provide a deterministic procedure for estimating the probability of each OEIS sequence given a list of trailing examples.

1

data/line

Normal file

@ -0,0 +1 @@

A000015 ,1,2,3,4,5,7,7,8,9,11,11,13,13,16,16,16,17,19,19,23,23,23,23,25,25,27,27,29,29,31,31,32,37,37,37,37,37,41,41,41,41,43,43,47,47,47,47,49,49,53,53,53,53,59,59,59,59,59,59,61,61,64,64,64,67,67,67,71,71,71,71,73,

572

outputs/aha.html

Normal file

@ -0,0 +1,572 @@

<?xml version="1.0" encoding="UTF-8" ?>

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">

<!-- This file was created with the aha Ansi HTML Adapter. https://github.com/theZiz/aha -->

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<meta http-equiv="Content-Type" content="application/xml+xhtml; charset=UTF-8" />

<title>stdin</title>

</head>

<body>

<pre>

$ unbuffer make run | aha > aha.html

./compute_constrained_bayes --verbosity:0

## Full prediction with access to all hypotheses (~Solomonoff)

## Initial sequence: @["1", "2", "3"]

continuation_probabilities=@[

<span style="color:teal;"></span>(<span style="color:green;">"4"</span>, <span style="color:blue;">0.5031144781144781</span>),

<span style="color:teal;"></span>(<span style="color:green;">"5"</span>, <span style="color:blue;">0.1727272727272727</span>),

<span style="color:teal;"></span>(<span style="color:green;">"6"</span>, <span style="color:blue;">0.07878787878787878</span>),

<span style="color:teal;"></span>(<span style="color:green;">"2"</span>, <span style="color:blue;">0.0505050505050505</span>),

<span style="color:teal;"></span>(<span style="color:green;">"3"</span>, <span style="color:blue;">0.04882154882154882</span>),

<span style="color:teal;"></span>(<span style="color:green;">"7"</span>, <span style="color:blue;">0.02803030303030303</span>),

<span style="color:teal;"></span>(<span style="color:green;">"1"</span>, <span style="color:blue;">0.02474747474747475</span>),

<span style="color:teal;"></span>(<span style="color:green;">"8"</span>, <span style="color:blue;">0.02163299663299663</span>),

<span style="color:teal;"></span>(<span style="color:green;">"9"</span>, <span style="color:blue;">0.01060606060606061</span>),

<span style="color:teal;"></span>(<span style="color:green;">"10"</span>, <span style="color:blue;">0.009175084175084175</span>),

<span style="color:teal;"></span>(<span style="color:green;">"11"</span>, <span style="color:blue;">0.008838383838383838</span>),

<span style="color:teal;"></span>(<span style="color:green;">"12"</span>, <span style="color:blue;">0.008501683501683501</span>),

<span style="color:teal;"></span>(<span style="color:green;">"16"</span>, <span style="color:blue;">0.003787878787878788</span>),

<span style="color:teal;"></span>(<span style="color:green;">"14"</span>, <span style="color:blue;">0.003787878787878788</span>),

<span style="color:teal;"></span>(<span style="color:green;">"0"</span>, <span style="color:blue;">0.003282828282828283</span>),

<span style="color:teal;"></span>(<span style="color:green;">"15"</span>, <span style="color:blue;">0.00260942760942761</span>),

<span style="color:teal;"></span>(<span style="color:green;">"13"</span>, <span style="color:blue;">0.002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"18"</span>, <span style="color:blue;">0.001262626262626263</span>),

<span style="color:teal;"></span>(<span style="color:green;">"20"</span>, <span style="color:blue;">0.001178451178451178</span>),

<span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.001178451178451178</span>),

<span style="color:teal;"></span>(<span style="color:green;">"23"</span>, <span style="color:blue;">0.000925925925925926</span>),

<span style="color:teal;"></span>(<span style="color:green;">"24"</span>, <span style="color:blue;">0.0008417508417508417</span>),

<span style="color:teal;"></span>(<span style="color:green;">"22"</span>, <span style="color:blue;">0.0008417508417508417</span>),

<span style="color:teal;"></span>(<span style="color:green;">"41"</span>, <span style="color:blue;">0.0007575757575757576</span>),

<span style="color:teal;"></span>(<span style="color:green;">"28"</span>, <span style="color:blue;">0.0006734006734006734</span>),

<span style="color:teal;"></span>(<span style="color:green;">"19"</span>, <span style="color:blue;">0.0005892255892255892</span>),

<span style="color:teal;"></span>(<span style="color:green;">"211"</span>, <span style="color:blue;">0.0005050505050505051</span>),

<span style="color:teal;"></span>(<span style="color:green;">"29"</span>, <span style="color:blue;">0.0004208754208754209</span>),

<span style="color:teal;"></span>(<span style="color:green;">"30"</span>, <span style="color:blue;">0.0004208754208754209</span>),

<span style="color:teal;"></span>(<span style="color:green;">"26"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"35"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"25"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"4567"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-2"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-1"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-4"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"40"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"60"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"32"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"81"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"64"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"38"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"56"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"33"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"31"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"123"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"69"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"27"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"39"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"128"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"130"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"55"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"47"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"65"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"74"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"83"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"92"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"124"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"36"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"789"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"2436"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"401"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"43"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"58"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"34"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"107"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"380"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-3"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"119"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"456"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"8787"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"48"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"127"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"469"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"57"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"85"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"617"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-16"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"1080"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"72"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"95"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"101"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"661"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"37"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"2310"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"62"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"111213"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"44"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"99"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"1767"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"123543"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"173"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"21"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"42"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"144689999986441"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"54"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"512"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"371"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"52"</span>, <span style="color:blue;">8.417508417508418e-05</span>)

]

## Predictions with increasingly many hypotheses

Showing predictions with increasingly many hypotheses after seeing @["1", "2", "3", "23"]

Predictions with 10% of the hypotheses

predictions=@[]

Predictions with 20% of the hypotheses

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.5</span>), <span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.5</span>)]

Predictions with 30% of the hypotheses

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.3333333333333333</span>), <span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.3333333333333333</span>), <span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.3333333333333333</span>)]

Predictions with 40% of the hypotheses

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.25</span>), <span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.25</span>), <span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.25</span>), <span style="color:teal;"></span>(<span style="color:green;">"20880467999847912034355032910540"</span>, <span style="color:blue;">0.25</span>)]

Predictions with 50% of the hypotheses

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.25</span>), <span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.25</span>), <span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.25</span>), <span style="color:teal;"></span>(<span style="color:green;">"20880467999847912034355032910540"</span>, <span style="color:blue;">0.25</span>)]

Predictions with 60% of the hypotheses

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.2</span>), <span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.2</span>), <span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.2</span>), <span style="color:teal;"></span>(<span style="color:green;">"20880467999847912034355032910540"</span>, <span style="color:blue;">0.2</span>), <span style="color:teal;"></span>(<span style="color:green;">"59"</span>, <span style="color:blue;">0.2</span>)]

Predictions with 70% of the hypotheses

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.2</span>), <span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.2</span>), <span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.2</span>), <span style="color:teal;"></span>(<span style="color:green;">"20880467999847912034355032910540"</span>, <span style="color:blue;">0.2</span>), <span style="color:teal;"></span>(<span style="color:green;">"59"</span>, <span style="color:blue;">0.2</span>)]

Predictions with 80% of the hypotheses

predictions=@[

<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"20880467999847912034355032910540"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"59"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"29"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"19"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"5"</span>, <span style="color:blue;">0.125</span>)

]

Predictions with 90% of the hypotheses

predictions=@[

<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"20880467999847912034355032910540"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"59"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"29"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"19"</span>, <span style="color:blue;">0.125</span>),

<span style="color:teal;"></span>(<span style="color:green;">"5"</span>, <span style="color:blue;">0.125</span>)

]

Predictions with 100% of the hypotheses

predictions=@[

<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"20880467999847912034355032910540"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"59"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"29"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"19"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"5"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"31"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"11"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"43"</span>, <span style="color:blue;">0.09090909090909091</span>)

]

## Prediction with limited number of hypotheses (~JIT-Bayes)

### Prediction after seeing 3 observations: @["1", "2", "3"]

predictions=@[

<span style="color:teal;"></span>(<span style="color:green;">"4"</span>, <span style="color:blue;">0.375</span>),

<span style="color:teal;"></span>(<span style="color:green;">"5"</span>, <span style="color:blue;">0.25</span>),

<span style="color:teal;"></span>(<span style="color:green;">"6"</span>, <span style="color:blue;">0.2083333333333333</span>),

<span style="color:teal;"></span>(<span style="color:green;">"8"</span>, <span style="color:blue;">0.04166666666666666</span>),

<span style="color:teal;"></span>(<span style="color:green;">"10"</span>, <span style="color:blue;">0.04166666666666666</span>),

<span style="color:teal;"></span>(<span style="color:green;">"3"</span>, <span style="color:blue;">0.04166666666666666</span>),

<span style="color:teal;"></span>(<span style="color:green;">"7"</span>, <span style="color:blue;">0.04166666666666666</span>)

]

Correct continuation, 23 not found in set of hypotheses of size 1000/362901. Increasing size of the set of hypotheses.

Correct continuation, 23 not found in set of hypotheses of size 31000/362901. Increasing size of the set of hypotheses.

Increased number of hypotheses to 61000, and found 1 concordant hypotheses. Continuing
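The log above shows the just-in-time step. When no hypothesis in the current subset predicted the correct continuation, the subset is widened until at least one concordant hypothesis is found. The printed sizes go 1000, 31000, 61000, ... out of 362901, which suggests a step of 30000. Below is a minimal Python sketch of that loop. It assumes hypotheses are lists of string terms considered in a fixed order. `jit_expand` and its `step` parameter are hypothetical names, not the repository's implementation.

```python
def jit_expand(hypotheses, observations, correct, size, step=30_000):
    """Widen the considered prefix of `hypotheses` until one explains `correct`.

    Hypothetical helper mirroring the log messages above. Returns the
    (possibly enlarged) size and the hypotheses consistent with
    observations + [correct] within that size.
    """
    seen = observations + [correct]
    n = len(seen)

    def concordant(k):
        # Hypotheses among the first k whose first n terms match what was seen.
        return [h for h in hypotheses[:k] if h[:n] == seen]

    while not concordant(size):
        if size >= len(hypotheses):
            return size, []  # nothing in the whole library explains the data
        print(f"Correct continuation, {correct} not found in set of hypotheses "
              f"of size {size}/{len(hypotheses)}. Increasing size of the set of hypotheses.")
        size = min(size + step, len(hypotheses))
    return size, concordant(size)


toy = [["1", "2", "3", "4"], ["1", "2", "3", "5"], ["1", "2", "3", "23", "11"]]
new_size, found = jit_expand(toy, ["1", "2", "3"], "23", size=1, step=1)
```

To match the sizes in the log, you would call it as `jit_expand(oeis, ["1", "2", "3"], "23", size=1000, step=30_000)`. Here `oeis` stands for the loaded list of sequences.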

### Prediction after seeing 4 observations: @["1", "2", "3", "23"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation, 11 not found in set of hypotheses of size 61000/362901. Increasing size of the set of hypotheses.

Correct continuation, 11 not found in set of hypotheses of size 91000/362901. Increasing size of the set of hypotheses.

Correct continuation, 11 not found in set of hypotheses of size 121000/362901. Increasing size of the set of hypotheses.

Correct continuation, 11 not found in set of hypotheses of size 151000/362901. Increasing size of the set of hypotheses.

Correct continuation, 11 not found in set of hypotheses of size 181000/362901. Increasing size of the set of hypotheses.

Correct continuation, 11 not found in set of hypotheses of size 211000/362901. Increasing size of the set of hypotheses.

Correct continuation, 11 not found in set of hypotheses of size 241000/362901. Increasing size of the set of hypotheses.

Correct continuation, 11 not found in set of hypotheses of size 271000/362901. Increasing size of the set of hypotheses.

Correct continuation, 11 not found in set of hypotheses of size 301000/362901. Increasing size of the set of hypotheses.

Increased number of hypotheses to 331000, and found 1 concordant hypotheses. Continuing

### Prediction after seeing 5 observations: @["1", "2", "3", "23", "11"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"18"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation was 18

It was assigned a probability of 1.0

### Prediction after seeing 6 observations: @["1", "2", "3", "23", "11", "18"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"77"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation was 77

It was assigned a probability of 1.0

### Prediction after seeing 7 observations: @["1", "2", "3", "23", "11", "18", "77"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"46"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation was 46

It was assigned a probability of 1.0

### Prediction after seeing 8 observations: @["1", "2", "3", "23", "11", "18", "77", "46"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"84"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation was 84

It was assigned a probability of 1.0

## Mini-infra-bayesianism over environments, where your utility in an environment is just the log-loss in the predictions you make until you become certain that you are in that environment.

### Prediction after seeing 3 observations: @["1", "2", "3"]

predictions=@[

<span style="color:teal;"></span>(<span style="color:green;">"4"</span>, <span style="color:blue;">0.5031144781144781</span>),

<span style="color:teal;"></span>(<span style="color:green;">"5"</span>, <span style="color:blue;">0.1727272727272727</span>),

<span style="color:teal;"></span>(<span style="color:green;">"6"</span>, <span style="color:blue;">0.07878787878787878</span>),

<span style="color:teal;"></span>(<span style="color:green;">"2"</span>, <span style="color:blue;">0.0505050505050505</span>),

<span style="color:teal;"></span>(<span style="color:green;">"3"</span>, <span style="color:blue;">0.04882154882154882</span>),

<span style="color:teal;"></span>(<span style="color:green;">"7"</span>, <span style="color:blue;">0.02803030303030303</span>),

<span style="color:teal;"></span>(<span style="color:green;">"1"</span>, <span style="color:blue;">0.02474747474747475</span>),

<span style="color:teal;"></span>(<span style="color:green;">"8"</span>, <span style="color:blue;">0.02163299663299663</span>),

<span style="color:teal;"></span>(<span style="color:green;">"9"</span>, <span style="color:blue;">0.01060606060606061</span>),

<span style="color:teal;"></span>(<span style="color:green;">"10"</span>, <span style="color:blue;">0.009175084175084175</span>),

<span style="color:teal;"></span>(<span style="color:green;">"11"</span>, <span style="color:blue;">0.008838383838383838</span>),

<span style="color:teal;"></span>(<span style="color:green;">"12"</span>, <span style="color:blue;">0.008501683501683501</span>),

<span style="color:teal;"></span>(<span style="color:green;">"16"</span>, <span style="color:blue;">0.003787878787878788</span>),

<span style="color:teal;"></span>(<span style="color:green;">"14"</span>, <span style="color:blue;">0.003787878787878788</span>),

<span style="color:teal;"></span>(<span style="color:green;">"0"</span>, <span style="color:blue;">0.003282828282828283</span>),

<span style="color:teal;"></span>(<span style="color:green;">"15"</span>, <span style="color:blue;">0.00260942760942761</span>),

<span style="color:teal;"></span>(<span style="color:green;">"13"</span>, <span style="color:blue;">0.002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"18"</span>, <span style="color:blue;">0.001262626262626263</span>),

<span style="color:teal;"></span>(<span style="color:green;">"20"</span>, <span style="color:blue;">0.001178451178451178</span>),

<span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.001178451178451178</span>),

<span style="color:teal;"></span>(<span style="color:green;">"23"</span>, <span style="color:blue;">0.000925925925925926</span>),

<span style="color:teal;"></span>(<span style="color:green;">"24"</span>, <span style="color:blue;">0.0008417508417508417</span>),

<span style="color:teal;"></span>(<span style="color:green;">"22"</span>, <span style="color:blue;">0.0008417508417508417</span>),

<span style="color:teal;"></span>(<span style="color:green;">"41"</span>, <span style="color:blue;">0.0007575757575757576</span>),

<span style="color:teal;"></span>(<span style="color:green;">"28"</span>, <span style="color:blue;">0.0006734006734006734</span>),

<span style="color:teal;"></span>(<span style="color:green;">"19"</span>, <span style="color:blue;">0.0005892255892255892</span>),

<span style="color:teal;"></span>(<span style="color:green;">"211"</span>, <span style="color:blue;">0.0005050505050505051</span>),

<span style="color:teal;"></span>(<span style="color:green;">"29"</span>, <span style="color:blue;">0.0004208754208754209</span>),

<span style="color:teal;"></span>(<span style="color:green;">"30"</span>, <span style="color:blue;">0.0004208754208754209</span>),

<span style="color:teal;"></span>(<span style="color:green;">"26"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"35"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"25"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"4567"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-2"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-1"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-4"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"40"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"60"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"32"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"81"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"64"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"38"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"56"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"33"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"31"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"123"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"69"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"27"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"39"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"128"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"130"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"55"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"47"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"65"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"74"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"83"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"92"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"124"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"36"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"789"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"2436"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"401"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"43"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"58"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"34"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"107"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"380"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-3"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"119"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"456"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"8787"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"48"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"127"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"469"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"57"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"85"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"617"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-16"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"1080"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"72"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"95"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"101"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"661"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"37"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"2310"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"62"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"111213"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"44"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"99"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"1767"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"123543"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"173"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"21"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"42"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"144689999986441"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"54"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"512"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"371"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"52"</span>, <span style="color:blue;">8.417508417508418e-05</span>)

]

Correct continuation was 23

It was assigned a probability of 0.000925925925925926

And hence a loss of -6.984716320118265

Total loss is: -6.984716320118265
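The "loss" printed here is the natural logarithm of the probability assigned to the correct continuation. It is therefore at most 0, and values closer to 0 are better. For example, 0.000925925925925926 is 1/1080, and ln(1/1080) ≈ -6.9847, which is the value printed above. Below is a small sketch of that computation. It assumes predictions are (continuation, probability) pairs, as in the printout. `log_score` is a hypothetical helper name.

```python
import math


def log_score(predictions, correct):
    """Natural log of the probability assigned to the correct continuation.

    `predictions` is a list of (continuation, probability) pairs. A continuation
    that was not predicted at all gets probability 0, i.e. a score of -inf.
    """
    probability = dict(predictions).get(correct, 0.0)
    return math.log(probability) if probability > 0 else float("-inf")


predictions = [("4", 0.5031144781144781), ("23", 0.000925925925925926)]
print(log_score(predictions, "23"))  # about -6.9847, matching the log above
```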

### Prediction after seeing 4 observations: @["1", "2", "3", "23"]

predictions=@[

<span style="color:teal;"></span>(<span style="color:green;">"49"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"323"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"20880467999847912034355032910540"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"59"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"29"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"19"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"5"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"31"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"11"</span>, <span style="color:blue;">0.09090909090909091</span>),

<span style="color:teal;"></span>(<span style="color:green;">"43"</span>, <span style="color:blue;">0.09090909090909091</span>)

]

Correct continuation was 11

It was assigned a probability of 0.09090909090909091

And hence a loss of -2.397895272798371

Total loss is: -9.382611592916636

### Prediction after seeing 5 observations: @["1", "2", "3", "23", "11"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"18"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation was 18

It was assigned a probability of 1.0

And hence a loss of 0.0

Total loss is: -9.382611592916636

### Prediction after seeing 6 observations: @["1", "2", "3", "23", "11", "18"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"77"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation was 77

It was assigned a probability of 1.0

And hence a loss of 0.0

Total loss is: -9.382611592916636

### Prediction after seeing 7 observations: @["1", "2", "3", "23", "11", "18", "77"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"46"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation was 46

It was assigned a probability of 1.0

And hence a loss of 0.0

Total loss is: -9.382611592916636

### Prediction after seeing 8 observations: @["1", "2", "3", "23", "11", "18", "77", "46"]

predictions=@[<span style="color:teal;"></span>(<span style="color:green;">"84"</span>, <span style="color:blue;">1.0</span>)]

Correct continuation was 84

It was assigned a probability of 1.0

And hence a loss of 0.0

Total loss is: -9.382611592916636
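Once the predictor is certain (a single continuation with probability 1.0), each further correct prediction adds ln(1.0) = 0. That is why the total stays at -9.382611592916636 from the fifth observation onward. The sketch below reproduces that running total from the per-step probabilities printed in this run. The list of probabilities is copied from the output above; the loop itself is only an illustration, not the repository's code.

```python
import math

# Probability given to the correct continuation (23, 11, 18, 77, 46, 84) at each
# step of the run above, copied from the printed output.
step_probabilities = [0.000925925925925926, 0.09090909090909091, 1.0, 1.0, 1.0, 1.0]

running_total = 0.0
for seen, p in enumerate(step_probabilities, start=3):
    running_total += math.log(p)  # certain, correct predictions add log(1.0) == 0.0
    print(f"after seeing {seen} observations: total {running_total}")
```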

## Mini-infra-bayesianism over environments, where your utility in an environment is just the log-loss in the predictions you make until you become certain that you are in that environment. This time with a twist: You don't have hypotheses over the sequences you observe, but rather over their odd and even position, i.e., you think that you observe interleaved OEIS sequences, (a1, b1, a2, b2, a3, b3). See the README.md for more.
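In this variant, the observations (a1, b1, a2, b2, a3, b3) are split by position before they are matched against OEIS sequences. Below is a minimal sketch of that split. It assumes the 1-indexed odd positions hold the a-sequence. `deinterleave` and `stream_for_next` are hypothetical helpers. With the six observations below, the next one is a4, so the relevant stream is ["1", "2", "3"]. That is consistent with the predictions that follow matching the earlier ones for @["1", "2", "3"].

```python
def deinterleave(observations):
    """Split (a1, b1, a2, b2, ...) into the a-stream and the b-stream."""
    a_stream = observations[0::2]  # positions 1, 3, 5, ... -> a1, a2, a3, ...
    b_stream = observations[1::2]  # positions 2, 4, 6, ... -> b1, b2, b3, ...
    return a_stream, b_stream


def stream_for_next(observations):
    """Return the stream whose next term is the next observation."""
    a_stream, b_stream = deinterleave(observations)
    # After an even number of observations the next one is an a-term.
    return a_stream if len(observations) % 2 == 0 else b_stream


observed = ["1", "2", "2", "11", "3", "13"]
print(deinterleave(observed))     # (['1', '2', '3'], ['2', '11', '13'])
print(stream_for_next(observed))  # ['1', '2', '3']
```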

### Prediction after seeing 6 observations: @["1", "2", "2", "11", "3", "13"]

predictions=@[

<span style="color:teal;"></span>(<span style="color:green;">"4"</span>, <span style="color:blue;">0.5031144781144781</span>),

<span style="color:teal;"></span>(<span style="color:green;">"5"</span>, <span style="color:blue;">0.1727272727272727</span>),

<span style="color:teal;"></span>(<span style="color:green;">"6"</span>, <span style="color:blue;">0.07878787878787878</span>),

<span style="color:teal;"></span>(<span style="color:green;">"2"</span>, <span style="color:blue;">0.0505050505050505</span>),

<span style="color:teal;"></span>(<span style="color:green;">"3"</span>, <span style="color:blue;">0.04882154882154882</span>),

<span style="color:teal;"></span>(<span style="color:green;">"7"</span>, <span style="color:blue;">0.02803030303030303</span>),

<span style="color:teal;"></span>(<span style="color:green;">"1"</span>, <span style="color:blue;">0.02474747474747475</span>),

<span style="color:teal;"></span>(<span style="color:green;">"8"</span>, <span style="color:blue;">0.02163299663299663</span>),

<span style="color:teal;"></span>(<span style="color:green;">"9"</span>, <span style="color:blue;">0.01060606060606061</span>),

<span style="color:teal;"></span>(<span style="color:green;">"10"</span>, <span style="color:blue;">0.009175084175084175</span>),

<span style="color:teal;"></span>(<span style="color:green;">"11"</span>, <span style="color:blue;">0.008838383838383838</span>),

<span style="color:teal;"></span>(<span style="color:green;">"12"</span>, <span style="color:blue;">0.008501683501683501</span>),

<span style="color:teal;"></span>(<span style="color:green;">"16"</span>, <span style="color:blue;">0.003787878787878788</span>),

<span style="color:teal;"></span>(<span style="color:green;">"14"</span>, <span style="color:blue;">0.003787878787878788</span>),

<span style="color:teal;"></span>(<span style="color:green;">"0"</span>, <span style="color:blue;">0.003282828282828283</span>),

<span style="color:teal;"></span>(<span style="color:green;">"15"</span>, <span style="color:blue;">0.00260942760942761</span>),

<span style="color:teal;"></span>(<span style="color:green;">"13"</span>, <span style="color:blue;">0.002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"18"</span>, <span style="color:blue;">0.001262626262626263</span>),

<span style="color:teal;"></span>(<span style="color:green;">"20"</span>, <span style="color:blue;">0.001178451178451178</span>),

<span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.001178451178451178</span>),

<span style="color:teal;"></span>(<span style="color:green;">"23"</span>, <span style="color:blue;">0.000925925925925926</span>),

<span style="color:teal;"></span>(<span style="color:green;">"24"</span>, <span style="color:blue;">0.0008417508417508417</span>),

<span style="color:teal;"></span>(<span style="color:green;">"22"</span>, <span style="color:blue;">0.0008417508417508417</span>),

<span style="color:teal;"></span>(<span style="color:green;">"41"</span>, <span style="color:blue;">0.0007575757575757576</span>),

<span style="color:teal;"></span>(<span style="color:green;">"28"</span>, <span style="color:blue;">0.0006734006734006734</span>),

<span style="color:teal;"></span>(<span style="color:green;">"19"</span>, <span style="color:blue;">0.0005892255892255892</span>),

<span style="color:teal;"></span>(<span style="color:green;">"211"</span>, <span style="color:blue;">0.0005050505050505051</span>),

<span style="color:teal;"></span>(<span style="color:green;">"29"</span>, <span style="color:blue;">0.0004208754208754209</span>),

<span style="color:teal;"></span>(<span style="color:green;">"30"</span>, <span style="color:blue;">0.0004208754208754209</span>),

<span style="color:teal;"></span>(<span style="color:green;">"26"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"35"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"25"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"4567"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-2"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-1"</span>, <span style="color:blue;">0.0003367003367003367</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-4"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"40"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"60"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"32"</span>, <span style="color:blue;">0.0002525252525252525</span>),

<span style="color:teal;"></span>(<span style="color:green;">"81"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"64"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"38"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"56"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"33"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"31"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"123"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"69"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"27"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"39"</span>, <span style="color:blue;">0.0001683501683501684</span>),

<span style="color:teal;"></span>(<span style="color:green;">"128"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"130"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"55"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"47"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"65"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"74"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"83"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"92"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"124"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"36"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"789"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"2436"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"401"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"43"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"58"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"34"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"107"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"380"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-3"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"119"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"456"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"8787"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"48"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"127"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"469"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"57"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"85"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"617"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"-16"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"1080"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"72"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"95"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

<span style="color:teal;"></span>(<span style="color:green;">"101"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"661"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"37"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"2310"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"62"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"111213"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"44"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"99"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"1767"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"123543"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"173"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"21"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"42"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"144689999986441"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"54"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"512"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"371"</span>, <span style="color:blue;">8.417508417508418e-05</span>),

|

||||

<span style="color:teal;"></span>(<span style="color:green;">"52"</span>, <span style="color:blue;">8.417508417508418e-05</span>)

|

||||

]

|

||||

Correct continuation was 23
It was assigned a probability of 0.000925925925925926
And hence a loss of -6.984716320118265
Total loss is: -6.984716320118265
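The printed loss matches the natural logarithm of the probability assigned to the correct continuation: ln(0.000925925925925926) = ln(1/1080) ≈ -6.98472. The Python sketch below reproduces that arithmetic. It is not this repository's code. The `log_loss` helper, the list-of-pairs representation, and the choice to return negative infinity when the correct continuation is missing are all illustrative assumptions.

```python
import math
from typing import List, Tuple

# Hypothetical representation mirroring the printed output:
# a list of (continuation, probability) pairs.
Predictions = List[Tuple[str, float]]


def log_loss(predictions: Predictions, correct: str) -> float:
    """Return the natural log of the probability given to `correct`.

    Assumption: a continuation absent from `predictions` scores -inf.
    """
    for continuation, probability in predictions:
        if continuation == correct:
            return math.log(probability)
    return -math.inf


# Hypothetical two-entry excerpt; 0.000925925925925926 (= 1/1080) is the
# probability reported above for the correct continuation "23".
excerpt: Predictions = [("47", 8.417508417508418e-05), ("23", 0.000925925925925926)]
step_loss = log_loss(excerpt, "23")
print(step_loss)  # approximately -6.98472
```

Every probability is at most 1, so each per-step loss is at most 0. Values closer to 0 indicate that the correct continuation received more probability.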

### Prediction after seeing 7 observations: @["1", "2", "2", "11", "3", "13", "23"]
predictions=@[
<span style="color:teal;"></span>(<span style="color:green;">"17"</span>, <span style="color:blue;">0.4035087719298245</span>),
<span style="color:teal;"></span>(<span style="color:green;">"19"</span>, <span style="color:blue;">0.1228070175438596</span>),
<span style="color:teal;"></span>(<span style="color:green;">"23"</span>, <span style="color:blue;">0.1228070175438596</span>),
<span style="color:teal;"></span>(<span style="color:green;">"29"</span>, <span style="color:blue;">0.07017543859649122</span>),
<span style="color:teal;"></span>(<span style="color:green;">"31"</span>, <span style="color:blue;">0.07017543859649122</span>),
<span style="color:teal;"></span>(<span style="color:green;">"24"</span>, <span style="color:blue;">0.03508771929824561</span>),
<span style="color:teal;"></span>(<span style="color:green;">"7"</span>, <span style="color:blue;">0.03508771929824561</span>),