savepoint: accuracy twitter + make yearly indexes accurate.

parent dd9538f970
commit 7eb7a65824
@@ -1,7 +1,7 @@
 ## In 2019...
 
-- [EA Mental Health Survey: Results and Analysis.](https://nunosempere.com/2019/06/13/ea-mental-health)
-- [Why do social movements fail: Two concrete examples.](https://nunosempere.com/2019/10/04/social-movements)
-- [Shapley values: Better than counterfactuals](https://nunosempere.com/2019/10/10/shapley-values-better-than-counterfactuals)
-- [[Part 1] Amplifying generalist research via forecasting – models of impact and challenges](https://nunosempere.com/2019/12/19/amplifying-general-research-via-forecasting-i)
-- [[Part 2] Amplifying generalist research via forecasting – results from a preliminary exploration](https://nunosempere.com/2019/12/20/amplifying-general-research-via-forecasting-ii)
+- [EA Mental Health Survey: Results and Analysis.](https://nunosempere.com/blog/2019/06/13/ea-mental-health)
+- [Why do social movements fail: Two concrete examples.](https://nunosempere.com/blog/2019/10/04/social-movements)
+- [Shapley values: Better than counterfactuals](https://nunosempere.com/blog/2019/10/10/shapley-values-better-than-counterfactuals)
+- [[Part 1] Amplifying generalist research via forecasting – models of impact and challenges](https://nunosempere.com/blog/2019/12/19/amplifying-general-research-via-forecasting-i)
+- [[Part 2] Amplifying generalist research via forecasting – results from a preliminary exploration](https://nunosempere.com/blog/2019/12/20/amplifying-general-research-via-forecasting-ii)

@@ -1,20 +1,20 @@
 ## In 2020...
 
-- [A review of two free online MIT Global Poverty courses](https://nunosempere.com/2020/01/15/mit-edx-review)
-- [A review of two books on survey-making](https://nunosempere.com/2020/03/01/survey-making)
-- [Shapley Values II: Philantropic Coordination Theory & other miscellanea.](https://nunosempere.com/2020/03/10/shapley-values-ii)
-- [New Cause Proposal: International Supply Chain Accountability](https://nunosempere.com/2020/04/01/international-supply-chain-accountability)
-- [Forecasting Newsletter: April 2020](https://nunosempere.com/2020/04/30/forecasting-newsletter-2020-04)
-- [Forecasting Newsletter: May 2020.](https://nunosempere.com/2020/05/31/forecasting-newsletter-2020-05)
-- [Forecasting Newsletter: June 2020.](https://nunosempere.com/2020/07/01/forecasting-newsletter-2020-06)
-- [Forecasting Newsletter: July 2020.](https://nunosempere.com/2020/08/01/forecasting-newsletter-2020-07)
-- [Forecasting Newsletter: August 2020. ](https://nunosempere.com/2020/09/01/forecasting-newsletter-august-2020)
-- [Forecasting Newsletter: September 2020. ](https://nunosempere.com/2020/10/01/forecasting-newsletter-september-2020)
-- [Forecasting Newsletter: October 2020.](https://nunosempere.com/2020/11/01/forecasting-newsletter-october-2020)
-- [Incentive Problems With Current Forecasting Competitions.](https://nunosempere.com/2020/11/10/incentive-problems-with-current-forecasting-competitions)
-- [Announcing the Forecasting Innovation Prize](https://nunosempere.com/2020/11/15/announcing-the-forecasting-innovation-prize)
-- [Predicting the Value of Small Altruistic Projects: A Proof of Concept Experiment.](https://nunosempere.com/2020/11/22/predicting-the-value-of-small-altruistic-projects-a-proof-of)
-- [An experiment to evaluate the value of one researcher's work](https://nunosempere.com/2020/12/01/an-experiment-to-evaluate-the-value-of-one-researcher-s-work)
-- [Forecasting Newsletter: November 2020.](https://nunosempere.com/2020/12/01/forecasting-newsletter-november-2020)
-- [What are good rubrics or rubric elements to evaluate and predict impact?](https://nunosempere.com/2020/12/03/what-are-good-rubrics-or-rubric-elements-to-evaluate-and)
-- [Big List of Cause Candidates](https://nunosempere.com/2020/12/25/big-list-of-cause-candidates)
+- [A review of two free online MIT Global Poverty courses](https://nunosempere.com/blog/2020/01/15/mit-edx-review)
+- [A review of two books on survey-making](https://nunosempere.com/blog/2020/03/01/survey-making)
+- [Shapley Values II: Philantropic Coordination Theory & other miscellanea.](https://nunosempere.com/blog/2020/03/10/shapley-values-ii)
+- [New Cause Proposal: International Supply Chain Accountability](https://nunosempere.com/blog/2020/04/01/international-supply-chain-accountability)
+- [Forecasting Newsletter: April 2020](https://nunosempere.com/blog/2020/04/30/forecasting-newsletter-2020-04)
+- [Forecasting Newsletter: May 2020.](https://nunosempere.com/blog/2020/05/31/forecasting-newsletter-2020-05)
+- [Forecasting Newsletter: June 2020.](https://nunosempere.com/blog/2020/07/01/forecasting-newsletter-2020-06)
+- [Forecasting Newsletter: July 2020.](https://nunosempere.com/blog/2020/08/01/forecasting-newsletter-2020-07)
+- [Forecasting Newsletter: August 2020. ](https://nunosempere.com/blog/2020/09/01/forecasting-newsletter-august-2020)
+- [Forecasting Newsletter: September 2020. ](https://nunosempere.com/blog/2020/10/01/forecasting-newsletter-september-2020)
+- [Forecasting Newsletter: October 2020.](https://nunosempere.com/blog/2020/11/01/forecasting-newsletter-october-2020)
+- [Incentive Problems With Current Forecasting Competitions.](https://nunosempere.com/blog/2020/11/10/incentive-problems-with-current-forecasting-competitions)
+- [Announcing the Forecasting Innovation Prize](https://nunosempere.com/blog/2020/11/15/announcing-the-forecasting-innovation-prize)
+- [Predicting the Value of Small Altruistic Projects: A Proof of Concept Experiment.](https://nunosempere.com/blog/2020/11/22/predicting-the-value-of-small-altruistic-projects-a-proof-of)
+- [An experiment to evaluate the value of one researcher's work](https://nunosempere.com/blog/2020/12/01/an-experiment-to-evaluate-the-value-of-one-researcher-s-work)
+- [Forecasting Newsletter: November 2020.](https://nunosempere.com/blog/2020/12/01/forecasting-newsletter-november-2020)
+- [What are good rubrics or rubric elements to evaluate and predict impact?](https://nunosempere.com/blog/2020/12/03/what-are-good-rubrics-or-rubric-elements-to-evaluate-and)
+- [Big List of Cause Candidates](https://nunosempere.com/blog/2020/12/25/big-list-of-cause-candidates)

@@ -1,30 +1,30 @@
 ## In 2021...
 
-- [Forecasting Newsletter: December 2020](https://nunosempere.com/2021/01/01/forecasting-newsletter-december-2020)
-- [2020: Forecasting in Review](https://nunosempere.com/2021/01/10/2020-forecasting-in-review)
-- [A Funnel for Cause Candidates](https://nunosempere.com/2021/01/13/a-funnel-for-cause-candidates)
-- [Forecasting Newsletter: January 2021](https://nunosempere.com/2021/02/01/forecasting-newsletter-january-2021)
-- [Forecasting Prize Results](https://nunosempere.com/2021/02/19/forecasting-prize-results)
-- [Forecasting Newsletter: February 2021](https://nunosempere.com/2021/03/01/forecasting-newsletter-february-2021)
-- [Introducing Metaforecast: A Forecast Aggregator and Search Tool](https://nunosempere.com/2021/03/07/introducing-metaforecast-a-forecast-aggregator-and-search)
-- [Relative Impact of the First 10 EA Forum Prize Winners](https://nunosempere.com/2021/03/16/relative-impact-of-the-first-10-ea-forum-prize-winners)
-- [Forecasting Newsletter: March 2021](https://nunosempere.com/2021/04/01/forecasting-newsletter-march-2021)
-- [Forecasting Newsletter: April 2021](https://nunosempere.com/2021/05/01/forecasting-newsletter-april-2021)
-- [Forecasting Newsletter: May 2021](https://nunosempere.com/2021/06/01/forecasting-newsletter-may-2021)
-- [2018-2019 Long-Term Future Fund Grantees: How did they do?](https://nunosempere.com/2021/06/16/2018-2019-long-term-future-fund-grantees-how-did-they-do)
-- [What should the norms around privacy and evaluation in the EA community be?](https://nunosempere.com/2021/06/16/what-should-the-norms-around-privacy-and-evaluation-in-the)
-- [Shallow evaluations of longtermist organizations](https://nunosempere.com/2021/06/24/shallow-evaluations-of-longtermist-organizations)
-- [Forecasting Newsletter: June 2021](https://nunosempere.com/2021/07/01/forecasting-newsletter-june-2021)
-- [Forecasting Newsletter: July 2021](https://nunosempere.com/2021/08/01/forecasting-newsletter-july-2021)
-- [Forecasting Newsletter: August 2021](https://nunosempere.com/2021/09/01/forecasting-newsletter-august-2021)
-- [Frank Feedback Given To Very Junior Researchers](https://nunosempere.com/2021/09/01/frank-feedback-given-to-very-junior-researchers)
-- [Building Blocks of Utility Maximization](https://nunosempere.com/2021/09/20/building-blocks-of-utility-maximization)
-- [Forecasting Newsletter: September 2021.](https://nunosempere.com/2021/10/01/forecasting-newsletter-september-2021)
-- [An estimate of the value of Metaculus questions](https://nunosempere.com/2021/10/22/an-estimate-of-the-value-of-metaculus-questions)
-- [Forecasting Newsletter: October 2021.](https://nunosempere.com/2021/11/02/forecasting-newsletter-october-2021)
-- [A Model of Patient Spending and Movement Building](https://nunosempere.com/2021/11/08/a-model-of-patient-spending-and-movement-building)
-- [Simple comparison polling to create utility functions](https://nunosempere.com/2021/11/15/simple-comparison-polling-to-create-utility-functions)
-- [Pathways to impact for forecasting and evaluation](https://nunosempere.com/2021/11/25/pathways-to-impact-for-forecasting-and-evaluation)
-- [Forecasting Newsletter: November 2021](https://nunosempere.com/2021/12/02/forecasting-newsletter-november-2021)
-- [External Evaluation of the EA Wiki](https://nunosempere.com/2021/12/13/external-evaluation-of-the-ea-wiki)
-- [Prediction Markets in The Corporate Setting](https://nunosempere.com/2021/12/31/prediction-markets-in-the-corporate-setting)
+- [Forecasting Newsletter: December 2020](https://nunosempere.com/blog/2021/01/01/forecasting-newsletter-december-2020)
+- [2020: Forecasting in Review](https://nunosempere.com/blog/2021/01/10/2020-forecasting-in-review)
+- [A Funnel for Cause Candidates](https://nunosempere.com/blog/2021/01/13/a-funnel-for-cause-candidates)
+- [Forecasting Newsletter: January 2021](https://nunosempere.com/blog/2021/02/01/forecasting-newsletter-january-2021)
+- [Forecasting Prize Results](https://nunosempere.com/blog/2021/02/19/forecasting-prize-results)
+- [Forecasting Newsletter: February 2021](https://nunosempere.com/blog/2021/03/01/forecasting-newsletter-february-2021)
+- [Introducing Metaforecast: A Forecast Aggregator and Search Tool](https://nunosempere.com/blog/2021/03/07/introducing-metaforecast-a-forecast-aggregator-and-search)
+- [Relative Impact of the First 10 EA Forum Prize Winners](https://nunosempere.com/blog/2021/03/16/relative-impact-of-the-first-10-ea-forum-prize-winners)
+- [Forecasting Newsletter: March 2021](https://nunosempere.com/blog/2021/04/01/forecasting-newsletter-march-2021)
+- [Forecasting Newsletter: April 2021](https://nunosempere.com/blog/2021/05/01/forecasting-newsletter-april-2021)
+- [Forecasting Newsletter: May 2021](https://nunosempere.com/blog/2021/06/01/forecasting-newsletter-may-2021)
+- [2018-2019 Long-Term Future Fund Grantees: How did they do?](https://nunosempere.com/blog/2021/06/16/2018-2019-long-term-future-fund-grantees-how-did-they-do)
+- [What should the norms around privacy and evaluation in the EA community be?](https://nunosempere.com/blog/2021/06/16/what-should-the-norms-around-privacy-and-evaluation-in-the)
+- [Shallow evaluations of longtermist organizations](https://nunosempere.com/blog/2021/06/24/shallow-evaluations-of-longtermist-organizations)
+- [Forecasting Newsletter: June 2021](https://nunosempere.com/blog/2021/07/01/forecasting-newsletter-june-2021)
+- [Forecasting Newsletter: July 2021](https://nunosempere.com/blog/2021/08/01/forecasting-newsletter-july-2021)
+- [Forecasting Newsletter: August 2021](https://nunosempere.com/blog/2021/09/01/forecasting-newsletter-august-2021)
+- [Frank Feedback Given To Very Junior Researchers](https://nunosempere.com/blog/2021/09/01/frank-feedback-given-to-very-junior-researchers)
+- [Building Blocks of Utility Maximization](https://nunosempere.com/blog/2021/09/20/building-blocks-of-utility-maximization)
+- [Forecasting Newsletter: September 2021.](https://nunosempere.com/blog/2021/10/01/forecasting-newsletter-september-2021)
+- [An estimate of the value of Metaculus questions](https://nunosempere.com/blog/2021/10/22/an-estimate-of-the-value-of-metaculus-questions)
+- [Forecasting Newsletter: October 2021.](https://nunosempere.com/blog/2021/11/02/forecasting-newsletter-october-2021)
+- [A Model of Patient Spending and Movement Building](https://nunosempere.com/blog/2021/11/08/a-model-of-patient-spending-and-movement-building)
+- [Simple comparison polling to create utility functions](https://nunosempere.com/blog/2021/11/15/simple-comparison-polling-to-create-utility-functions)
+- [Pathways to impact for forecasting and evaluation](https://nunosempere.com/blog/2021/11/25/pathways-to-impact-for-forecasting-and-evaluation)
+- [Forecasting Newsletter: November 2021](https://nunosempere.com/blog/2021/12/02/forecasting-newsletter-november-2021)
+- [External Evaluation of the EA Wiki](https://nunosempere.com/blog/2021/12/13/external-evaluation-of-the-ea-wiki)
+- [Prediction Markets in The Corporate Setting](https://nunosempere.com/blog/2021/12/31/prediction-markets-in-the-corporate-setting)

@@ -1,59 +1,59 @@
 ## In 2022...
 
-- [Forecasting Newsletter: December 2021](https://nunosempere.com/2022/01/10/forecasting-newsletter-december-2021)
-- [Forecasting Newsletter: Looking back at 2021.](https://nunosempere.com/2022/01/27/forecasting-newsletter-looking-back-at-2021)
-- [Forecasting Newsletter: January 2022](https://nunosempere.com/2022/02/03/forecasting-newsletter-january-2022)
-- [Splitting the timeline as an extinction risk intervention](https://nunosempere.com/2022/02/06/splitting-the-timeline-as-an-extinction-risk-intervention)
-- [We are giving $10k as forecasting micro-grants](https://nunosempere.com/2022/02/08/we-are-giving-usd10k-as-forecasting-micro-grants)
-- [Five steps for quantifying speculative interventions](https://nunosempere.com/2022/02/18/five-steps-for-quantifying-speculative-interventions)
-- [Forecasting Newsletter: February 2022](https://nunosempere.com/2022/03/05/forecasting-newsletter-february-2022)
-- [Samotsvety Nuclear Risk Forecasts — March 2022](https://nunosempere.com/2022/03/10/samotsvety-nuclear-risk-forecasts-march-2022)
-- [Valuing research works by eliciting comparisons from EA researchers](https://nunosempere.com/2022/03/17/valuing-research-works-by-eliciting-comparisons-from-ea)
-- [Forecasting Newsletter: April 2222](https://nunosempere.com/2022/04/01/forecasting-newsletter-april-2222)
-- [Forecasting Newsletter: March 2022](https://nunosempere.com/2022/04/05/forecasting-newsletter-march-2022)
-- [A quick note on the value of donations](https://nunosempere.com/2022/04/06/note-donations)
-- [Open Philanthopy allocation by cause area](https://nunosempere.com/2022/04/07/openphil-allocation)
-- [Better scoring rules](https://nunosempere.com/2022/04/16/optimal-scoring)
-- [Simple Squiggle](https://nunosempere.com/2022/04/17/simple-squiggle)
-- [EA Forum Lowdown: April 2022](https://nunosempere.com/2022/05/01/ea-forum-lowdown-april-2022)
-- [Forecasting Newsletter: April 2022](https://nunosempere.com/2022/05/10/forecasting-newsletter-april-2022)
-- [Infinite Ethics 101: Stochastic and Statewise Dominance as a Backup Decision Theory when Expected Values Fail](https://nunosempere.com/2022/05/20/infinite-ethics-101)
-- [Forecasting Newsletter: May 2022](https://nunosempere.com/2022/06/03/forecasting-newsletter-may-2022)
-- [The Tragedy of Calisto and Melibea](https://nunosempere.com/2022/06/14/the-tragedy-of-calisto-and-melibea)
-- [A Critical Review of Open Philanthropy’s Bet On Criminal Justice Reform](https://nunosempere.com/2022/06/16/criminal-justice)
-- [Cancellation insurance](https://nunosempere.com/2022/07/04/cancellation-insurance)
-- [I will bet on your success on Manifold Markets](https://nunosempere.com/2022/07/05/i-will-bet-on-your-success-or-failure)
-- [The Maximum Vindictiveness Strategy](https://nunosempere.com/2022/07/09/maximum-vindictiveness-strategy)
-- [Forecasting Newsletter: June 2022](https://nunosempere.com/2022/07/12/forecasting-newsletter-june-2022)
-- [Some thoughts on Turing.jl](https://nunosempere.com/2022/07/23/thoughts-on-turing-julia)
-- [How much would I have to run to lose 20 kilograms?](https://nunosempere.com/2022/07/27/how-much-to-run-to-lose-20-kilograms)
-- [$1,000 Squiggle Experimentation Challenge](https://nunosempere.com/2022/08/04/usd1-000-squiggle-experimentation-challenge)
-- [Forecasting Newsletter: July 2022](https://nunosempere.com/2022/08/08/forecasting-newsletter-july-2022)
-- [A concern about the "evolutionary anchor" of Ajeya Cotra's report](https://nunosempere.com/2022/08/10/evolutionary-anchor)
-- [What do Americans think 'cutlery' means?](https://nunosempere.com/2022/08/18/what-do-americans-mean-by-cutlery)
-- [Introduction to Fermi estimates](https://nunosempere.com/2022/08/20/fermi-introduction)
-- [A comment on Cox's theorem and probabilistic inductivism.](https://nunosempere.com/2022/08/31/on-cox-s-theorem-and-probabilistic-induction)
-- [Simple estimation examples in Squiggle](https://nunosempere.com/2022/09/02/simple-estimation-examples-in-squiggle)
-- [Forecasting Newsletter: August 2022.](https://nunosempere.com/2022/09/10/forecasting-newsletter-august-2022)
-- [Distribution of salaries in Spain](https://nunosempere.com/2022/09/11/salary-ranges-spain)
-- [An experiment eliciting relative estimates for Open Philanthropy’s 2018 AI safety grants](https://nunosempere.com/2022/09/12/an-experiment-eliciting-relative-estimates-for-open)
-- [Use distributions to more parsimoniously estimate impact](https://nunosempere.com/2022/09/15/use-distributions-to-more-parsimoniously-estimate-impact)
-- [Utilitarianism: An Incomplete Approach](https://nunosempere.com/2022/09/19/utilitarianism-an-incomplete-approach)
-- [$5k challenge to quantify the impact of 80,000 hours' top career paths](https://nunosempere.com/2022/09/23/usd5k-challenge-to-quantify-the-impact-of-80-000-hours-top)
-- [Use a less coarse analysis of AMF beneficiary age and consider counterfactual deaths](https://nunosempere.com/2022/09/28/granular-AMF)
-- [Samotsvety Nuclear Risk update October 2022](https://nunosempere.com/2022/10/03/samotsvety-nuclear-risk-update-october-2022)
-- [Five slightly more hardcore Squiggle models.](https://nunosempere.com/2022/10/10/five-slightly-more-hardcore-squiggle-models)
-- [Forecasting Newsletter: September 2022.](https://nunosempere.com/2022/10/12/forecasting-newsletter-september-2022)
-- [Sometimes you give to the commons, and sometimes you take from the commons](https://nunosempere.com/2022/10/17/the-commons)
-- [Brief evaluations of top-10 billionnaires](https://nunosempere.com/2022/10/21/brief-evaluations-of-top-10-billionnaires)
-- [Are flimsy evaluations worth it?](https://nunosempere.com/2022/10/27/are-flimsy-evaluations-worth-it)
-- [Brief thoughts on my personal research strategy](https://nunosempere.com/2022/10/31/brief-thoughts-personal-strategy)
-- [Metaforecast late 2022 update: GraphQL API, Charts, better infrastructure behind the scenes.](https://nunosempere.com/2022/11/04/metaforecast-late-2022-update)
-- [Tracking the money flows in forecasting](https://nunosempere.com/2022/11/06/forecasting-money-flows)
-- [Forecasting Newsletter for October 2022](https://nunosempere.com/2022/11/15/forecasting-newsletter-for-october-2022)
-- [Some data on the stock of EA™ funding ](https://nunosempere.com/2022/11/20/brief-update-ea-funding)
-- [List of past fraudsters similar to SBF](https://nunosempere.com/2022/11/28/list-of-past-fraudsters-similar-to-sbf)
-- [Goodhart's law and aligning politics with human flourishing ](https://nunosempere.com/2022/12/05/goodhart-politics)
-- [COVID-19 in rural Balochistan, Pakistan: Two interviews from May 2020](https://nunosempere.com/2022/12/16/covid-19-in-rural-balochistan-pakistan-two-interviews-from-1)
-- [Hacking on rose](https://nunosempere.com/2022/12/20/hacking-on-rose)
-- [A basic argument for AI risk](https://nunosempere.com/2022/12/23/ai-risk-rohin-shah)
+- [Forecasting Newsletter: December 2021](https://nunosempere.com/blog/2022/01/10/forecasting-newsletter-december-2021)
+- [Forecasting Newsletter: Looking back at 2021.](https://nunosempere.com/blog/2022/01/27/forecasting-newsletter-looking-back-at-2021)
+- [Forecasting Newsletter: January 2022](https://nunosempere.com/blog/2022/02/03/forecasting-newsletter-january-2022)
+- [Splitting the timeline as an extinction risk intervention](https://nunosempere.com/blog/2022/02/06/splitting-the-timeline-as-an-extinction-risk-intervention)
+- [We are giving $10k as forecasting micro-grants](https://nunosempere.com/blog/2022/02/08/we-are-giving-usd10k-as-forecasting-micro-grants)
+- [Five steps for quantifying speculative interventions](https://nunosempere.com/blog/2022/02/18/five-steps-for-quantifying-speculative-interventions)
+- [Forecasting Newsletter: February 2022](https://nunosempere.com/blog/2022/03/05/forecasting-newsletter-february-2022)
+- [Samotsvety Nuclear Risk Forecasts — March 2022](https://nunosempere.com/blog/2022/03/10/samotsvety-nuclear-risk-forecasts-march-2022)
+- [Valuing research works by eliciting comparisons from EA researchers](https://nunosempere.com/blog/2022/03/17/valuing-research-works-by-eliciting-comparisons-from-ea)
+- [Forecasting Newsletter: April 2222](https://nunosempere.com/blog/2022/04/01/forecasting-newsletter-april-2222)
+- [Forecasting Newsletter: March 2022](https://nunosempere.com/blog/2022/04/05/forecasting-newsletter-march-2022)
+- [A quick note on the value of donations](https://nunosempere.com/blog/2022/04/06/note-donations)
+- [Open Philanthopy allocation by cause area](https://nunosempere.com/blog/2022/04/07/openphil-allocation)
+- [Better scoring rules](https://nunosempere.com/blog/2022/04/16/optimal-scoring)
+- [Simple Squiggle](https://nunosempere.com/blog/2022/04/17/simple-squiggle)
+- [EA Forum Lowdown: April 2022](https://nunosempere.com/blog/2022/05/01/ea-forum-lowdown-april-2022)
+- [Forecasting Newsletter: April 2022](https://nunosempere.com/blog/2022/05/10/forecasting-newsletter-april-2022)
+- [Infinite Ethics 101: Stochastic and Statewise Dominance as a Backup Decision Theory when Expected Values Fail](https://nunosempere.com/blog/2022/05/20/infinite-ethics-101)
+- [Forecasting Newsletter: May 2022](https://nunosempere.com/blog/2022/06/03/forecasting-newsletter-may-2022)
+- [The Tragedy of Calisto and Melibea](https://nunosempere.com/blog/2022/06/14/the-tragedy-of-calisto-and-melibea)
+- [A Critical Review of Open Philanthropy’s Bet On Criminal Justice Reform](https://nunosempere.com/blog/2022/06/16/criminal-justice)
+- [Cancellation insurance](https://nunosempere.com/blog/2022/07/04/cancellation-insurance)
+- [I will bet on your success on Manifold Markets](https://nunosempere.com/blog/2022/07/05/i-will-bet-on-your-success-or-failure)
+- [The Maximum Vindictiveness Strategy](https://nunosempere.com/blog/2022/07/09/maximum-vindictiveness-strategy)
+- [Forecasting Newsletter: June 2022](https://nunosempere.com/blog/2022/07/12/forecasting-newsletter-june-2022)
+- [Some thoughts on Turing.jl](https://nunosempere.com/blog/2022/07/23/thoughts-on-turing-julia)
+- [How much would I have to run to lose 20 kilograms?](https://nunosempere.com/blog/2022/07/27/how-much-to-run-to-lose-20-kilograms)
+- [$1,000 Squiggle Experimentation Challenge](https://nunosempere.com/blog/2022/08/04/usd1-000-squiggle-experimentation-challenge)
+- [Forecasting Newsletter: July 2022](https://nunosempere.com/blog/2022/08/08/forecasting-newsletter-july-2022)
+- [A concern about the "evolutionary anchor" of Ajeya Cotra's report](https://nunosempere.com/blog/2022/08/10/evolutionary-anchor)
+- [What do Americans think 'cutlery' means?](https://nunosempere.com/blog/2022/08/18/what-do-americans-mean-by-cutlery)
+- [Introduction to Fermi estimates](https://nunosempere.com/blog/2022/08/20/fermi-introduction)
+- [A comment on Cox's theorem and probabilistic inductivism.](https://nunosempere.com/blog/2022/08/31/on-cox-s-theorem-and-probabilistic-induction)
+- [Simple estimation examples in Squiggle](https://nunosempere.com/blog/2022/09/02/simple-estimation-examples-in-squiggle)
+- [Forecasting Newsletter: August 2022.](https://nunosempere.com/blog/2022/09/10/forecasting-newsletter-august-2022)
+- [Distribution of salaries in Spain](https://nunosempere.com/blog/2022/09/11/salary-ranges-spain)
+- [An experiment eliciting relative estimates for Open Philanthropy’s 2018 AI safety grants](https://nunosempere.com/blog/2022/09/12/an-experiment-eliciting-relative-estimates-for-open)
+- [Use distributions to more parsimoniously estimate impact](https://nunosempere.com/blog/2022/09/15/use-distributions-to-more-parsimoniously-estimate-impact)
+- [Utilitarianism: An Incomplete Approach](https://nunosempere.com/blog/2022/09/19/utilitarianism-an-incomplete-approach)
+- [$5k challenge to quantify the impact of 80,000 hours' top career paths](https://nunosempere.com/blog/2022/09/23/usd5k-challenge-to-quantify-the-impact-of-80-000-hours-top)
+- [Use a less coarse analysis of AMF beneficiary age and consider counterfactual deaths](https://nunosempere.com/blog/2022/09/28/granular-AMF)
+- [Samotsvety Nuclear Risk update October 2022](https://nunosempere.com/blog/2022/10/03/samotsvety-nuclear-risk-update-october-2022)
+- [Five slightly more hardcore Squiggle models.](https://nunosempere.com/blog/2022/10/10/five-slightly-more-hardcore-squiggle-models)
+- [Forecasting Newsletter: September 2022.](https://nunosempere.com/blog/2022/10/12/forecasting-newsletter-september-2022)
+- [Sometimes you give to the commons, and sometimes you take from the commons](https://nunosempere.com/blog/2022/10/17/the-commons)
+- [Brief evaluations of top-10 billionnaires](https://nunosempere.com/blog/2022/10/21/brief-evaluations-of-top-10-billionnaires)
+- [Are flimsy evaluations worth it?](https://nunosempere.com/blog/2022/10/27/are-flimsy-evaluations-worth-it)
+- [Brief thoughts on my personal research strategy](https://nunosempere.com/blog/2022/10/31/brief-thoughts-personal-strategy)
+- [Metaforecast late 2022 update: GraphQL API, Charts, better infrastructure behind the scenes.](https://nunosempere.com/blog/2022/11/04/metaforecast-late-2022-update)
+- [Tracking the money flows in forecasting](https://nunosempere.com/blog/2022/11/06/forecasting-money-flows)
+- [Forecasting Newsletter for October 2022](https://nunosempere.com/blog/2022/11/15/forecasting-newsletter-for-october-2022)
+- [Some data on the stock of EA™ funding ](https://nunosempere.com/blog/2022/11/20/brief-update-ea-funding)
+- [List of past fraudsters similar to SBF](https://nunosempere.com/blog/2022/11/28/list-of-past-fraudsters-similar-to-sbf)
+- [Goodhart's law and aligning politics with human flourishing ](https://nunosempere.com/blog/2022/12/05/goodhart-politics)
+- [COVID-19 in rural Balochistan, Pakistan: Two interviews from May 2020](https://nunosempere.com/blog/2022/12/16/covid-19-in-rural-balochistan-pakistan-two-interviews-from-1)
+- [Hacking on rose](https://nunosempere.com/blog/2022/12/20/hacking-on-rose)
+- [A basic argument for AI risk](https://nunosempere.com/blog/2022/12/23/ai-risk-rohin-shah)

@@ -46,7 +46,7 @@ As for suggestions, my main one would be to add more analytical clarity, and to
 
 The forecast is conditional on scaling laws continuing as they have, and on the various analytical assumptions not introducing too much error. And it's not a forecast of when models will be transformative, but of an upper bound, because as we mentioned at the beginning, indistinguishability is a sufficient but not a necessary condition for transformative AI. The authors point this out at the beginning, but I think this could be pointed out more obviously.
 
-The text also generally needs an editor (e.g., use the first person plural, as there are two authors). As I was reading it, I felt the compulsion to rewrite it in better prose. But I didn't think that it was worth it for me to do that, or to point out style mistakes—besides my wish for greater clarity—because you can just hire an editor for this. And also, an alert reader should be able to extract the core of what you are saying even though prose could be improved. I did write down some impressions as I was reading in a different document, though.
+The text also generally needs an editor (e.g., use the first person plural, as there are two authors). As I was reading it, I felt the compulsion to rewrite it in better prose. But I did not think that it was worth it for me to do that, or to point out style mistakes—besides my wish for greater clarity—because you can just hire an editor for this. And also, an alert reader should be able to extract the core of what you are saying even though prose could be improved. I did write down some impressions as I was reading in a different document, though.
 
 Overall I liked it, and would recommend that it be published. It's the kind of thing that, even if one thinks that it is not enough for a forecast on its own, seems like it would be a valuable input into other forecasts.
 

@@ -1,4 +1,4 @@
-Software I am making available to my corner of the internet
+Webpages I am making available to my corner of the internet
 ===========================================================
 
 Here is a list of internet services that I make freely available to friends and allies, broadly defined—if you are reading this, you qualify. These are ordered roughly in order of usefulness.

Binary file not shown.

|

After Width: | Height: | Size: 31 KiB |

|

|

@ -0,0 +1,111 @@

|

|||

<h1>Incorporate keeping track of accuracy into X (previously Twitter)</h1>

|

||||

|

||||

<p><strong>tl;dr</strong>: Incorporate keeping track of accuracy into X<sup id="fnref:1"><a href="#fn:1" rel="footnote">1</a></sup>. This contributes to the goal of making X the chief source of information, and strengthens humanity by providing better epistemic incentives and better mechanisms to separate the wheat from the chaff in terms of getting at the truth together.</p>

|

||||

|

||||

<h2>Why do this?</h2>

|

||||

|

||||

<p><img src="https://images.nunosempere.com/blog/2023/08/19/keeping-track-of-accuracy-on-twitter/michael-dragon.jpg" alt="St Michael Killing the Dragon - public domain, via Wikimedia commons" style="width: 30% !important"/></p>

|

||||

|

||||

|

||||

<ul>

|

||||

<li>Because it can be done</li>

|

||||

<li>Because keeping track of accuracy allows people to separate the wheat from the chaff at scale, which would make humanity more powerful, more <a href="https://nunosempere.com/blog/2023/07/19/better-harder-faster-stronger/">formidable</a>.</li>

|

||||

<li>Because it is an asymmetric weapon, like community notes, that helps the good guys who are trying to get at what is true much more than the bad guys who are either not trying to do that or are bad at it.</li>

|

||||

<li>Because you can’t get better at learning true things if you aren’t trying, and current social media platforms are, for the most part, not incentivizing that trying.</li>

|

||||

<li>Because rival organizations—like the New York Times, Instagram Threads, or school textbooks—would be made more obsolete by this kind of functionality.</li>

|

||||

</ul>

|

||||

|

||||

|

||||

<h2>Core functionality</h2>

|

||||

|

||||

<p>I think that you can distill the core of keeping track of accuracy to three elements<sup id="fnref:2"><a href="#fn:2" rel="footnote">2</a></sup>: predict, resolve, and tally. You can see a minimal implementation of this functionality in <60 lines of bash <a href="https://github.com/NunoSempere/PredictResolveTally/tree/master">here</a>.</p>

|

||||

|

||||

<h3>predict</h3>

|

||||

|

||||

<p>make a prediction. This prediction could take the form of</p>

|

||||

|

||||

<ol>

|

||||

<li>a yes/no sentence, like “By 2030, I say that Tesla will be worth $1T”</li>

|

||||

<li>a probability, like “I say that there is a 70% chance that by 2030, Tesla will be worth $1T”</li>

|

||||

<li>a range, like “I think that by 2030, Tesla’s market cap will be worth between $800B and $5T”</li>

|

||||

<li>a probability distribution, like “Here is my probability distribution over how likely each possible market cap of Tesla is by 2030”</li>

|

||||

<li>more complicated options, e.g., a forecasting function that gives an estimate of market cap at every point in time.</li>

|

||||

</ol>

|

||||

|

||||

|

||||

<p>I think that the sweet spot is on #2: asking for probabilities. #1 doesn’t capture that we normally have uncertainty about events—e.g., in the recent superconductor debacle, we were not completely sure one way or the other until the end—, and it is tricky to have a system which scores both #3-#5 and #2. Particularly at scale, I would lean towards recommending using probabilities rather than something more ambitious, at first.</p>

|

||||

|

||||

<p>Note that each example gave both a statement that was being predicted, and a date by which the prediction is resolved.</p>

|

||||

|

||||

<h3>resolve</h3>

|

||||

|

||||

<p>Once the date of resolution has been reached, a prediction can be marked as true/false/ambiguous. Ambiguous resolutions are bad, because the people who have put effort into making a prediction feel like their time has been wasted, so it is good to minimize them.</p>

|

||||

|

||||

<p>You can have a few distinct methods of resolution. Here are a few:</p>

|

||||

|

||||

<ul>

|

||||

<li>Every question has a question creator, who resolves it</li>

|

||||

<li>Each person creates and resolves their own predictions</li>

|

||||

<li>You have a community-notes style mechanism for resolving questions</li>

|

||||

<li>You have a jury of randomly chosen peers who resolves the prediction</li>

|

||||

<li>You have a jury of previously trusted members, who resolves the question</li>

|

||||

<li>You can use a <a href="https://en.wikipedia.org/wiki/Keynesian_beauty_contest">Keynesian Beauty Contest</a>, like Kleros or UMA, where judges are rewarded for agreeing with the majority opinion of other judges. This disincentivizes correct resolutions for unpopular-but-true questions, so I would hesitate before using it.</li>

|

||||

</ul>

|

||||

|

||||

|

||||

<p>Note that you can have resolution methods that can be challenged, like the lower court/court of appeals/supreme court system in the US. For example, you could have a system where initially a question is resolved by a small number of randomly chosen jurors, but if someone gives a strong signal that they object to the resolution—e.g., if they pay for it, or if they spend one of a few “appeals” tokens—then the question is resolved by a larger pool of jurors.</p>

|

||||

|

||||

<p>Note that the resolution method will shape the flavour of your prediction functionality, and constrain the types of questions that people can forecast on. You can have a more anarchic system, where everyone can instantly create a question and predict on it. Then, people will create many more questions, but perhaps they will have a bias towards resolving questions in their own favour, and you will have slightly duplicate questions. Then you will get something closer to <a href="https://manifold.markets/">Manifold Markets</a>. Or you could have a mechanism where people propose questions and these are made robust to corner cases in their resolution criteria by volunteers, and then later resolved by a jury of volunteers. Then you will get something like <a href="https://www.metaculus.com/">Metaculus</a>, where you have fewer questions but these are of higher quality and have more reliable resolutions.</p>

|

||||

|

||||

<p>Ultimately, I’m not saying that the resolution method is unimportant. But I think there is a temptation to nerd out too much about the specifics, and having some resolution method that is transparently outlined and shipping it quickly seems much better than getting stuck at this step.</p>

|

||||

|

||||

<h3>tally</h3>

|

||||

|

||||

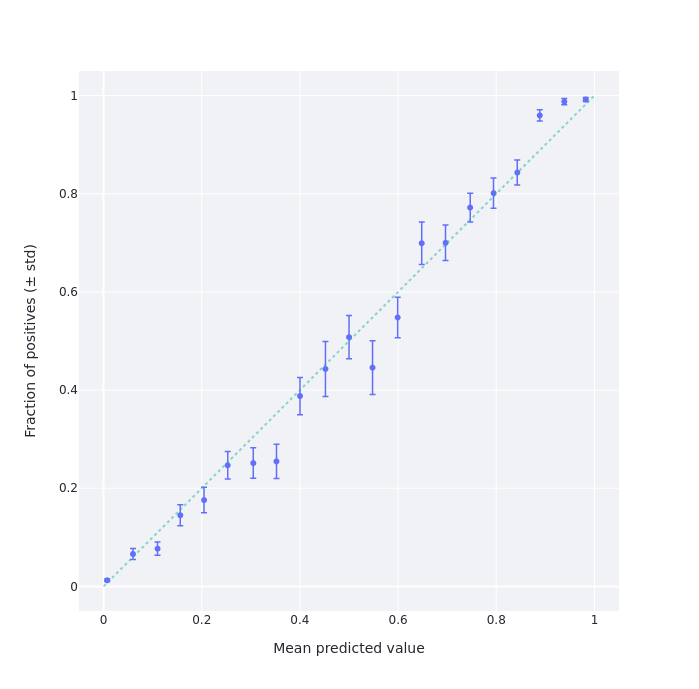

<p>Lastly, present the information about what proportion of people’s predictions come true. E.g., of the times I have predicted a 60% likelihood of something, how often has it come true? Ditto for other percentages. These are normally binned to produce a calibration chart, like the following:</p>

|

||||

|

||||

<p><img src="https://images.nunosempere.com/blog/2023/08/19/keeping-track-of-accuracy-on-twitter/calibrationChart2.png" alt="my calibration chart from Good Judgment Open" /></p>

|

||||

|

||||

<p>On top of that starting point, you can also do more elaborate things:</p>

|

||||

|

||||

<ul>

|

||||

<li>You can have a summary statistic—a proper scoring rule, like the Brier score or a log score—that summarizes how good you are at prediction “in general”. This might involve comparing your performance to the performance of people who predicted on the same questions.</li>

|

||||

<li>Previously, you could have allowed people to bet against each other. Then, their profits would indicate how good they are. I think this might be too complicated at Twitter scale, at least at first.</li>

|

||||

</ul>

|

||||

|

||||

|

||||

<p><a href="https://arxiv.org/abs/2106.11248">Here</a> is a review of some mistakes people have previously made when scoring these kinds of forecasts. For example, if you have some per-question accuracy reward, people will gravitate towards forecasting on easier rather than on more useful questions. These kinds of considerations are important, particularly since they will determine who will be at the top of some scoring leaderboard, if there is any such. Generally, <a href="https://arxiv.org/abs/1803.04585">Goodhart’s law</a> is going to be a problem here. But again, having <em>some</em> tallying mechanism seems way better than the current information environment.</p>

|

||||

|

||||

<p>Once you have some tallying—whether a calibration chart, a score from a proper scoring rule, or some profit in Musk-Bucks<sup id="fnref:3"><a href="#fn:3" rel="footnote">3</a></sup>, such a tally could:</p>

|

||||

|

||||

<ul>

|

||||

<li>be semi-prominently displayed so that people can look to it when deciding how much to trust an account,</li>

|

||||

<li>be used by X’s algorithm to show more accurate accounts a bit more at the margin,</li>

|

||||

<li>provide an incentive for people to be accurate,</li>

|

||||

<li>provide a way for people who want to become more accurate to track their performance</li>

|

||||

</ul>

|

||||

|

||||

|

||||

<p>When dealing with catastrophes, wars, discoveries, and generally with events that challenge humanity’s ability to figure out what is going on, having these mechanisms in place would help humanity make better decisions about who to listen to: to listen not to who is loudest but to who is most right.</p>

|

||||

|

||||

<h2>Conclusion</h2>

|

||||

|

||||

<p>X can do this. It would help with its goal of outcompeting other sources of information, and it would do this fair and square by improving humanity’s collective ability to get at the truth. I don’t know what other challenges and plans Musk has in store for X, but I would strongly consider adding this functionality to it.</p>

|

||||

|

||||

<p>

|

||||

<section id='isso-thread'>

|

||||

<noscript>javascript needs to be activated to view comments.</noscript>

|

||||

</section>

|

||||

</p>

|

||||

|

||||

<div class="footnotes">

|

||||

<hr/>

|

||||

<ol>

|

||||

<li id="fn:1">

|

||||

previously Twitter<a href="#fnref:1" rev="footnote">↩</a></li>

|

||||

<li id="fn:2">

|

||||

Ok, four, if we count question creation and prediction as distinct. But I like <a href="https://bw.vern.cc/worm/wiki/Parahuman_Response_Team">PRT</a> as an acronym.<a href="#fnref:2" rev="footnote">↩</a></li>

|

||||

<li id="fn:3">

|

||||

Using real dollars is probably illegal/too regulated in America.<a href="#fnref:3" rev="footnote">↩</a></li>

|

||||

</ol>

|

||||

</div>

|

||||

|

||||

|

|

@ -0,0 +1,28 @@

|

|||

Twitter Improvement Proposal: Incorporate Prediction Markets, Give a Death Blow to Punditry

|

||||

===========================================================================================

|

||||

|

||||

**tl;dr**: Incorporate prediction markets into Twitter, give a death blow to punditry.

|

||||

|

||||

## The core idea

|

||||

|

||||

A prediction market is...

|

||||

|

||||

## Why do this?

|

||||

|

||||

Because it will usher humanity into an era of epistemic greatness.

|

||||

|

||||

## Caveats and downsides

|

||||

|

||||

Play money, though maybe with goods and services

|

||||

|

||||

Give 1000 doublons to all semi-active accounts on the 22/02/2022

|

||||

|

||||

## How to go about this?

|

||||

|

||||

One possibility might be to acquihire [Manifold Markets](https://manifold.markets/) for something like $20-$50M. They are a team of competent engineers with a fair share of ex-Googlers, who have been doing a good job at building a prediction platform from scratch, and iterating on it. So one possible step might be to have the Manifold guys come up with demo functionality, and then pair them with a team who understands how one would go about doing this at Twitter-like scale.

|

||||

|

||||

|

||||

|

||||

However, I am not really cognizant of the technical challenges here, and it's possible that might not be the best approach ¯\_(ツ)_/¯

|

||||

|

||||

## In conclusion

|

||||

Binary file not shown.

|

After Width: | Height: | Size: 17 MiB |

89

blog/2023/08/19/keep-track-of-accuracy-on-twitter/index.md

Normal file

|

|

@ -0,0 +1,89 @@

|

|||

Incorporate keeping track of accuracy into X (previously Twitter)

|

||||

====

|

||||

|

||||

**tl;dr**: Incorporate keeping track of accuracy into X[^1]. This contributes to the goal of making X the chief source of information, and strengthens humanity by providing better epistemic incentives and better mechanisms to separate the wheat from the chaff in terms of getting at the truth together.

|

||||

|

||||

[^1]: previously Twitter

|

||||

|

||||

## Why do this?

|

||||

|

||||

<p><img src="https://images.nunosempere.com/blog/2023/08/19/keeping-track-of-accuracy-on-twitter/michael-dragon.png" alt="St Michael Killing the Dragon - public domain, via Wikimedia commons" style="width: 30% !important"/></p>

|

||||

|

||||

- Because it can be done

|

||||

- Because keeping track of accuracy allows people to separate the wheat from the chaff at scale, which would make humanity more powerful, more [formidable](https://nunosempere.com/blog/2023/07/19/better-harder-faster-stronger/).

|

||||

- Because it is an asymmetric weapon, like community notes, that helps the good guys who are trying to get at what is true much more than the bad guys who are either not trying to do that or are bad at it.

|

||||

- Because you can't get better at learning true things if you aren't trying, and current social media platforms are, for the most part, not incentivizing that trying.

|

||||

- Because rival organizations---like the New York Times, Instagram Threads, or school textbooks---would be made more obsolete by this kind of functionality.

|

||||

|

||||

## Core functionality

|

||||

|

||||

I think that you can distill the core of keeping track of accuracy to three elements[^2]: predict, resolve, and tally. You can see a minimal implementation of this functionality in <60 lines of bash [here](https://github.com/NunoSempere/PredictResolveTally/tree/master).

|

||||

|

||||

[^2]: Ok, four, if we count question creation and prediction as distinct. But I like [PRT](https://bw.vern.cc/worm/wiki/Parahuman_Response_Team) as an acronym.

|

||||

|

||||

### predict

|

||||

|

||||

make a prediction. This prediction could take the form of

|

||||

|

||||

1. a yes/no sentence, like "By 2030, I say that Tesla will be worth $1T"

|

||||

2. a probability, like "I say that there is a 70% chance that by 2030, Tesla will be worth $1T"

|

||||

3. a range, like "I think that by 2030, Tesla's market cap will be worth between $800B and $5T"

|

||||

4. a probability distribution, like "Here is my probability distribution over how likely each possible market cap of Tesla is by 2030"

|

||||

5. more complicated options, e.g., a forecasting function that gives an estimate of market cap at every point in time.

|

||||

|

||||

I think that the sweet spot is on #2: asking for probabilities. #1 doesn't capture that we normally have uncertainty about events—e.g., in the recent superconductor debacle, we were not completely sure one way or the other until the end—, and it is tricky to have a system which scores both #3-#5 and #2. Particularly at scale, I would lean towards recommending using probabilities rather than something more ambitious, at first.

|

||||

|

||||

Note that each example gave both a statement that was being predicted, and a date by which the prediction is resolved.
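A prediction of type #2 can be captured in a very small record. The following is only a sketch: the class name, the field names, the example account, and the choice of 1 January 2030 as the resolution date are all assumptions made for illustration, not part of any existing X functionality.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Prediction:
    """One probabilistic prediction (option #2); all field names are hypothetical."""
    author: str
    statement: str                  # the claim being predicted
    probability: float              # stated chance that the statement turns out true
    resolves_on: date               # date by which the prediction is resolved
    outcome: Optional[bool] = None  # None until resolved, then True or False

    def __post_init__(self) -> None:
        # Reject anything that is not a valid probability.
        if not 0.0 <= self.probability <= 1.0:
            raise ValueError("probability must be between 0 and 1")


# Hypothetical example; "by 2030" is read here as 1 January 2030.
tesla = Prediction(
    author="@example_account",
    statement="Tesla will be worth $1T",
    probability=0.70,
    resolves_on=date(2030, 1, 1),
)
```

Keeping the statement, the probability, and the resolution date together is what makes the later resolve and tally steps mechanical.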

|

||||

|

||||

### resolve

|

||||

|

||||

Once the date of resolution has been reached, a prediction can be marked as true/false/ambiguous. Ambiguous resolutions are bad, because the people who have put effort into making a prediction feel like their time has been wasted, so it is good to minimize them.

|

||||

|

||||

You can have a few distinct methods of resolution. Here are a few:

|

||||

|

||||

- Every question has a question creator, who resolves it

|

||||

- Each person creates and resolves their own predictions

|

||||

- You have a community-notes style mechanism for resolving questions

|

||||

- You have a jury of randomly chosen peers who resolves the prediction

|

||||

- You have a jury of previously trusted members, who resolves the question

|

||||

- You can use a [Keynesian Beauty Contest](https://en.wikipedia.org/wiki/Keynesian_beauty_contest), like Kleros or UMA, where judges are rewarded for agreeing with the majority opinion of other judges. This disincentivizes correct resolutions for unpopular-but-true questions, so I would hesitate before using it.

|

||||

|

||||

Note that you can have resolution methods that can be challenged, like the lower court/court of appeals/supreme court system in the US. For example, you could have a system where initially a question is resolved by a small number of randomly chosen jurors, but if someone gives a strong signal that they object to the resolution—e.g., if they pay for it, or if they spend one of a few "appeals" tokens—then the question is resolved by a larger pool of jurors.
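The two-tier jury idea can be sketched roughly as follows. This assumes simple majority voting and treats an exact tie as an ambiguous resolution; the function names are hypothetical, and how jurors are sampled or how an appeal is paid for is left out.

```python
from typing import Optional, Sequence


def majority_verdict(votes: Sequence[bool]) -> Optional[bool]:
    """Return the majority vote, or None (ambiguous) on an exact tie."""
    yes = sum(votes)
    no = len(votes) - yes
    if yes == no:
        return None
    return yes > no


def resolve_with_appeal(
    small_jury_votes: Sequence[bool],
    large_jury_votes: Sequence[bool],
    appealed: bool,
) -> Optional[bool]:
    """Use the small jury's verdict unless someone appealed; then the larger pool decides."""
    if appealed:
        return majority_verdict(large_jury_votes)
    return majority_verdict(small_jury_votes)
```

In a real system the larger pool would only be convened after an appeal; passing both sets of votes up front just keeps the sketch short.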

|

||||

|

||||

Note that the resolution method will shape the flavour of your prediction functionality, and constrain the types of questions that people can forecast on. You can have a more anarchic system, where everyone can instantly create a question and predict on it. Then, people will create many more questions, but perhaps they will have a bias towards resolving questions in their own favour, and you will have slightly duplicate questions. Then you will get something closer to [Manifold Markets](https://manifold.markets/). Or you could have a mechanism where people propose questions and these are made robust to corner cases in their resolution criteria by volunteers, and then later resolved by a jury of volunteers. Then you will get something like [Metaculus](https://www.metaculus.com/), where you have fewer questions but these are of higher quality and have more reliable resolutions.

|

||||

|

||||

Ultimately, I'm not saying that the resolution method is unimportant. But I think there is a temptation to nerd out too much about the specifics, and having some resolution method that is transparently outlined and shipping it quickly seems much better than getting stuck at this step.

|

||||

|

||||

### tally

|

||||

|

||||

Lastly, present the information about what proportion of people's predictions come true. E.g., of the times I have predicted a 60% likelihood of something, how often has it come true? Ditto for other percentages. These are normally binned to produce a calibration chart, like the following:
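The binning step behind such a chart can be sketched in a few lines. This is a minimal illustration, assuming resolved predictions arrive as (probability, outcome) pairs and that 10-percentage-point bins are wanted; the function name and the bin width are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def calibration_table(
    resolved: Iterable[Tuple[float, bool]], bin_width_pct: int = 10
) -> Dict[int, Tuple[int, float]]:
    """Group (probability, outcome) pairs into bins.

    Returns {bin lower edge in percent: (count, observed frequency of True)}.
    """
    bins = defaultdict(list)
    for probability, outcome in resolved:
        pct = round(probability * 100)
        # Put 100% into the top bin rather than into a bin of its own.
        lower_edge = min(pct // bin_width_pct * bin_width_pct, 100 - bin_width_pct)
        bins[lower_edge].append(outcome)
    return {
        edge: (len(outcomes), sum(outcomes) / len(outcomes))
        for edge, outcomes in sorted(bins.items())
    }


example = [(0.6, True), (0.6, True), (0.6, False), (0.65, False), (0.9, True)]
table = calibration_table(example)
```

A well-calibrated forecaster would see an observed frequency in each bin that is close to the probabilities that fall into it.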

|

||||

|

||||

|

||||

|

||||

On top of that starting point, you can also do more elaborate things:

|

||||

|

||||

- You can have a summary statistic—a proper scoring rule, like the Brier score or a log score—that summarizes how good you are at prediction "in general". This might involve comparing your performance to the performance of people who predicted on the same questions.

|

||||

- Previously, you could have allowed people to bet against each other. Then, their profits would indicate how good they are. I think this might be too complicated at Twitter scale, at least at first.
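The two scoring rules named above can be sketched as follows, again assuming resolved binary predictions given as (probability, outcome) pairs. The function names are hypothetical, and comparing against other forecasters on the same questions is not shown.

```python
import math
from typing import Iterable, Tuple


def brier_score(resolved: Iterable[Tuple[float, bool]]) -> float:
    """Mean squared error between stated probabilities and 0/1 outcomes (lower is better)."""
    pairs = list(resolved)
    return sum((p - float(outcome)) ** 2 for p, outcome in pairs) / len(pairs)


def log_score(resolved: Iterable[Tuple[float, bool]]) -> float:
    """Mean log of the probability assigned to what actually happened (higher is better).

    Raises ValueError if a prediction gave probability 0 to the realized outcome.
    """
    pairs = list(resolved)
    return sum(math.log(p if outcome else 1.0 - p) for p, outcome in pairs) / len(pairs)
```

Always answering 50% gives a Brier score of 0.25, so that is a natural baseline to beat.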

|

||||

|

||||

[Here](https://arxiv.org/abs/2106.11248) is a review of some mistakes people have previously made when scoring these kinds of forecasts. For example, if you have some per-question accuracy reward, people will gravitate towards forecasting on easier rather than on more useful questions. These kinds of considerations are important, particularly since they will determine who will be at the top of some scoring leaderboard, if there is any such. Generally, [Goodhart's law](https://arxiv.org/abs/1803.04585) is going to be a problem here. But again, having *some* tallying mechanism seems way better than the current information environment.

|

||||

|

||||

Once you have some tallying—whether a calibration chart, a score from a proper scoring rule, or some profit in Musk-Bucks[^3], such a tally could:

|

||||

|

||||

[^3]: Using real dollars is probably illegal/too regulated in America.

|

||||

|

||||

- be semi-prominently displayed so that people can look to it when deciding how much to trust an account,

|

||||

- be used by X's algorithm to show more accurate accounts a bit more at the margin,

|

||||

- provide an incentive for people to be accurate,

|

||||

- provide a way for people who want to become more accurate to track their performance

|

||||

|

||||

When dealing with catastrophes, wars, discoveries, and generally with events that challenge humanity's ability to figure out what is going on, having these mechanisms in place would help humanity make better decisions about who to listen to: to listen not to who is loudest but to who is most right.

|

||||

|

||||

## Conclusion

|

||||

|

||||

X can do this. It would help with its goal of outcompeting other sources of information, and it would do this fair and square by improving humanity's collective ability to get at the truth. I don't know what other challenges and plans Musk has in store for X, but I would strongly consider adding this functionality to it.

|

||||

|

||||

<p>

|

||||

<section id='isso-thread'>

|

||||

<noscript>javascript needs to be activated to view comments.</noscript>

|

||||

</section>

|

||||

</p>

|

||||

|

|

@ -1,31 +1,39 @@

|

|||

## In 2023...

|

||||

|

||||

- [Forecasting Newsletter for November and December 2022](https://nunosempere.com/2023/01/07/forecasting-newsletter-november-december-2022)

|

||||

- [Can GPT-3 produce new ideas? Partially automating Robin Hanson and others](https://nunosempere.com/2023/01/11/can-gpt-produce-ideas)

|

||||

- [Prevalence of belief in "human biodiversity" amongst self-reported EA respondents in the 2020 SlateStarCodex Survey](https://nunosempere.com/2023/01/16/hbd-ea)

|

||||

- [Interim Update on QURI's Work on EA Cause Area Candidates](https://nunosempere.com/2023/01/19/interim-update-cause-candidates)

|

||||

- [There will always be a Voigt-Kampff test](https://nunosempere.com/2023/01/21/there-will-always-be-a-voigt-kampff-test)

|

||||

- [My highly personal skepticism braindump on existential risk from artificial intelligence.](https://nunosempere.com/2023/01/23/my-highly-personal-skepticism-braindump-on-existential-risk)

|

||||

- [An in-progress experiment to test how Laplace’s rule of succession performs in practice.](https://nunosempere.com/2023/01/30/an-in-progress-experiment-to-test-how-laplace-s-rule-of)

|

||||

- [Effective Altruism No Longer an Expanding Empire.](https://nunosempere.com/2023/01/30/ea-no-longer-expanding-empire)

|

||||

- [no matter where you stand](https://nunosempere.com/2023/02/03/no-matter-where-you-stand)

|

||||

- [Just-in-time Bayesianism](https://nunosempere.com/2023/02/04/just-in-time-bayesianism)

|

||||

- [Impact markets as a mechanism for not loosing your edge](https://nunosempere.com/2023/02/07/impact-markets-sharpen-your-edge)

|

||||

- [Straightforwardly eliciting probabilities from GPT-3](https://nunosempere.com/2023/02/09/straightforwardly-eliciting-probabilities-from-gpt-3)

|

||||

- [Inflation-proof assets](https://nunosempere.com/2023/02/11/inflation-proof-assets)

|

||||

- [A Bayesian Adjustment to Rethink Priorities' Welfare Range Estimates](https://nunosempere.com/2023/02/19/bayesian-adjustment-to-rethink-priorities-welfare-range-estimates)

|

||||

- [A computable version of Solomonoff induction](https://nunosempere.com/2023/03/01/computable-solomonoff)

|

||||

- [Use of “I'd bet” on the EA Forum is mostly metaphorical](https://nunosempere.com/2023/03/02/metaphorical-bets)

|

||||

- [Winners of the Squiggle Experimentation and 80,000 Hours Quantification Challenges](https://nunosempere.com/2023/03/08/winners-of-the-squiggle-experimentation-and-80-000-hours)

|

||||

- [What happens in Aaron Sorkin's *The Newsroom*](https://nunosempere.com/2023/03/10/aaron-sorkins-newsroom)

|

||||

- [Estimation for sanity checks](https://nunosempere.com/2023/03/10/estimation-sanity-checks)

|

||||

- [Find a beta distribution that fits your desired confidence interval](https://nunosempere.com/2023/03/15/fit-beta)

|

||||

- [Some estimation work in the horizon](https://nunosempere.com/2023/03/20/estimation-in-the-horizon)

|

||||

- [Soothing software](https://nunosempere.com/2023/03/27/soothing-software)

|

||||

- [What is forecasting?](https://nunosempere.com/2023/04/03/what-is-forecasting)

|

||||

- [Things you should buy, quantified](https://nunosempere.com/2023/04/06/things-you-should-buy-quantified)

|

||||

- [General discussion thread](https://nunosempere.com/2023/04/08/general-discussion-april)

|

||||

- [A Soothing Frontend for the Effective Altruism Forum ](https://nunosempere.com/2023/04/18/forum-frontend)

|

||||

- [A flaw in a simple version of worldview diversification](https://nunosempere.com/2023/04/25/worldview-diversification)

|

||||

- [Review of Epoch's *Scaling transformative autoregressive models*](https://nunosempere.com/2023/04/28/expert-review-epoch-direct-approach)

|

||||

- [Updating in the face of anthropic effects is possible](https://nunosempere.com/2023/05/11/updating-under-anthropic-effects)

|

||||

- [Forecasting Newsletter for November and December 2022](https://nunosempere.com/blog/2023/01/07/forecasting-newsletter-november-december-2022)

|

||||

- [Can GPT-3 produce new ideas? Partially automating Robin Hanson and others](https://nunosempere.com/blog/2023/01/11/can-gpt-produce-ideas)

|

||||

- [Prevalence of belief in "human biodiversity" amongst self-reported EA respondents in the 2020 SlateStarCodex Survey](https://nunosempere.com/blog/2023/01/16/hbd-ea)

|

||||

- [Interim Update on QURI's Work on EA Cause Area Candidates](https://nunosempere.com/blog/2023/01/19/interim-update-cause-candidates)

|

||||

- [There will always be a Voigt-Kampff test](https://nunosempere.com/blog/2023/01/21/there-will-always-be-a-voigt-kampff-test)

|

||||

- [My highly personal skepticism braindump on existential risk from artificial intelligence.](https://nunosempere.com/blog/2023/01/23/my-highly-personal-skepticism-braindump-on-existential-risk)

|

||||

- [An in-progress experiment to test how Laplace’s rule of succession performs in practice.](https://nunosempere.com/blog/2023/01/30/an-in-progress-experiment-to-test-how-laplace-s-rule-of)

|

||||

- [Effective Altruism No Longer an Expanding Empire.](https://nunosempere.com/blog/2023/01/30/ea-no-longer-expanding-empire)

|

||||

- [no matter where you stand](https://nunosempere.com/blog/2023/02/03/no-matter-where-you-stand)

|

||||

- [Just-in-time Bayesianism](https://nunosempere.com/blog/2023/02/04/just-in-time-bayesianism)

|

||||

- [Impact markets as a mechanism for not loosing your edge](https://nunosempere.com/blog/2023/02/07/impact-markets-sharpen-your-edge)

|

||||

- [Straightforwardly eliciting probabilities from GPT-3](https://nunosempere.com/blog/2023/02/09/straightforwardly-eliciting-probabilities-from-gpt-3)

|

||||

- [Inflation-proof assets](https://nunosempere.com/blog/2023/02/11/inflation-proof-assets)

|

||||

- [A Bayesian Adjustment to Rethink Priorities' Welfare Range Estimates](https://nunosempere.com/blog/2023/02/19/bayesian-adjustment-to-rethink-priorities-welfare-range-estimates)

|

||||

- [A computable version of Solomonoff induction](https://nunosempere.com/blog/2023/03/01/computable-solomonoff)

|

||||

- [Use of “I'd bet” on the EA Forum is mostly metaphorical](https://nunosempere.com/blog/2023/03/02/metaphorical-bets)

|

||||

- [Winners of the Squiggle Experimentation and 80,000 Hours Quantification Challenges](https://nunosempere.com/blog/2023/03/08/winners-of-the-squiggle-experimentation-and-80-000-hours)

|

||||

- [What happens in Aaron Sorkin's *The Newsroom*](https://nunosempere.com/blog/2023/03/10/aaron-sorkins-newsroom)

|

||||

- [Estimation for sanity checks](https://nunosempere.com/blog/2023/03/10/estimation-sanity-checks)

|

||||

- [Find a beta distribution that fits your desired confidence interval](https://nunosempere.com/blog/2023/03/15/fit-beta)

|

||||

- [Some estimation work in the horizon](https://nunosempere.com/blog/2023/03/20/estimation-in-the-horizon)

|

||||

- [Soothing software](https://nunosempere.com/blog/2023/03/27/soothing-software)

|

||||

- [What is forecasting?](https://nunosempere.com/blog/2023/04/03/what-is-forecasting)

|

||||

- [Things you should buy, quantified](https://nunosempere.com/blog/2023/04/06/things-you-should-buy-quantified)

|

||||

- [General discussion thread](https://nunosempere.com/blog/2023/04/08/general-discussion-april)

|

||||

- [A Soothing Frontend for the Effective Altruism Forum ](https://nunosempere.com/blog/2023/04/18/forum-frontend)

|

||||

- [A flaw in a simple version of worldview diversification](https://nunosempere.com/blog/2023/04/25/worldview-diversification)

|

||||

- [Review of Epoch's *Scaling transformative autoregressive models*](https://nunosempere.com/blog/2023/04/28/expert-review-epoch-direct-approach)

|

||||

- [Updating in the face of anthropic effects is possible](https://nunosempere.com/blog/2023/05/11/updating-under-anthropic-effects)

|

||||

- [Relative values for animal suffering and ACE Top Charities](https://nunosempere.com/blog/2023/05/29/relative-value-animals)

|

||||

- [People's choices determine a partial ordering over people's desirability](https://nunosempere.com/blog/2023/06/17/ordering-romance)

|

||||

- [Betting and consent](https://nunosempere.com/blog/2023/06/26/betting-consent)

|

||||

- [Some melancholy about the value of my work depending on decisions by others beyond my control](https://nunosempere.com/blog/2023/07/13/melancholy)

|

||||

- [Why are we not harder, better, faster, stronger?](https://nunosempere.com/blog/2023/07/19/better-harder-faster-stronger)

|

||||

- [squiggle.c](https://nunosempere.com/blog/2023/08/01/squiggle.c)

|

||||

- [Webpages I am making available to my corner of the internet](https://nunosempere.com/blog/2023/08/14/software-i-am-hosting)

|

||||

- [Incorporate keeping track of accuracy into X (previously Twitter)](https://nunosempere.com/blog/2023/08/19/keep-track-of-accuracy-on-twitter)

|

||||

|

|

|

|||

|

|

@ -7,7 +7,7 @@ for dir in */*/*

|

|||

do

|

||||

index_path="$(pwd)/$dir/index.md"

|

||||

title="$(cat $index_path | head -n 1)"

|

||||

url="https://nunosempere.com/$year/$dir"

|

||||

url="https://nunosempere.com/blog/$year/$dir"

|

||||

# echo $dir

|

||||

# echo $index_path

|

||||

# echo $title

|

||||

|

|

|

|||
