Compare commits

1 commit: 159a6e5edc

@ -1,89 +0,0 @@

# On an RCT for ESPR.

## Introduction

> There is a certain valuable way of thinking, which is not yet taught in schools, in this present day. This certain way of thinking is not taught systematically at all. It is just absorbed by people who grow up reading books like Surely You’re Joking, Mr. Feynman or who have an unusually great teacher in high school.
>
> Most famously, this certain way of thinking has to do with science, and with the experimental method. The part of science where you go out and look at the universe instead of just making things up. The part where you say “Oops” and give up on a bad theory when the experiments don’t support it.
>
> But this certain way of thinking extends beyond that. It is deeper and more universal than a pair of goggles you put on when you enter a laboratory and take off when you leave. It applies to daily life, though this part is subtler and more difficult. But if you can’t say “Oops” and give up when it looks like something isn’t working, you have no choice but to keep shooting yourself in the foot. You have to keep reloading the shotgun and you have to keep pulling the trigger. You know people like this. And somewhere, someplace in your life you’d rather not think about, you are people like this. It would be nice if there was a certain way of thinking that could help us stop doing that.

\- Eliezer Yudkowsky, https://www.lesswrong.com/rationality/preface

## The evidence on CFAR's workshops.

The evidence for and against CFAR in general is of interest here, because I take it to be strongly correlated with the evidence on ESPR. For example, if reading programs in India show that grouping students by initial level improves their learning outcomes, then you'd expect similar processes to be at play in Kenya. Thus, if the evidence on CFAR were robust, we could afford to be less rigorous when it comes to ESPR.

I've mainly studied [CFAR's 2015 Longitudinal Study](http://www.rationality.org/studies/2015-longitudinal-study) together with the more recent [Case Studies](http://rationality.org/studies/2016-case-studies) and the [2017 CFAR Impact report](http://www.rationality.org/resources/updates/2017/cfar-2017-impact-report). Here, I will make some comments about them, but will not review their findings.


The first study notes that a control group would be difficult to implement, since it would require finding people who would like to come to the program and forbidding them from doing so. The study tries to compensate for the lack of a control group by being statistically clever. It seems to be about as rigorous as you can get without an RCT.

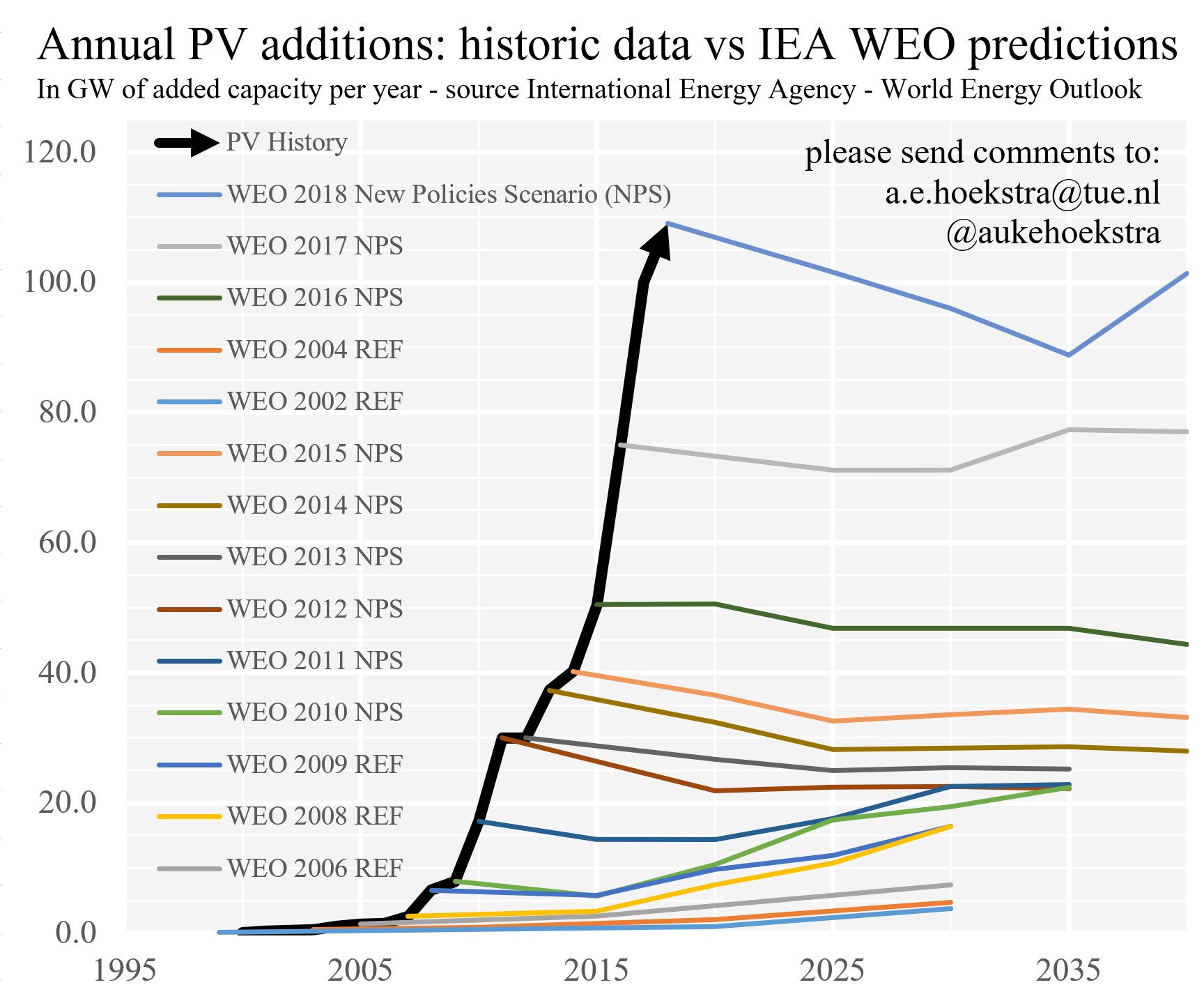

But I feel like that is only partially sufficient. The magnitude of the effect found could be wildly overestimated; MIT's Abdul Latif Jameel Poverty Action Lab provides the following slides [1]:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

I find them scary; depending on the method used to test your effect, you can get an effect size that is 4-5 times as great as the effect you find with an RCT, or about as great in the other direction. The effects the CFAR study finds, for example the one most prominently displayed on CFAR's webpage, an increase in life satisfaction of 0.17 standard deviations (i.e., going from the 50th to roughly the 56.75th percentile), are small enough for me to worry about such inconveniences.
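
To make the conversion in that parenthesis concrete: if we assume the outcome is roughly normally distributed, a shift of 0.17 standard deviations moves someone at the median to about the 56.75th percentile. The snippet below is only a sketch of that arithmetic; the function name `percentile_after_shift` and the normality assumption are mine, not CFAR's.

```python
from statistics import NormalDist


def percentile_after_shift(effect_size_sd: float, start_percentile: float = 50.0) -> float:
    """Percentile reached after shifting by `effect_size_sd` standard deviations.

    Assumes (my assumption) that the outcome follows a normal distribution.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    start_z = std_normal.inv_cdf(start_percentile / 100.0)
    return 100.0 * std_normal.cdf(start_z + effect_size_sd)


# The 0.17 standard deviation increase in life satisfaction reported by CFAR.
print(round(percentile_after_shift(0.17), 2))
```

The same function shows how modest the effect is in relative terms: a person starting at the median still ends up below roughly 43% of the population.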


Thus, I feel that an RCT could be delayed on the strength of the evidence that CFAR currently has, including its logical model (see below), but not indefinitely. In particular, if CFAR had plans for more ambitious expansion, it would be a good idea to run an RCT beforehand. If MIT's JPAL didn't specialize in poverty interventions, I would suggest teaming up with them, and it seems like a good idea to try anyway. JPAL would provide strategies like the following: we can randomly admit people for either this year or the next, and take as the control the group which has been left waiting. It is not clear to me why this hasn't been done yet.
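
The waitlist strategy described above can be sketched in a few lines. This is a minimal illustration under my own assumptions (applicants identified by hypothetical string IDs, a fixed number of places this year, a fixed seed so the draw can be audited), not a description of how JPAL or CFAR would actually run it.

```python
import random


def waitlist_randomize(applicant_ids, n_admit_now, seed=2018):
    """Randomly split accepted applicants into this year's cohort and a waitlist.

    The waitlisted group is admitted next year and serves as the control
    group in the meantime. `seed` is a hypothetical value, fixed only so
    that the assignment is reproducible.
    """
    if not 0 <= n_admit_now <= len(applicant_ids):
        raise ValueError("n_admit_now must be between 0 and the number of applicants")
    rng = random.Random(seed)
    shuffled = list(applicant_ids)
    rng.shuffle(shuffled)
    return {
        "treatment": sorted(shuffled[:n_admit_now]),
        "waitlist_control": sorted(shuffled[n_admit_now:]),
    }


# Hypothetical example: 60 accepted applicants, 30 places this year.
applicants = [f"applicant_{i:02d}" for i in range(60)]
groups = waitlist_randomize(applicants, n_admit_now=30)
print(len(groups["treatment"]), len(groups["waitlist_control"]))
```

Because assignment depends only on the shuffle, both groups are drawn from the same pool of people who wanted to attend, which is what the self-selected comparison groups lack.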


With regard to the second and third documents, I feel that they provide powerful intuitions for why CFAR's logical model is not totally bullshit. The model would be something like: CFAR students are taught rationality techniques + have an environment in which they can question their current decisions and consider potentially better choices = they go on to do more good in the world, for example by switching careers. From the Case Studies mentioned above:

> Eric (Bruylant) described the mindset of people at CFAR as “the exact opposite of learned helplessness”, and found that experiencing more of this mindset, in combination with an increased ability to see what was going on with his mind, was particularly helpful for making this shift.


Yet William MacAskill's book, *Doing Good Better*, is full of examples of NGOs with great-sounding premises, e.g., Roundabout Water Solutions, which were woefully ineffective. Note that Arbital, one of CFAR's success stories, has now failed. Additionally, when reading CFAR's own [Rationality Checklist](http://www.rationality.org/resources/rationality-checklist), I notice that acquiring the mental movements mentioned seems more like a long-term project and less like a skill acquirable in 4 days. This is something which CFAR itself also underscores.

Furthermore, asking alumni to estimate the impact does not seem at all like a good way to estimate it, particularly when these people are sympathetic to CFAR. To get a better idea of why, take the outside view and substitute the Center for Non Violent Communication (CNVC) for CFAR.

[1]: Obtained from MIT's course *Evaluating Social Programs* (Week 3), accessible at https://courses.edx.org/courses/course-v1:MITx+JPAL101x+2T2018/course/.

## Outside view: The evidence on Non Violent Communication (NVC).

The [Center for NonViolent Communication](https://www.cnvc.org/about-us/projects/nvc-research) provides a list of all the research about NVC known to them, of which Juncadella \([2016](https://www.cnvc.org/sites/default/files/NVC_Research_Files/Carme_Mampel_Juncadella.pdf)\) provides an overview up to 2013, after which not much else has been undertaken. From this review: *"Eleven of the 13 studies used quantitative desings. Seven used a control group and 4 a pre-post testing comparison. Of the 7 studies that used a control group, none used a random assignation of participants. In five, the treatment and control were assigned by researcher action and criteria, and in two, the assignment protocol is not reported"*.


The main problem with the research is that it is somewhat chaotic: although Steckal (1994) provides a measuring instrument whose consistency seems to have been validated, every researcher seems to use their own instruments and investigate a slightly different question, i.e., for different demographics, in different settings, with different workshop lengths. All in all, there seems to be a positive effect, but its magnitude is very uncertain.

NVC is also supported by testimonial evidence that is both extremely copious and extremely effusive, to be found in Marshall Rosenberg's book *Non Violent Communication: A Language of Life*, and on the CNVC webpage. Additionally, the logical model also appears consistent and robust: by providing a way to connect with our emotions and needs, and those of others, NVC workshops provide participants with the skills necessary to relate to others, reduce tension, etc. At any point

Given the above, what probability do I assign to NVC being full of bullshit, i.e., that the \~$3,000 courses it offers are only more expensive, not significantly more effective, than the $15 book? Actually quite high. NVC seems to have a certain disdain for practical solutions: for example, in Q4 of the measure developed by Steckal, "When I listen to another in a caring way, I like to analyze or interpret their problems", an affirmative answer is scored negatively.

Sense of community. Prediction Spain. Scrap whole section?

## ESPR as distinct from CFAR.

It must be noted that ESPR gets little love from the main organization, being mainly run by volunteers, with some instructors coming in to give classes. Eventually, it might make sense to institute ESPR as a separate organization with a focus on Europe instead of as an American side project.

## ESPR's Logical model.

I think that the logical model underpinning ESPR is fundamentally solid, i.e., as solid as CFAR's, which I take to be pretty solid. In the words of a student who came back this year as a Junior Counselor:

> ESPR [teaches] smart people not to make stupid mistakes. Examples: betting, prediction markets decrease overconfidence. Units of exchange class decreases likelihood of spending time, money, other currency in counterproductive ways. The whole asking for examples thing prevents people from hiding behind abstract terms and to pretend to understand something when they don't. Some of this is learned in classes. A lot of good techniques from just interacting with people at espr.
>
> I've had conversations with otherwise really smart people and thought “you wouldn't be stuck with those beliefs if you'd gone though two weeks of espr”
>
> ESPR also increases self-awareness. A lot of espr classes / techniques / culture involves noticing things that happen in your head. This is good for avoiding stupid mistakes and also for getting better at accomplishing things.
>
> It is nice to be surrounded by very smart, ambitious people. This might be less relevant for people who do competitions like IMO or go to very selective universities. Personally, it is a fucking awesome and rare experience every time I meet someone really smart with a bearable personality in the real world. Being around lots of those people at espr was awesome. Espr might have made a lot of participants consider options they wouldn't seriously have before talking to the instructors like founding a startup, working on ai alignment, everything that galit talked about etc
>
> espr also increased positive impact participants will have on the world in the future by introducing them to effective altruism ideas. I think last year’s batch would have been affected more by this because I remember there being more on x-risk and prioritizing causes and stuff [1].
>
> I spent 15 mins
>
> =)

Additionally, ESPR gives some of its alumni the opportunity to come back as Junior Counselors, who take on a position of some responsibility and keep improving their own rationality skills.

[1]. This year, being in Edinburgh, we didn't bring in an FHI person to give a talk. We did have an AI risk panel, and EA/x-risk were an important (~10%) focus of conversations. However, I will make a note to bring someone from the FHI next year. We also continued grappling with the boundaries between presenting an important problem and indoctrinating and mindfucking impressionable young persons.

## Perverse incentives

As with CFAR's case studies, I think that the alumni profiles in the following section provide useful intuitions. However, while perhaps narratively compelling, they come with no control group, which is supremely shitty. **These profiles may not allow us to falsify any hypothesis**, i.e., to meaningfully change our priors, because these students come from a pool of incredibly bright applicants. The evidence is weak enough that, as things stand, I would feel uncomfortable saying that ESPR should be scaled up.

To the extent that Open Philanthropy prefers these and other weak forms of evidence *now*, rather than stronger evidence two to three years later, Open Philanthropy might be giving ESPR perverse incentives. Note that with 20-30 students per year, even after we start an RCT, a number of years must pass before we can amass meaningful statistical power (see the power calculations). On the other hand, taking a process of iterated improvement as an admission of failure would also be pretty shitty.

The questions that designing an RCT poses are hard, but the bigger problem is that there's an incentive not to ask them at all. But that would be against CFAR's ethos, as outlined in the introduction.

## Alternatives to ESPR: The cheapest option.

One question which interests me is: what is the cheapest version of the program which is still cost-effective? What happens if you just record the classes, send them to bright people, and answer their questions? What if you set up a course on edX? Interventions based at universities and high schools are likely to be much cheaper, given that neither room and board, nor flights, nor classrooms would have to be paid for. Is there a low-cost, scalable approach?

I'm told that some of the CFAR instructors have strong intuitions that in-person teaching is much more effective, based on their own experience and perhaps also on a small 2012 RCT, which is either unpublished or unfindable.

Still, I want to test this assumption, because, almost by definition, to do so would be pretty cheap. As a plus, we can take the population who takes the cheaper course to be a second control group.


@ -1,180 +0,0 @@

# Power calculations

Using R, we will do some power calculations.

- Necessary library: pwr, which loads with library(pwr)
- Necessary function: pwr.t2n.test
- See: https://www.statmethods.net/stats/power.html

Optimistic: We reach everyone.

Pessimistic: We reach 66% of treatment and control group.

## Year 1, pessimistic projections

With n_treatment = 20, n_control = 20, sig.level = 0.05, power = 0.9, minimal detectable effect in standard deviations (d) = ?

t test power calculation

- n1 = 20
- n2 = 20
- d = 1.051997
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

## Year 1, optimistic projections

With n_treatment = 30, n_control = 60, sig.level = 0.05, power = 0.9, minimal detectable effect = ?

t test power calculation

- n1 = 30
- n2 = 60
- d = 0.7328756
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

With n = ?, sig.level = 0.05, power = 0.9, minimal detectable effect = 0.5

Two-sample t test power calculation

- n = 85.03128
- d = 0.5
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

NOTE: n is number in *each* group

## Year 2, pessimistic projections

With n_treatment = 40, n_control = 40, sig.level = 0.05, power = 0.9, minimal detectable effect = ?

t test power calculation

- n1 = 40
- n2 = 40
- d = 0.7339255
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

## Year 2, optimistic projections

With n_treatment = 60, n_control = 120, sig.level = 0.05, power = 0.9, minimal detectable effect = ?

t test power calculation

- n1 = 60
- n2 = 120
- d = 0.5153056
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

## Year 3, pessimistic projections

With n_treatment = 60, n_control = 60, sig.level = 0.05, power = 0.9, minimal detectable effect = ?

t test power calculation

- n1 = 60
- n2 = 60
- d = 0.5967207
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

## Year 3, optimistic projections

With n_treatment = 90, n_control = 180, sig.level = 0.05, power = 0.9, minimal detectable effect = ?

t test power calculation

- n1 = 90
- n2 = 180
- d = 0.4200132
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

## Year 4, pessimistic projections

With n_treatment = 80, n_control = 80, sig.level = 0.05, power = 0.9, minimal detectable effect = ?

t test power calculation

- n1 = 80
- n2 = 80
- d = 0.5156619
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

## Year 4, optimistic projections

With n_treatment = 120, n_control = 240, sig.level = 0.05, power = 0.9, minimal detectable effect = ?

t test power calculation

- n1 = 120
- n2 = 240
- d = 0.3633959
- sig.level = 0.05
- power = 0.9
- alternative = two.sided
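
As a rough cross-check of the eight yearly projections, the minimal detectable effect can be approximated without R. The sketch below is not the pwr computation: it replaces the t distribution with a normal approximation, d ≈ (z_{1-α/2} + z_{power}) · sqrt(1/n1 + 1/n2), so its values come out slightly below the figures reported for each year. The function name `minimal_detectable_effect` is mine.

```python
from math import sqrt
from statistics import NormalDist


def minimal_detectable_effect(n1, n2, sig_level=0.05, power=0.9):
    """Normal-approximation MDE (in standard deviations) for a two-sided,
    two-sample comparison with group sizes n1 and n2.

    This is an approximation to, not a reimplementation of, pwr.t2n.test.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - sig_level / 2)
    z_power = z.inv_cdf(power)
    return (z_alpha + z_power) * sqrt(1 / n1 + 1 / n2)


# Group sizes taken from the yearly projections (treatment, control).
scenarios = {
    "Year 1, pessimistic": (20, 20),
    "Year 1, optimistic": (30, 60),
    "Year 2, pessimistic": (40, 40),
    "Year 2, optimistic": (60, 120),
    "Year 3, pessimistic": (60, 60),
    "Year 3, optimistic": (90, 180),
    "Year 4, pessimistic": (80, 80),
    "Year 4, optimistic": (120, 240),
}

for label, (n1, n2) in scenarios.items():
    print(f"{label}: d is approximately {minimal_detectable_effect(n1, n2):.3f}")
```

The approximation is worst for the smallest groups (about 1.03 against the reported 1.05 for 20 versus 20) and nearly exact for the largest.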


## Population necessary to detect an effect size of 0.2 with significance level = 0.05 and power = 0.9

Up to this point, the free variable was d, the minimal detectable effect.

With n = ?, sig.level = 0.05, power = 0.9, minimal detectable effect = 0.2

Two-sample t test power calculation

- n = 526.3332
- d = 0.2
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

NOTE: n is number in *each* group

Here, the free variable was n, the population of the treatment group, so n = population of the treatment group = population of the control group necessary to detect an effect of 0.2.
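
When n is the free variable, the same normal approximation can be inverted: n per group ≈ 2 · ((z_{1-α/2} + z_{power}) / d)². The sketch below is a cross-check under that approximation, not the pwr call itself, so it lands about one participant per group below the t-based figures in these sections. The function name `n_per_group` is mine.

```python
from statistics import NormalDist


def n_per_group(d, sig_level=0.05, power=0.9):
    """Approximate size of EACH group needed to detect effect size d
    (in standard deviations) with a two-sided, equal-sized two-sample test.

    Uses a normal approximation, so it slightly underestimates the
    t-test based value.
    """
    z = NormalDist()
    z_sum = z.inv_cdf(1 - sig_level / 2) + z.inv_cdf(power)
    return 2 * (z_sum / d) ** 2


for d, alpha in [(0.2, 0.05), (0.5, 0.05), (0.2, 0.10), (0.5, 0.10)]:
    print(f"d = {d}, sig.level = {alpha}: n per group is approximately {n_per_group(d, alpha):.1f}")
```

Because n scales with 1/d², halving the effect size one wants to detect quadruples the required sample, which is why 0.2 is so much harder to reach than 0.5.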


## Population necessary to detect an effect size of 0.5 with significance level = 0.05 and power = 0.9

Two-sample t test power calculation

- n = 85.03128
- d = 0.5
- sig.level = 0.05
- power = 0.9
- alternative = two.sided

NOTE: n is number in *each* group

## Population necessary to detect an effect size of 0.2 with significance level = 0.10 and power = 0.9

Two-sample t test power calculation

- n = 428.8664
- d = 0.2
- sig.level = 0.1
- power = 0.9
- alternative = two.sided

NOTE: n is number in *each* group

## Population necessary to detect an effect size of 0.5 with significance level = 0.10 and power = 0.9

Two-sample t test power calculation

- n = 69.19719
- d = 0.5
- sig.level = 0.1
- power = 0.9
- alternative = two.sided

NOTE: n is number in *each* group

## Conclusions.

Even after 4 years, under the most optimistic population projections (i.e., every participant answers our surveys every year, and 60 students who didn't get selected also do), we wouldn't have enough power to detect an effect size of 0.2 standard deviations with significance level = 0.05. However, it seems feasible to detect the kinds of effects which would justify the upwards of $150,000 / year costs of ESPR within 3 years. The minimum effect which justifies the costs of ESPR should be determined beforehand, as should the axes along which we measure. I would also suggest expanding the RCT to SPARC once its feasibility has been tested at ESPR.
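
The timescales in this conclusion can be made explicit with a small calculation. The sketch assumes, as the yearly projections do, that the same number of treated and control respondents is added every year (30 and 60 in the optimistic case, 20 and 20 in the pessimistic one), and it uses a normal approximation rather than the pwr functions, so the year counts are indicative only. The function name `years_until_detectable` is mine.

```python
from math import sqrt
from statistics import NormalDist


def years_until_detectable(target_d, treated_per_year, control_per_year,
                           sig_level=0.05, power=0.9, max_years=100):
    """First year in which the accumulated sample can detect `target_d`.

    Returns None if that does not happen within `max_years`.
    Normal approximation; cohort sizes are assumed constant over time.
    """
    z = NormalDist()
    z_sum = z.inv_cdf(1 - sig_level / 2) + z.inv_cdf(power)
    for years in range(1, max_years + 1):
        n1 = treated_per_year * years
        n2 = control_per_year * years
        if z_sum * sqrt(1 / n1 + 1 / n2) <= target_d:
            return years
    return None


for label, (treated, control) in {"optimistic": (30, 60), "pessimistic": (20, 20)}.items():
    for target in (0.5, 0.2):
        years = years_until_detectable(target, treated, control)
        print(f"{label}, d = {target}: detectable after {years} years")
```

Under these assumptions an effect of 0.5 standard deviations becomes detectable in year 3 of the optimistic scenario, in line with the text, while an effect of 0.2 would take well over a decade.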


@ -1,212 +0,0 @@

# Measurements

Note: This is a work in progress. The end result would be to create several surveys such as [this](https://docs.google.com/forms/d/1RRKImKZKePSvdWu6aj2zOngSa9PJMfcSH9eCxy3XdfQ/viewform?edit_requested=true), to be taken before the camp, x months after the camp, and 2 years after the camp.

## Difficulties

The changes which ESPR could induce in the students are, in some sense, fuzzy and soft. There is some tension between measuring what is easiest to measure and measuring what we're actually interested in, and we firmly choose the latter. For example, when measuring openness, we don't care about questions such as:

I see Myself as Someone Who...

- Is original, comes up with new ideas
- Is curious about many different things
- Is ingenious, a deep thinker
- Has an active imagination
- Is inventive
- Values artistic, aesthetic experiences
- Prefers work that is routine
- Likes to reflect, play with ideas
- Has few artistic interests
- Is sophisticated in art, music, or literature

From John, O. P., & Srivastava, S. (1999). The Big-Five trait taxonomy: History, measurement, and theoretical perspectives. In L. A. Pervin & O. P. John (Eds.), *Handbook of personality: Theory and research* (Vol. 2, pp. 102–138). New York: Guilford Press.


Instead, we want to ask things such as:

- When was the last time you tried out something new?
- How often do you try something new?
- How much have you explored vs specialized in the last year?
- When was the last time you did something which you thought had a <=5% chance of succeeding?

Note that the 2015 CFAR Longitudinal study takes a different approach:

> "We relied heavily on existing measures which have been validated and used by psychology researchers, especially in the areas of well-being and personality. These measures typically are not a perfect match for what we care about, but we expected them to be sufficiently correlated with what we care about for them to be worth using"

For example, they used the questions written above, but these would be insufficient to capture the effects of CoZE, one of the highest-impact activities in a CFAR workshop.

## Things we want to measure.

**- and ways to measure them.**

Recommend a song which lasts roughly as long as it should take to complete the survey.

Every time you lie or exaggerate, a kitten dies. By answering this survey, you help make the world a better place.

If you find yourself fatigued by the length of the survey feel free to take a break and come back. It is also preferable to just go to the end and turn in what you have. Some questions are explicitly marked 'bonus' or 'optional' meaning they are especially skippable.

1. Demographic information:
    - Ask for consent for aggregation / doing a study on this. ✓
    - Can we include your survey data in a public dataset? ✓
    - Ask for the email. Followup survey. ✓
    - Ikea: birthdate: dd.mm.yyyy + initials + first letter of the country you were born in.
    - Age / Sex assigned at birth / Gender / Country (if many, the one you most identify with) / Ethnic group (most identify) / sexual orientation ✓

1. Choices influenced by ESPR.
    - Average prestigiousness of the universities to which they apply / to which they get in.
    - % people who are not going to university.
    - Do you feel like you've made a life-changing choice in the last year?
        - If you have: Write a brief tweet.
    - Do you feel like your life has significantly changed in the last year?
        - If it has: Write a brief tweet.
    - Do you feel like the course of your life has significantly changed in the last year?
        - If it has: Write a brief tweet.

1. Self-Confidence / Modern Survival Skills.
    - I think I could do pretty well in a Zombie Apocalypse. ✓
    - It wouldn't bother me excessively if I woke up in a random city in the world with nothing but my clothes on. ✓

1. Decisiveness.
    - To what extent do you agree with the statement: I am a decisive person.
    - To what extent would your friends agree with the statement: You are a decisive person.
    - When was the last time you did something which you thought had a <=5% chance of succeeding? Why did you attempt it? Also, describe it. ✓
    - To what extent do you struggle with doing things you've decided to do? ✓

1. Openness to new experiences?
    - When was the last time you tried out something new? ✓
    - How often do you try something new?

1. People, connections.
    - What is the approximate number of people who you interacted with in the past week? ✓
    - What is the approximate number of people you'd be willing to confide in about something personal? ✓
    - What is the approximate number of people who would let you crash at their place if you needed somewhere to stay? ✓

    Their numerical responses were then capped at 300, log transformed, and averaged into a single measure of social support.

1. Attitudes towards EA.

    There are no right or wrong answers. Our philosophical positions are very diverse, and even include Nietzschean philosophy.

    - Do you know what Effective Altruism is?
        - Yes / No but I've heard of it / No. ✓
    - Do you self-identify as an Effective Altruist? ✓
    - Has Effective Altruism caused you to make donations you otherwise wouldn't? ✓
    - Do you expect Effective Altruism to cause you to make donations in the future which you otherwise won't? ✓
        - If that is the case, what % of your earnings do you expect to donate to EA charities (like Against Malaria Foundation, Malaria Consortium, Schistosomiasis Control Initiative, Evidence Action's Deworm the World Initiative, GiveWell, 80,000 Hours, etc.) over your life?
        - Ask this privately
    - What's your overall opinion of Effective Altruism? ✓
    - If you had to distribute 1 billion dollars to different charities, on the basis of which criteria would you do it?

1. Attitudes towards existential risk.
    - Are you familiar with the term "existential risk"?
    - Without searching the internet, looking at Wikipedia, etc., how would you describe the concept in a short tweet?
    - If you had heard about it, how much of a threat do you think it poses?
    - In percentage points, how likely do you judge it that your career will in some way be related to existential risk? And directly related?

1. Attitudes towards AI Safety
    - Are you familiar with the field of AI Safety?
    - Have you read any papers related to the field?
    - If you knew about it beforehand, how much of a threat do you think it poses?
    - How would you describe the concept in a short tweet? ✓
    - In percentage points, how likely do you judge it that your career will in some way be related to AI Safety? And directly related? ✓
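
The social support measure mentioned under "People, connections" (responses capped at 300, log transformed, and averaged) can be sketched as follows. The source does not say how zeros were handled, so using log(1 + x) is my assumption, as are the function name `social_support_score` and the example numbers.

```python
from math import log
from statistics import mean

RESPONSE_CAP = 300  # cap stated in the text


def social_support_score(counts):
    """Combine the three 'number of people' answers into one score.

    Each answer is capped at RESPONSE_CAP, log transformed, then averaged.
    log(1 + x) is used (an assumption) so that an answer of 0 is defined.
    """
    capped = [min(max(count, 0), RESPONSE_CAP) for count in counts]
    return mean([log(1 + value) for value in capped])


# Hypothetical respondent: 25 people interacted with, 4 confidants,
# 6 people who would offer a place to stay.
print(round(social_support_score([25, 4, 6]), 3))
```

The cap and the log transform both limit the influence of a single very large answer, so a respondent reporting 1,000 acquaintances does not dominate the group average.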

**Software upgrade**

1. Introspective power. Internal Design. Habits.
    - I understand myself ✓
    - I have fiddled with the different parts of myself.
    - I work to change the parts of myself which I don't like. ✓
    - I purposefully create habits. ✓
        - When was the last time you did this? ✓

1. Position towards emotions.
    - Emotions as your allies. ✓
    - To what extent do you agree with the following:
        - Emotions are my allies. ✓
        - Emotions often give me useful information. ✓
        - Emotions often hinder me. ✓
        - I would prefer to feel less. ✓
        - I often ignore my emotions. ✓
        - I am in touch with my emotions. ✓

1. Life optimization
    - I have in place mechanisms for constant, iterated improvement of my life. ✓
        - Write a short tweet about it.
    - Units of exchange: I often explicitly consider the tradeoffs between money, time, prestige, etc., when making decisions.
        - When was the last time you've done that (if ever)?
        - Write a short tweet about it.
    - Think about your current set of skills, your habits, the things you spend your time on, how you interact with other people, the intellectual questions that you find engaging, the goals you’re aiming towards, and the challenges that you’re currently facing going forward. Next, think about how you were one year ago on each of these dimensions. How different are you now from how you were one year ago?
        - Not at all different / Slightly different / Somewhat different / Very different / Extremely different. ✓
        - [optional] In about one tweet, what is one difference that stands out as being particularly large or significant? ✓
    - Can you think of any changes that you’ve made in the past month to your daily routines or habits in order to make things go better? These can be tiny changes (e.g., adjusted the curtains on my bedroom window so that less light comes in while I’m sleeping) or large ones. Spend about 60 seconds recalling as many examples of these kinds of changes as you can and listing them here. (If you want to skip this question, leave it blank. If you spend the 60 seconds and no specific examples come to mind, write "none.") ✓

1. Mental illness.

|

|

||||||

I actually don't care about the "post-ESPR depression".

|

|

||||||

While having a mental illness sucks, there is no right or wrong answer. Some of the best people I know face depression, Asperger's, etc.

|

|

||||||

- Have you been diagnosed with a mental illness?

|

|

||||||

- Do you think you have a mental illness?

|

|

||||||

- If so, which?

|

|

||||||

|

|

||||||

1. Goal clarity

|

|

||||||

With regards to my goals,

|

|

||||||

- I know what my goals are. ✓

|

|

||||||

- I feel that different parts of myself are aligned. ✓

|

|

||||||

- I feel that the different parts of myself are more aligned than 1y ago. ✓

|

|

||||||

- When an internal conflict arises, I have adequate tools to resolve it. ✓

|

|

||||||

|N: I copied the first person from somewhere else.

|

|

||||||

|

|

||||||

1. Communication

|

|

||||||

- I can nonviolently communicate with the people I care about.

|

|

||||||

Too abstract.

|

|

||||||

- When I talk to people, they perceive that I'm speaking in good faith. ✓

|

|

||||||

- I successfully assert my needs to others. ✓

|

|

||||||

- The last time I had a discussion, it was resolved gracefully. ✓

|

|

||||||

- When I debate with people, there is often a satisfying conclusion.

|

|

||||||

|

|

||||||

1. Stupid mistakes.

|

|

||||||

- How often do you make stupid mistakes?

|

|

||||||

- When was the last stupid mistake you made? ✓

|

|

||||||

- Write a short tweet about it.

|

|

||||||

- Did you implement any measures to avoid making that specific stupid mistake in the future? ✓

|

|

||||||

- If so, write a short tweet about it. ✓

|

|

||||||

|

|

||||||

1. Life satisfaction

|

|

||||||

- How satisfied are you with your life as a whole?

|

|

||||||

- To what extent do you agree with the statement: I am winning at life? ✓

|

|

||||||

- Stuckness: I feel like my life is stuck

|

|

||||||

|

|

||||||

1. Effective Approaches to Working on Projects

|

|

||||||

When I decide that I want to do something (like doing a project, developing a new regular practice, or changing some part of my lifestyle), I … ✓

|

|

||||||

- plan out what specific tasks I will need to do to accomplish it.

|

|

||||||

- try to think in advance about what obstacles I might face, and how I can get past them.

|

|

||||||

- seek out information about other people who have attempted similar projects to learn about what they did.

|

|

||||||

- end up getting it done.

|

|

||||||

The four items were averaged into a single measure of effective approaches to projects.

|

|

||||||

|

|

||||||

1. Probabilities / Calibration.

|

|

||||||

- Are you comfortable using probabilities? Do you use them in your daily life / When was the last time you explicitly assigned a probability to something?

|

|

||||||

- To what extent do you agree with the following: Thinking in terms of probabilities is a valuable tool in my skill repertoire.

|

|

||||||

- When was the last time you explicitly assigned a probability to something? Write a short tweet about it.

|

|

||||||

|

|

||||||

- "Calibration" is the practice of knowing how certain you are, even when you're not certain. For example, a bookie who says they're 90% certain of the outcome of each of a hundred horse races, and who is right about ninety out of those hundred horse races - is perfectly calibrated.

|

|

||||||

|

|

||||||

In these questions, you will be asked a question and then asked to give a calibration percent. The percent represents your probability that the answer is right. Suppose the question is "What country is the city of Paris located in?" and you are absolutely sure it is France. In that case, your calibration percent is 100 - you are 100% sure it is France.

|

|

||||||

|

|

||||||

But suppose you think there's a fifty-fifty chance it's either France or Germany. In that case, you might still answer France, but your calibration percent is only 50 - you are only 50% sure it's France.

|

|

||||||

|

|

||||||

Or suppose you have no idea, so you pick a country totally at random. In that case, you might think that if there are about one hundred possible countries, and it could be any of them, there's only about a 1% chance you're right. Therefore, you would put down a calibration percent of 1. Please answer on a scale from 0% (definitely false) to 100% (definitely true).
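
The instructions above describe calibration in words; as a minimal sketch of how the answers could later be scored, assuming each answer is recorded as a pair of stated percent and whether it turned out correct (the function name and data layout are hypothetical, not part of the survey), one could group answers by stated confidence and compare with the observed hit rate:

```python
from collections import defaultdict

def calibration_table(answers):
    """Group answers by stated confidence and compare with the hit rate.

    `answers` is a list of (stated_percent, was_correct) pairs.
    Returns {stated_percent: (n_answers, observed_percent_correct)}.
    """
    buckets = defaultdict(list)
    for stated_percent, was_correct in answers:
        buckets[stated_percent].append(was_correct)
    return {
        stated: (len(hits), 100 * sum(hits) / len(hits))
        for stated, hits in sorted(buckets.items())
    }

# The bookie from the text: 100 races at 90% confidence, 90 of them right.
bookie = [(90, True)] * 90 + [(90, False)] * 10
print(calibration_table(bookie))  # {90: (100, 90.0)}
```

A respondent is well calibrated to the extent that the observed percent in each bucket is close to the stated percent; with only a handful of questions per respondent, the buckets will be small and noisy.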

|

|

||||||

|

|

||||||

- Are you smiling right now?

|

|

||||||

- After each question: Without checking a source, estimate your subjective probability that the answer you just gave is correct.

|

|

||||||

- Which is heavier, a virus or a prion?

|

|

||||||

- I'm thinking of a number between one and ten, what is it?

|

|

||||||

- What year was the fast food chain "Dairy Queen" founded? (Within five years)

|

|

||||||

- Alexander Hamilton appears on how many distinct denominations of US Currency?

|

|

||||||

- Without counting, how many keys are there on a standard IBM keyboard released after 1986 (within ten)?

|

|

||||||

- Too easy to cheat.

|

|

||||||

- What's the diameter of a standard soccer ball, in cm (within 2)?

|

|

||||||

- How many calories are there in a Reese's peanut butter cup (within 20)?

|

|

||||||

- What is the probability that supernatural events (including God, ghosts, magic, etc.) have occurred since the beginning of the universe?

|

|

||||||

- What is the probability that there is a god, defined as a supernatural intelligent entity who created the universe?

|

|

||||||

- What is the probability that any of humankind's revealed religions is more or less correct?

|

|

||||||

|

|

||||||

## Attribution

|

|

||||||

I took several questions from the 2016 LessWrong Diaspora Survey and the CFAR 2015 longitudinal study.

|

|

||||||

CFAR's rationality checklist

|

|

||||||

http://www.rationality.org/resources/rationality-checklist

|

|

||||||

|

|

@ -1,44 +0,0 @@

|

||||||

# Details of the implementation

|

|

||||||

|

|

||||||

## Talking with the staff about whether an RCT is a good idea.

|

|

||||||

|

|

||||||

Without the support of the staff, an RCT could not go forward. In particular, an RCT will require that we don't accept some promising applicants, i.e., from the 2 most promising applicants, we'd want to have 1 in the control group. If this were a forced decision, it would probably engender great resentment.

|

|

||||||

|

|

||||||

Similarly, though we would prefer to have smaller groups of 20, we wouldn't have enough power, even after 4 years, if we went that route. Instead, we'd want to accept upwards of 32 students (minus 2 who, in expectation, won't get their visa on time). Other study designs, like ranking our applicants from 1 to 40, taking the best 20 and randomizing the last 20 (10 for ESPR, 10 for the control group), would appease the staff, but again wouldn't buy us enough power.
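
To make the power concern concrete, here is a rough sketch using the normal approximation for a two-sided, two-sample comparison of means. The effect size of 0.3 standard deviations and the per-arm sample sizes are illustrative assumptions, not estimates from this document, and a real analysis would depend on the outcome measures actually chosen:

```python
from statistics import NormalDist

def approx_power(n_per_group, effect_size, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test.

    effect_size is the standardized mean difference (Cohen's d),
    an assumed input. Uses the normal approximation and ignores the
    (usually negligible) probability of rejecting in the wrong tail.
    """
    z = NormalDist()
    critical = z.inv_cdf(1 - alpha / 2)
    noncentrality = effect_size * (n_per_group / 2) ** 0.5
    return z.cdf(noncentrality - critical)

# Cumulative per-arm sample after 1..4 years, for 10 vs. 30 people per arm per year.
for per_year in (10, 30):
    for years in (1, 2, 3, 4):
        n = per_year * years
        print(per_year, years, round(approx_power(n, effect_size=0.3), 2))
```

Under this approximation, power grows with the square root of the per-arm sample size, which is why randomizing only 10 people per arm per year accumulates power so slowly.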

|

|

||||||

|

|

||||||

If we want our final alumni pool to be as good as in previous years, we would want to increase our reach and our advertising efforts by, say, ~3x, i.e., to find 60 excellent students in total, 30 for the control and 30 for the treatment group. This would be possible by, f.ex., asking every previous participant to nominate a friend, by announcing the camp to the most prestigious high schools in countries with a rationality community, etc. An SSC post / banner wouldn't hurt. A successful effort in this area seems necessary for the full buy-in of the staff, and might require additional funds.

|

|

||||||

|

|

||||||

## Spillovers.

|

|

||||||

|

|

||||||

If a promising person from the control group tried to apply the next year, we'd have to deny them the chance to come, or else lose the most promising people from the control group, losing validity.

|

|

||||||

|

|

||||||

We also don't want people in the control group to be disheartened because they didn't get in. For this, I suggest dividing our application process into two steps: one in which we select everyone who will be in either group, and then a coin toss which decides who goes to which group.

|

|

||||||

|

|

||||||

If people have heard about ESPR, they might read writings by Kahneman, Bostrom, Yudkowsky, et al. If they aren't accepted, they might fulfill their need for cognition by continuing to read such materials. Thus, what we will measure will be the difference between applicants interested in rationality and applicants interested in rationality who go to ESPR, not between equally talented people with no previous contact. In any case, it would seem necessary to disallow explicit mentoring of applicants. Here, again, the full buy-in of the staff is needed.

|

|

||||||

|

|

||||||

SPARC is another camp which teaches very similar stuff. I have considered doing the RCT both on ESPR and SPARC at the same time, but SPARC's emphasis on math olympiad people makes that a little bit sketchy. However, because they are still very similar interventions, we don't want to have a person in the control group going to SPARC. This might be a sore point.

|

|

||||||

|

|

||||||

## Stratification.

|

|

||||||

|

|

||||||

Suppose that after randomly allocating the students, we found that the treatment group was richer. This would *suck*, because maybe our effect is just them being, f.ex., healthier. In expectation, the two groups are the same, but maybe in practice they turn out not to be.

|

|

||||||

|

|

||||||

An alternative would be to divide the students into rich and poor, and randomly choose in each bucket. This is referred to as stratification, and buys additional power, though I still have to get into the gritty details. I'm still thinking about which variables we want to stratify along, if at all, and further reflection is needed.
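
As a minimal sketch of what stratified assignment could look like, assuming each applicant already has a stratum label such as "rich" or "poor" (the function name, labels, and the rule for odd-sized strata are illustrative choices, not a settled design):

```python
import random
from collections import defaultdict

def stratified_assignment(applicants, stratum_of, seed=None):
    """Randomly split each stratum in half between treatment and control.

    applicants: list of hashable ids.
    stratum_of: function mapping an id to its stratum label.
    In an odd-sized stratum, the extra person goes to a randomly chosen arm.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in applicants:
        strata[stratum_of(person)].append(person)

    assignment = {}
    for members in strata.values():
        rng.shuffle(members)
        half = len(members) // 2
        if len(members) % 2 and rng.random() < 0.5:
            half += 1
        for i, person in enumerate(members):
            assignment[person] = "treatment" if i < half else "control"
    return assignment

# Hypothetical example: applicants 0-3 are "rich", 4-7 are "poor".
people = list(range(8))
groups = stratified_assignment(people, lambda p: "rich" if p < 4 else "poor", seed=0)
print(groups)
```

By construction, each even-sized stratum contributes equally to both arms, so the treatment group cannot end up richer than the control group by chance.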

|

|

||||||

|

|

||||||

Note to self: Paired random assignment might be a problem with respect to attrition (f.ex. no visa on time); JPAL recommends strata of at least 4 people.

|

|

||||||

|

|

||||||

## Measurements

|

|

||||||

|

|

||||||

The section on measurements was written by me, Nuño, alone. The next step would be to ask, f.ex., the teachers of each class to propose their own measurements, and combine them with what we already have. In the case of NVC I have done a small literature review, so this is less vital, but still important.

|

|

||||||

|

|

||||||

## Incentives.

|

|

||||||

The survey takes 15-30 minutes to complete, and while I've tried to make it engaging and propose pauses, I think that an incentive is needed (i.e., the people in the control group might tell us to fuck off).

|

|

||||||

|

|

||||||

I initially thought about donating X USD to the AMF in their name every time they completed a survey, but I realized that this would motivate the most altruistic individuals the most, thus introducing selection effects. Now, I'm leaning towards just giving the survey takers that amount of money.

|

|

||||||

|

|

||||||

As a lower bound, 40 people * 3 years * 2 surveys * 10 USD = 2400 USD, or 800 USD/year; as an upper bound, 60 people * 4 years * 4 surveys * 15 USD = 14400 USD, or 3600 USD/year. I don't feel this is that significant in comparison to the total cost of the camp. More expensive, I think, is the time which I and others would spend working on this for free, i.e., the counterfactual projects we might undertake with that time. I am as yet uncertain of the weight of this factor.
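
The bounds above can be checked with a few lines; this sketch reads "surveys" as surveys per person per year, which is my interpretation of the figures, and the function name is hypothetical:

```python
def incentive_cost(people, years, surveys_per_year, usd_per_survey):
    """Return (total cost in USD, cost per year in USD) of paying survey takers."""
    total = people * years * surveys_per_year * usd_per_survey
    return total, total / years

print(incentive_cost(40, 3, 2, 10))  # (2400, 800.0)   lower bound
print(incentive_cost(60, 4, 4, 15))  # (14400, 3600.0) upper bound
```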

|

|

||||||

|

|

||||||

## Take off and burn.

|

|

||||||

|

|

||||||

To end on a high note, there is a non-negligible probability that in the first year of the RCT we realize we've made a number of grievous mistakes, i.e., it would surprise me if everything went without a hitch the first time. Personally, this only worries me if we don't learn enough to be able to pull it off the next year, which I happen to consider rather unlikely.

|

|

||||||

|

|

||||||

If that risk is unacceptable, we could partner with someone like IDinsight, MIT's J-PAL, etc. The problem is that those organizations specialize in development interventions. It wouldn't hurt to ask, though.

|

|

||||||

|


|

|

@ -1,232 +0,0 @@

|

||||||

# Review of *The Power of Survey Design* and *Improving Survey Questions*

|

|

||||||

|

|

||||||

[Epistemic status: Confident.]

|

|

||||||

|

|

||||||

Simplicio: I have a question.

|

|

||||||

Salviati: Woe be upon me.

|

|

||||||

Simplicio: When people make surveys, how do they make sure that the questions measure what they want to measure?

|

|

||||||

|

|

||||||

## Outline

|

|

||||||

- Introduction.

|

|

||||||

- For the eyes of those who are designing a survey.

|

|

||||||

- People are inconsistent. Some ways in which populations are systematically biased

|

|

||||||

- The Dark Arts!

|

|

||||||

- Legitimacy

|

|

||||||

- Don't bore the answerer

|

|

||||||

- Elite respondents.

|

|

||||||

- Useful categories

|

|

||||||

- Memory

|

|

||||||

- Consistency and ignorance

|

|

||||||

- Subjective vs objective questions

|

|

||||||

- Tactics

|

|

||||||

- Be aware of the biases

|

|

||||||

- Don't confuse the question with the question objective.

|

|

||||||

- An avalanche of advice.

|

|

||||||

- Closing thoughts

|

|

||||||

|

|

||||||

## Introduction

|

|

||||||

|

|

||||||

As part of my research on ESPR's impact, I've read two books on the topic of survey design, namely *The Power of Survey Design* (TPOSD) and *Improving survey questions: Design and Evaluation*.

|

|

||||||

|

|

||||||

They have given me an appreciation of the biases and problems that are likely to pop up when having people complete surveys, and I think this knowledge would be valuable to a variety of people in the EA and rationality communities.

|

|

||||||

|

|

||||||

For example, some people are looking into mental health as an effective cause area. In particular, in Spain Danica Wilbanks is working on trying to estimate the prevalence of mental health issues in the EA community. Something to consider in this case is that people with severe depression might be less likely to answer a survey, because doing so takes effort. So the proportion found in the survey is likely to be an underestimate, unless people with mental health issues are more likely to participate in a survey about the topic.

|

|

||||||

|

|

||||||

I've gotten some enjoyment and extra motivation out of inhabiting the state of mind of an [HPMOR Dark Lord](http://www.hpmor.com) while framing the study of these matters as learning the Dark Arts. May you share this enjoyment with me.

|

|

||||||

|

|

||||||

## For the eyes of those who are designing a survey:

|

|

||||||

|

|

||||||

You might want to read this review for a quick overview, then:

|

|

||||||

|

|

||||||

a) If you don't want to spend too much time: Focus on [this checklist](), [this list of principles](), as well as [this neat summary I found on the internet]().

|

|

||||||

|

|

||||||

b) If you want to spend a moderate amount of time:

|

|

||||||

- Chapter 3 of *The Power of Survey Design* (68 pages) and/or Chapter 4 of *Improving survey questions* (22 pages) for general things to watch out for when writing questions. Chapter 3 of TPOSD is the backbone of the book.

|

|

||||||

- Chapter 5 of *The Power of Survey Design* (40 pages) for how to use the dark arts to have more people answer your questions willingly and happily.

|

|

||||||

|

|

||||||

c) For even more detail:

|

|

||||||

- The introductions, i.e. Chapters 1 and 2 of *The Power of Survey Design* (9 and 22 pages, respectively), and Chapter 1 of *Improving survey questions* (7 pages) if introductions are your thing, or if you want to plan your strategy. In particular, Chapter 2 of TPOSD has a cool Gantt chart.

|

|

||||||

- Chapters 2 and 3 of *Improving survey questions* (38 and 32 pages, respectively) for considerations on gathering factual and subjective data, respectively.

|

|

||||||

- Chapter 5 of *Improving survey questions* (25 pages) for how to evaluate/test your survey before the actual implementation.

|

|

||||||

- Chapter 6 of *Improving survey questions* (12 pages) for kind of obvious advice about trying to find something like hospital records to validate your questionnaire with, or about repeating some important questions in slightly different form and getting really worried if respondents don't answer the same thing.

|

|

||||||

|

|

||||||

[Here]() and [here]() are the indexes for both books. [libgen.io](libgen.io) might be of use to download an illegal copy.

|

|

||||||

|

|

||||||

Both books are clearly dated in some aspects: neither considers online surveys, as self-administered surveys meant, back in the day, mailing surveys to people. The second suggests: "sensitive questions are put on a tape player (such as a *Walkman*) that can be heard only through earphones". However, I think that on the broad principles and considerations, both books remain useful guides.

|

|

||||||

|

|

||||||

## People are inconsistent. Some ways in which populations are systematically biased

|

|

||||||

|

|

||||||

Here is a nonexhaustive collection of curious anecdotes mentioned in the first book:

|

|

||||||

|

|

||||||

- A Latinobarometro poll in 2004 showed that while a clear majority (63 percent) in Latin America would never support a military government, 55 percent would not mind a nondemocratic government if it solved economic problems.

|

|

||||||

|

|

||||||

- When asked about a fictitious “Public Affairs Act”, one-third of respondents volunteered an answer.

|

|

||||||

|

|

||||||

- The choice of numeric scales has an impact on response patterns: Using a scale which goes from -5 to 5 produces a different distribution of answers than using a scale that goes from 0 to 10.

|

|

||||||

|

|

||||||

- The order of questions influences the answer. Wording as well: framing the question with the term "welfare" instead of with the formulation "incentives for people with low incomes" produces a big effect.

|

|

||||||

|

|

||||||

- Options that appear at the beginning of a long list seem to have a higher likelihood of being selected. For example, when alternatives are listed from poor to excellent rather than the other way around, respondents are more likely to use the negative end of the scale. Unless it's in a phone interview, or read out loud, in which case the last options are more likely.

|

|

||||||

|

|

||||||

- When asked whether they had visited a doctor in the last two weeks: Apparently, when respondents have had a recent doctor visit, but not one within the last two weeks, there is a tendency to want to report it. In essence, they feel that accurate reporting really means that they are the kind of person who saw a doctor recently, if not exactly and precisely within the last two weeks.

|

|

||||||

|

|

||||||

- The percentage of people supporting US involvement in WW2 almost doubled if the word "Hitler" appeared in the question.

|

|

||||||

|

|

||||||

Frankly, I find this so fucking scary. I guess that some part of me implicitly had a model of people having a thought-out position with respect to democracy, which questions merely elicited. As if.

|

|

||||||

|

|

||||||

## The Dark Arts!

|

|

||||||

|

|

||||||

Key extract: "Evidence shows that expressions of reluctance can be overcome" (p. 175, *The Power of Survey Design*, and Chapter 5 of the same book). I'm fascinated by this chapter, because the author has spent way more time thinking about this than the survey-taker: he is one or two levels above the potential answerer and can nudge his behavior.

|

|

||||||

|

|

||||||

As a short aside, the analogies to pick-up artistry are abundant. One could charitably summarize their position as highlighting that questions pertaining to romance and sex will be answered differently depending on how they're posed, because people don't have an answer written in stone beforehand.

|

|

||||||

|

|

||||||

Of course, the questionnaire writer could write biased questions with the intention of producing the answers he wishes to obtain, but these books go in a more subtle direction: once good questions have been written, how do you convince people, perhaps initially reluctant, to take part in your survey? How do you get them to answer sensitive questions truthfully?

|

|

||||||

|

|

||||||

For example:

|

|

||||||

|

|

||||||

> Three factors have been proven to affect persuasion: the quantity of the arguments presented, the quality of the arguments, and the relevance of the topic to the respondent. Research on attitude change shows that the number of arguments (quantity) presented has an impact on respondent attitudes only if saliency is low (figure 5.6). Conversely, the quality of the arguments has a positive impact on respondents only if personal involvement is high (figure 5.7). When respondents show high involvement, argument quality has a much stronger effect on persuasion, while weak arguments might be counterproductive. At the same time, when saliency is low, the quantity of the arguments appears to be effective, while their quality has no significant persuasive effect (figure 5.8) (Petty and Cacioppo 1984).

|

|

||||||

> These few minutes of introduction will determine the climate of the entire interview. Hence, this time is extremely important and it must be used to pique the respondent’s interest...

|

|

||||||

|

|

||||||

Most importantly, how do you defend against someone who has carried out multiple randomized trials to map out the different behaviors you might adopt, and how to best persuade you in each of them? I feel that Zvi's essay on people who "are out to get you" has mapped the possible behaviors you might adopt in defense. Chief among them is actually being aware.

|

|

||||||

|

|

||||||

### Legitimacy

|

|

||||||

|

|

||||||

At the beginning, make sure to assure legal confidentiality, maybe research the relevant laws in your jurisdiction and make reference to them. Name drop sponsors, include contact names and phone numbers. Explain the importance of your research, its unique characteristics and practical benefits.

|

|

||||||

|

|

||||||

There is a part of signalling confidentiality, legitimacy, and competence which involves actually doing the thing. For example, if you assure legal confidentiality, but then ask for information which would permit easy deanonymization, people might notice and get pissed. But another part is merely: be aware of this dimension.

|

|

||||||

|

|

||||||

The first questions should be easy, pleasant, and interesting. Build up confidence in the survey's objective, stimulate their interest and participation by making sure that the respondent is able to see the relationship between the question asked and the purpose of the study.

|

|

||||||

|

|

||||||

Make sensitive questions longer, as they are then perceived as less threatening. Perhaps add a preface explaining that both alternatives are ok. Don't ask them at the beginning of your survey.

|

|

||||||

|

|

||||||

### Bears repeating: Don't bore the answerer.

|

|

||||||

Seems obvious. Cooperation will be highest when the questionnaire is interesting and when it avoids items difficult to answer, time-consuming, or embarrassing. In my case, making my survey interesting means starting with a prisoner's dilemma with real payoffs, which will double as the monetary incentive to complete the survey.

|

|

||||||

|

|

||||||

It serves no purpose to ask the respondent about something he or she does not understand clearly or that is too far in the past to remember correctly; doing so generates inaccurate information.

|

|

||||||

|

|

||||||

Don't ask a long sequence of very similar questions. This bores and irritates people, which leads them to answer mechanically. A term used for this is acquiescence bias: in questions with an "agree-disagree" or "yes-no" format, people tend to agree or say yes even when the meaning is reversed. In questions with a "0-5" scale, people tend to choose 2.

|

|

||||||

|

|

||||||

On the other hand, don't make the questions too hard. In general, telling the respondents a definition and asking them to classify themselves is too much work.

|

|

||||||

|

|

||||||

### Elite respondents

|

|

||||||

This section might be particularly relevant for the high-IQ crowd characteristic of the EA and LW movements. Again, the key move is to match the level of cognitive complexity of the question with the respondent's level of cognitive ability, as not doing so leads to frustration. Looking back on my time as a survey participant, this does mirror my experience.

|

|

||||||

|

|

||||||

Elites are apparently quickly irritated if the topic of the questions is not of interest to them. Vague queries generate a sense of frustration, and lead to a perception that the study is not legitimate. Oversimplifications are noticed and disliked.

|

|

||||||

|

|

||||||

Start with a narrative question, add open questions at regular intervals throughout the form. Elites “resent being encased in the straightjacket of standardized questions” and feel particularly frustrated if they perceive that the response alternatives do not accurately address their key concern.

|

|

||||||

|

|

||||||

For example, the recent 80,000 Hours survey had the following question: "Have your career plans changed in any way as a result of engaging with 80,000 Hours?". The possible answers were not really exhaustive, and in particular, there was no option for "I made a big change, but only partially as a result of 80,000 Hours". Or "I made a big change, but I am really not sure of what the counterfactual scenario would have been". I remember that this frustrated me because, as far as I remember, the alternatives did not provide a clear way to express this.

|

|

||||||

|

|

||||||

*Improving Survey Questions* goes on at length about ensuring that people are asked questions to which they know the answers, and in some cases, "Have your career plans changed in any way as a result of engaging with 80,000 Hours?" [look up which exact question it was] might not be one of them. Perhaps an alternative would be to divide that question into:

|

|

||||||

|

|

||||||

- Have your career plans changed in any way in the last year?

|

|

||||||

- How big was that change?

|

|

||||||

- Did 80,000h have any influence on it?

|

|

||||||

- Where "100" means that 80,000h was unambiguously causally responsible for that change, "50" means that you would have given even odds to making that change in the absence of any interaction with 80K, and "0" means that you're absolutely sure 80K had nothing to do with that change, how much has 80,000h influenced that change?

|

|

||||||

|

|

||||||

Yet I'm not confident that formulation is superior, and at some level, I trust 80K to have [done their homework](https://80000hours.org/2017/12/annual-review/).

|

|

||||||

|

|

||||||

## Useful categories.

|

|

||||||

|

|

||||||

### Memory

|

|

||||||

|

|

||||||

Events less than two weeks into the past can be remembered without much error. There are several ways in which people can estimate the frequency with which something happens, chiefly:

|

|

||||||

- Availability bias: How easy it is to remember X.

|

|

||||||

- Episodic enumeration: Recalling and counting occurrences of an event

|

|

||||||

- Resorting to some sense of normative frequency.

|

|

||||||

- etc.

|

|

||||||

|

|

||||||

Of these, episodic enumeration turns out to be the most accurate, and people use it more the fewer instances of the thing there are. The wording of the question might be changed to facilitate episodic enumeration.

|

|

||||||

|

|

||||||

Asking a longer question and communicating to respondents the significance of the question both have a positive effect on the accuracy of the answer. This means phrasing such as “please take your time to answer this question,” “the accuracy of this question is particularly important,” or “please take at least 30 seconds to think about this question before answering”.

|

|

||||||

|

|

||||||

If you want to measure knowledge, take into account that recognizing is easier than recalling. More people will be able to recognize a definition of effective altruism than be able to produce one on their own. If you use a multiple-choice question with n options, and x% of people knew the answer, whereas (100-x)% didn't, you might expect that (100-x)/n % hadn't known the answer, but guessed correctly by chance, so you'd see that y% = x% + (100-x)/n % selected the correct option.
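
The relationship y = x + (100 - x)/n can be inverted to estimate x from the observed y, namely x = (n·y - 100)/(n - 1). A small sketch, assuming that everyone who doesn't know the answer guesses uniformly at random among the n options (the function names are mine):

```python
def observed_correct_percent(known_percent, n_options):
    """Percent picking the right option if the rest guess uniformly at random."""
    return known_percent + (100 - known_percent) / n_options

def estimated_known_percent(observed_percent, n_options):
    """Invert the formula above: recover x from the observed y."""
    return (n_options * observed_percent - 100) / (n_options - 1)

print(observed_correct_percent(40, 4))   # 55.0
print(estimated_known_percent(55, 4))    # 40.0
```

If the estimate comes out negative, respondents did worse than chance, which suggests that the uniform-guessing assumption fails, for instance because one wrong option is especially attractive.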

|

|

||||||

|

|

||||||

### Consistency and Ignorance.

|

|

||||||

|

|

||||||

In one of our examples at the beginning, one third of respondents gave an opinion about a fictitious Act. This generalizes; respondents rarely admit ignorance. It is thus a good idea to offer an "I don't know", or "I don't really care about this topic". The recent SlateStarCodex Community Survey had a problem in this regard with respect to some questions, because once checked, they couldn't go unchecked.

|

|

||||||

|

|

||||||

With regards to consistency, it is a good idea to ask similar questions in different parts of the questionnaire to check the consistency of answers. Reverse some of the questions.
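
As a minimal sketch of such a consistency check, assuming a 1-5 agreement scale and a pair of items where one is the reversed form of the other (the scale, the tolerance of one point, and the function names are all assumptions for illustration):

```python
def reverse_score(score, low=1, high=5):
    """Map a rating on a reversed item back onto the original direction."""
    return low + high - score

def inconsistent(original, reversed_item, tolerance=1, low=1, high=5):
    """Flag a respondent whose two answers disagree by more than `tolerance`."""
    return abs(original - reverse_score(reversed_item, low, high)) > tolerance

# Someone who strongly agrees with both a statement and its reversal gets flagged.
print(inconsistent(5, 5))  # True
print(inconsistent(5, 1))  # False
```

A high rate of flagged respondents on a given pair points to a problem with the items themselves (or to mechanical answering) rather than with individual respondents.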

|

|

||||||

|

|

||||||

### Subjective vs objective questions

|

|

||||||

The author of *Improving Survey Questions* views the distinction between objective and subjective questions as very important. That there is no direct way to know about people's subjective states independent of what they tell us apparently has serious metaphysical implications. To this, he devotes a whole chapter.

|

|

||||||

|

|

||||||

Anyways, despite the lack of an independent measure, there are still things to do, chiefly:

|

|

||||||

- Place answers on a single well defined continuum

|

|

||||||

- Specify clearly what is to be rated.

|

|

||||||

|

|

||||||

And yet, the author goes full relativist:

|

|

||||||

> "The concept of bias is meaningless for subjective questions. By changing wording, response order, or other things, it is possible to change the distribution of answers. However, the concept of bias implies systematic deviations from some true score, and there is no true score... Do not conclude that "most people favor gun control", "most people oppose abortions"... All that happened is that a majority of respondents picked response alternatives to a particular question that the researcher chose to interpret as favorable or positive."

|

|

||||||

|

|

||||||

## Test your questionnaire

|

|

||||||

I appreciated the pithy phrases "Armchair discussions cannot replace direct contact with the population being analyzed" and "Everybody thinks they can write good survey questions". With respect to testing a questionnaire, the books go over different strategies and argue for some reflexivity when deciding what type of test to undertake.

|

|

||||||

|

|

||||||

In particular, the intuitive or traditional way to go about testing a questionnaire would be a focus group: you have some test subjects, have them take the survey, and then talk with them or with the interviewers. This, the authors argue, is messy, because some people might dominate the conversation out of proportion to the problems they encountered. Additionally, random respondents are not actually very good judges of questions.

|

|

||||||

|

|

||||||

Instead, no matter what type of test you're carrying out, having a table with issues for each question, filled individually and before any discussion, makes the process less prone to social effects.

|

|

||||||

|

|

||||||

Another alternative is to try to get in the mind of the respondent while they're taking the survey. To this effect, you can ask respondents:

|

|

||||||

- to paraphrase their understanding of the question.

|

|

||||||

- to define terms

|

|

||||||

- for any uncertainties or confusions

|

|

||||||

- how accurately they were able to answer certain questions and how likely they think they or others would be to distort answers to certain questions

|

|

||||||

- if the question called for a numerical figure, how they arrived at the number.

|

|

||||||

|

|

||||||

F.ex.:

|

|

||||||

Question: Overall, how would you rate your health: excellent, very good, fair, or poor?

|

|

||||||

Followup question: When you said that your health was (previous answer), what did you take into account or think about in making that rating?

|

|

||||||

|

|

||||||

In the case of pretesting the survey, a division into conventional, behavioral and cognitive interviews is presented, and the cases in which each of them is more adequate are outlined.

|

|

||||||

|

|

||||||