Add Lerna with dependencies between packages

parent 239c08b6cb
commit 1b3ad56977

.gitignore (vendored, Normal file; 3 lines changed)
@@ -0,0 +1,3 @@
+node_modules
+.cache
+.merlin

13

README.md

13

README.md

|

|

@ -5,15 +5,10 @@ This is an experiment DSL/language for making probabilistic estimates.

|

||||||

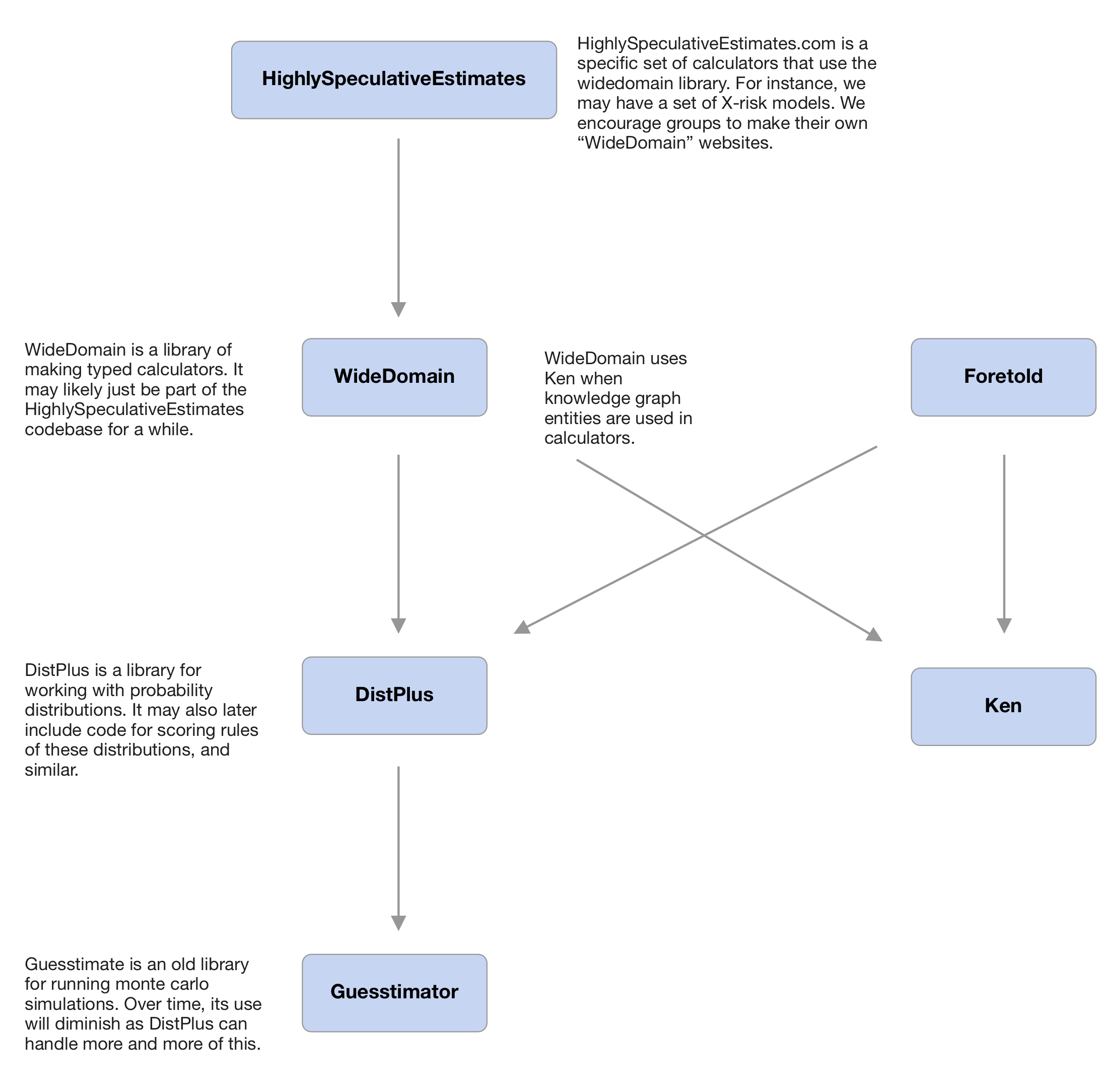

## DistPlus

|

## DistPlus

|

||||||

We have a custom library called DistPlus to handle distributions with additional metadata. This helps handle mixed distributions (continuous + discrete), a cache for a cdf, possible unit types (specific times are supported), and limited domains.

|

We have a custom library called DistPlus to handle distributions with additional metadata. This helps handle mixed distributions (continuous + discrete), a cache for a cdf, possible unit types (specific times are supported), and limited domains.

|

||||||

|

|

||||||

## Running

|

## Running packages in the monorepo

|

||||||

|

This application uses `lerna` to manage dependencies between packages. To install

|

||||||

Currently it only has a few very simple models.

|

dependencies of all packages, run:

|

||||||

|

|

||||||

```

|

```

|

||||||

yarn

|

lerna bootstrap

|

||||||

yarn run start

|

|

||||||

yarn run parcel

|

|

||||||

```

|

```

|

||||||

|

|

||||||

## Expected future setup

|

|

||||||

|

|

||||||

|

|

|

||||||
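With this change, setup goes through `lerna bootstrap`, which installs each package's dependencies and links local packages that depend on each other; in this commit the playground's package.json now depends on `@foretold-app/squiggle`. The sketch below only illustrates the underlying idea of processing a local dependency before its dependents (Kahn's topological sort). It is not Lerna's implementation; the `manifests` array is a hypothetical, simplified view of each package.json, and it assumes the squiggle package is named `@foretold-app/squiggle` inside `packages/`.

```javascript
// Hypothetical input: a simplified view of each package's package.json.
// Only `name` and `dependencies` are read; version ranges are ignored.
const manifests = [
  {
    name: "@foretold-app/squiggle-playground",
    dependencies: { "@foretold-app/squiggle": "^0.1.9", react: "17.0.2" },
  },
  { name: "@foretold-app/squiggle", dependencies: {} },
];

// Kahn's algorithm: repeatedly emit packages whose local dependencies have
// all been emitted already. External dependencies (e.g. react) are skipped.
function topologicalOrder(pkgs) {
  const local = new Set(pkgs.map((p) => p.name));
  const unmet = new Map(); // name -> number of local deps not yet emitted
  const dependents = new Map(); // name -> local packages that depend on it
  for (const p of pkgs) {
    const deps = Object.keys(p.dependencies || {}).filter((d) => local.has(d));
    unmet.set(p.name, deps.length);
    for (const d of deps) {
      if (!dependents.has(d)) dependents.set(d, []);
      dependents.get(d).push(p.name);
    }
  }
  const queue = pkgs.filter((p) => unmet.get(p.name) === 0).map((p) => p.name);
  const order = [];
  while (queue.length > 0) {
    const name = queue.shift();
    order.push(name);
    for (const next of dependents.get(name) || []) {
      unmet.set(next, unmet.get(next) - 1);
      if (unmet.get(next) === 0) queue.push(next);
    }
  }
  // Anything left over is part of a cycle and can never be emitted.
  if (order.length !== pkgs.length) {
    throw new Error("dependency cycle between local packages");
  }
  return order;
}

// The squiggle library comes first, then the playground that uses it.
console.log(topologicalOrder(manifests));
```

Lerna documents a similar topological ordering for commands such as `lerna run` (disabled with `--no-sort`), which is why a cycle between local packages is treated as an error here.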

lerna.json (Normal file; 6 lines changed)
@@ -0,0 +1,6 @@
+{
+  "packages": [
+    "packages/*"
+  ],
+  "version": "0.0.0"
+}

package.json (13 lines changed)
@@ -1,8 +1,7 @@
 {
-  "name": "root",
-  "private": true,
-  "devDependencies": {
-  },
-  "scripts": {
-  }
-}
+  "private": true,
+  "devDependencies": {
+    "lerna": "^4.0.0"
+  },
+  "name": "squiggle"
+}

@@ -1,13 +0,0 @@
-open Jest;
-open Expect;
-
-describe("Bandwidth", () => {
-  test("nrd0()", () => {
-    let data = [|1., 4., 3., 2.|];
-    expect(Bandwidth.nrd0(data)) |> toEqual(0.7625801874014622);
-  });
-  test("nrd()", () => {
-    let data = [|1., 4., 3., 2.|];
-    expect(Bandwidth.nrd(data)) |> toEqual(0.8981499984950554);
-  });
-});
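The deleted Bandwidth test pins `nrd0` and `nrd` to specific values for `[1, 4, 3, 2]`. Those values are consistent with the usual rule-of-thumb kernel bandwidths, `0.9 * min(sd, IQR / 1.34) * n^(-1/5)` and `1.06 * min(sd, IQR / 1.34) * n^(-1/5)`, provided `sd` is the population standard deviation (dividing by `n`, unlike R's `bw.nrd0`, which uses the sample variance). The sketch below is a reconstruction under that assumption, not the library's source; the helper names are hypothetical, and the zero-spread fallback that R applies is not handled.

```javascript
// Population standard deviation (divides by n, not n - 1).
function populationStdev(xs) {
  const n = xs.length;
  const mean = xs.reduce((a, b) => a + b, 0) / n;
  const sumSq = xs.reduce((acc, x) => acc + (x - mean) ** 2, 0);
  return Math.sqrt(sumSq / n);
}

// Linear-interpolation quantile on sorted data (R's default "type 7").
function quantile(sorted, p) {
  const h = (sorted.length - 1) * p;
  const lo = Math.floor(h);
  const hi = Math.min(lo + 1, sorted.length - 1);
  return sorted[lo] + (h - lo) * (sorted[hi] - sorted[lo]);
}

// Shared term: min(sd, IQR / 1.34) scaled by n^(-1/5).
function spreadTerm(data) {
  const sorted = [...data].sort((a, b) => a - b);
  const iqr = quantile(sorted, 0.75) - quantile(sorted, 0.25);
  const spread = Math.min(populationStdev(data), iqr / 1.34);
  return spread * Math.pow(data.length, -0.2);
}

const nrd0 = (data) => 0.9 * spreadTerm(data);
const nrd = (data) => 1.06 * spreadTerm(data);

const data = [1, 4, 3, 2];
console.log(nrd0(data), nrd(data)); // ≈ 0.76258 and ≈ 0.89815
```

For this input the population sd (about 1.1180) is slightly smaller than IQR / 1.34 (about 1.1194), so the quantile method does not affect the result; with the sample sd the IQR term would win and `nrd0` would come out near 0.7635 instead.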

@@ -1,104 +0,0 @@
-open Jest;
-open Expect;
-
-let makeTest = (~only=false, str, item1, item2) =>
-  only
-    ? Only.test(str, () =>
-        expect(item1) |> toEqual(item2)
-      )
-    : test(str, () =>
-        expect(item1) |> toEqual(item2)
-      );
-
-describe("DistTypes", () => {
-  describe("Domain", () => {
-    let makeComplete = (yPoint, expectation) =>
-      makeTest(
-        "With input: " ++ Js.Float.toString(yPoint),
-        DistTypes.Domain.yPointToSubYPoint(Complete, yPoint),
-        expectation,
-      );
-    let makeSingle =
-        (
-          direction: [ | `left | `right],
-          excludingProbabilityMass,
-          yPoint,
-          expectation,
-        ) =>
-      makeTest(
-        "Excluding: "
-        ++ Js.Float.toString(excludingProbabilityMass)
-        ++ " and yPoint: "
-        ++ Js.Float.toString(yPoint),
-        DistTypes.Domain.yPointToSubYPoint(
-          direction == `left
-            ? LeftLimited({xPoint: 3.0, excludingProbabilityMass})
-            : RightLimited({xPoint: 3.0, excludingProbabilityMass}),
-          yPoint,
-        ),
-        expectation,
-      );
-    let makeDouble = (domain, yPoint, expectation) =>
-      makeTest(
-        "Excluding: limits",
-        DistTypes.Domain.yPointToSubYPoint(domain, yPoint),
-        expectation,
-      );
-
-    describe("With Complete Domain", () => {
-      makeComplete(0.0, Some(0.0));
-      makeComplete(0.6, Some(0.6));
-      makeComplete(1.0, Some(1.0));
-    });
-    describe("With Left Limit", () => {
-      makeSingle(`left, 0.5, 1.0, Some(1.0));
-      makeSingle(`left, 0.5, 0.75, Some(0.5));
-      makeSingle(`left, 0.8, 0.9, Some(0.5));
-      makeSingle(`left, 0.5, 0.4, None);
-      makeSingle(`left, 0.5, 0.5, Some(0.0));
-    });
-    describe("With Right Limit", () => {
-      makeSingle(`right, 0.5, 1.0, None);
-      makeSingle(`right, 0.5, 0.25, Some(0.5));
-      makeSingle(`right, 0.8, 0.5, None);
-      makeSingle(`right, 0.2, 0.2, Some(0.25));
-      makeSingle(`right, 0.5, 0.5, Some(1.0));
-      makeSingle(`right, 0.5, 0.0, Some(0.0));
-      makeSingle(`right, 0.5, 0.5, Some(1.0));
-    });
-    describe("With Left and Right Limit", () => {
-      makeDouble(
-        LeftAndRightLimited(
-          {excludingProbabilityMass: 0.25, xPoint: 3.0},
-          {excludingProbabilityMass: 0.25, xPoint: 10.0},
-        ),
-        0.5,
-        Some(0.5),
-      );
-      makeDouble(
-        LeftAndRightLimited(
-          {excludingProbabilityMass: 0.1, xPoint: 3.0},
-          {excludingProbabilityMass: 0.1, xPoint: 10.0},
-        ),
-        0.2,
-        Some(0.125),
-      );
-      makeDouble(
-        LeftAndRightLimited(
-          {excludingProbabilityMass: 0.1, xPoint: 3.0},
-          {excludingProbabilityMass: 0.1, xPoint: 10.0},
-        ),
-        0.1,
-        Some(0.0),
-      );
-      makeDouble(
-        LeftAndRightLimited(
-          {excludingProbabilityMass: 0.1, xPoint: 3.0},
-          {excludingProbabilityMass: 0.1, xPoint: 10.0},
-        ),
-        0.05,
-        None,
-      );
-    });
-  })
-});
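The deleted DistTypes test describes how `Domain.yPointToSubYPoint` rescales a cumulative probability `y` into a domain that excludes some probability mass on the left and/or right. Every expectation in it is consistent with the rule `(y - left) / (1 - left - right)` when `left <= y <= 1 - right`, and no result otherwise. The JavaScript below is a minimal translation inferred from those test cases alone, not the library's code: the `kind`/`left`/`right` object shapes are assumptions that loosely mirror the ReasonML constructors, `xPoint` is left out because the rule never uses it, and `null` stands in for `None`.

```javascript
// Domain variants (hypothetical JS shapes for the ReasonML constructors):
//   { kind: "Complete" }
//   { kind: "LeftLimited", excludingProbabilityMass }
//   { kind: "RightLimited", excludingProbabilityMass }
//   { kind: "LeftAndRightLimited", left: {...}, right: {...} }
function yPointToSubYPoint(domain, yPoint) {
  let left = 0; // probability mass excluded below the domain
  let right = 0; // probability mass excluded above the domain
  switch (domain.kind) {
    case "Complete":
      break;
    case "LeftLimited":
      left = domain.excludingProbabilityMass;
      break;
    case "RightLimited":
      right = domain.excludingProbabilityMass;
      break;
    case "LeftAndRightLimited":
      left = domain.left.excludingProbabilityMass;
      right = domain.right.excludingProbabilityMass;
      break;
    default:
      throw new Error(`unknown domain kind: ${domain.kind}`);
  }
  // A y-point inside an excluded tail has no position in the sub-domain.
  if (yPoint < left || yPoint > 1 - right) return null;
  // Shift past the left tail, then stretch the remaining mass to [0, 1].
  return (yPoint - left) / (1 - left - right);
}

console.log(yPointToSubYPoint({ kind: "LeftLimited", excludingProbabilityMass: 0.5 }, 0.75)); // 0.5
console.log(yPointToSubYPoint({ kind: "RightLimited", excludingProbabilityMass: 0.5 }, 1.0)); // null
```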

@@ -1,415 +0,0 @@
-open Jest;
-open Expect;
-
-let shape: DistTypes.xyShape = {xs: [|1., 4., 8.|], ys: [|8., 9., 2.|]};
-
-// let makeTest = (~only=false, str, item1, item2) =>
-//   only
-//     ? Only.test(str, () =>
-//         expect(item1) |> toEqual(item2)
-//       )
-//     : test(str, () =>
-//         expect(item1) |> toEqual(item2)
-//       );
-
-// let makeTestCloseEquality = (~only=false, str, item1, item2, ~digits) =>
-//   only
-//     ? Only.test(str, () =>
-//         expect(item1) |> toBeSoCloseTo(item2, ~digits)
-//       )
-//     : test(str, () =>
-//         expect(item1) |> toBeSoCloseTo(item2, ~digits)
-//       );
-
-// describe("Shape", () => {
-//   describe("Continuous", () => {
-//     open Continuous;
-//     let continuous = make(`Linear, shape, None);
-//     makeTest("minX", T.minX(continuous), 1.0);
-//     makeTest("maxX", T.maxX(continuous), 8.0);
-//     makeTest(
-//       "mapY",
-//       T.mapY(r => r *. 2.0, continuous) |> getShape |> (r => r.ys),
-//       [|16., 18.0, 4.0|],
-//     );
-//     describe("xToY", () => {
-//       describe("when Linear", () => {
-//         makeTest(
-//           "at 4.0",
-//           T.xToY(4., continuous),
-//           {continuous: 9.0, discrete: 0.0},
-//         );
-//         // Note: This below is weird to me, I'm not sure if it's what we want really.
-//         makeTest(
-//           "at 0.0",
-//           T.xToY(0., continuous),
-//           {continuous: 8.0, discrete: 0.0},
-//         );
-//         makeTest(
-//           "at 5.0",
-//           T.xToY(5., continuous),
-//           {continuous: 7.25, discrete: 0.0},
-//         );
-//         makeTest(
-//           "at 10.0",
-//           T.xToY(10., continuous),
-//           {continuous: 2.0, discrete: 0.0},
-//         );
-//       });
-//       describe("when Stepwise", () => {
-//         let continuous = make(`Stepwise, shape, None);
-//         makeTest(
-//           "at 4.0",
-//           T.xToY(4., continuous),
-//           {continuous: 9.0, discrete: 0.0},
-//         );
-//         makeTest(
-//           "at 0.0",
-//           T.xToY(0., continuous),
-//           {continuous: 0.0, discrete: 0.0},
-//         );
-//         makeTest(
-//           "at 5.0",
-//           T.xToY(5., continuous),
-//           {continuous: 9.0, discrete: 0.0},
-//         );
-//         makeTest(
-//           "at 10.0",
-//           T.xToY(10., continuous),
-//           {continuous: 2.0, discrete: 0.0},
-//         );
-//       });
-//     });
-//     makeTest(
-//       "integral",
-//       T.Integral.get(~cache=None, continuous) |> getShape,
-//       {xs: [|1.0, 4.0, 8.0|], ys: [|0.0, 25.5, 47.5|]},
-//     );
-//     makeTest(
-//       "toLinear",
-//       {
-//         let continuous =
-//           make(`Stepwise, {xs: [|1., 4., 8.|], ys: [|0.1, 5., 1.0|]}, None);
-//         continuous |> toLinear |> E.O.fmap(getShape);
-//       },
-//       Some({
-//         xs: [|1.00007, 1.00007, 4.0, 4.00007, 8.0, 8.00007|],
-//         ys: [|0.0, 0.1, 0.1, 5.0, 5.0, 1.0|],
-//       }),
-//     );
-//     makeTest(
-//       "toLinear",
-//       {
-//         let continuous = make(`Stepwise, {xs: [|0.0|], ys: [|0.3|]}, None);
-//         continuous |> toLinear |> E.O.fmap(getShape);
-//       },
-//       Some({xs: [|0.0|], ys: [|0.3|]}),
-//     );
-//     makeTest(
-//       "integralXToY",
-//       T.Integral.xToY(~cache=None, 0.0, continuous),
-//       0.0,
-//     );
-//     makeTest(
-//       "integralXToY",
-//       T.Integral.xToY(~cache=None, 2.0, continuous),
-//       8.5,
-//     );
-//     makeTest(
-//       "integralXToY",
-//       T.Integral.xToY(~cache=None, 100.0, continuous),
-//       47.5,
-//     );
-//     makeTest(
-//       "integralEndY",
-//       continuous
-//       |> T.normalize //scaleToIntegralSum(~intendedSum=1.0)
-//       |> T.Integral.sum(~cache=None),
-//       1.0,
-//     );
-//   });
-
-//   describe("Discrete", () => {
-//     open Discrete;
-//     let shape: DistTypes.xyShape = {
-//       xs: [|1., 4., 8.|],
-//       ys: [|0.3, 0.5, 0.2|],
-//     };
-//     let discrete = make(shape, None);
-//     makeTest("minX", T.minX(discrete), 1.0);
-//     makeTest("maxX", T.maxX(discrete), 8.0);
-//     makeTest(
-//       "mapY",
-//       T.mapY(r => r *. 2.0, discrete) |> (r => getShape(r).ys),
-//       [|0.6, 1.0, 0.4|],
-//     );
-//     makeTest(
-//       "xToY at 4.0",
-//       T.xToY(4., discrete),
-//       {discrete: 0.5, continuous: 0.0},
-//     );
-//     makeTest(
-//       "xToY at 0.0",
-//       T.xToY(0., discrete),
-//       {discrete: 0.0, continuous: 0.0},
-//     );
-//     makeTest(
-//       "xToY at 5.0",
-//       T.xToY(5., discrete),
-//       {discrete: 0.0, continuous: 0.0},
-//     );
-//     makeTest(
-//       "scaleBy",
-//       scaleBy(~scale=4.0, discrete),
-//       make({xs: [|1., 4., 8.|], ys: [|1.2, 2.0, 0.8|]}, None),
-//     );
-//     makeTest(
-//       "normalize, then scale by 4.0",
-//       discrete
-//       |> T.normalize
-//       |> scaleBy(~scale=4.0),
-//       make({xs: [|1., 4., 8.|], ys: [|1.2, 2.0, 0.8|]}, None),
-//     );
-//     makeTest(
-//       "scaleToIntegralSum: back and forth",
-//       discrete
-//       |> T.normalize
-//       |> scaleBy(~scale=4.0)
-//       |> T.normalize,
-//       discrete,
-//     );
-//     makeTest(
-//       "integral",
-//       T.Integral.get(~cache=None, discrete),
-//       Continuous.make(
-//         `Stepwise,
-//         {xs: [|1., 4., 8.|], ys: [|0.3, 0.8, 1.0|]},
-//         None
-//       ),
-//     );
-//     makeTest(
-//       "integral with 1 element",
-//       T.Integral.get(~cache=None, Discrete.make({xs: [|0.0|], ys: [|1.0|]}, None)),
-//       Continuous.make(`Stepwise, {xs: [|0.0|], ys: [|1.0|]}, None),
-//     );
-//     makeTest(
-//       "integralXToY",
-//       T.Integral.xToY(~cache=None, 6.0, discrete),
-//       0.9,
-//     );
-//     makeTest("integralEndY", T.Integral.sum(~cache=None, discrete), 1.0);
-//     makeTest("mean", T.mean(discrete), 3.9);
-//     makeTestCloseEquality(
-//       "variance",
-//       T.variance(discrete),
-//       5.89,
-//       ~digits=7,
-//     );
-//   });
-
-//   describe("Mixed", () => {
-//     open Distributions.Mixed;
-//     let discreteShape: DistTypes.xyShape = {
-//       xs: [|1., 4., 8.|],
-//       ys: [|0.3, 0.5, 0.2|],
-//     };
-//     let discrete = Discrete.make(discreteShape, None);
-//     let continuous =
-//       Continuous.make(
-//         `Linear,
-//         {xs: [|3., 7., 14.|], ys: [|0.058, 0.082, 0.124|]},
-//         None
-//       )
-//       |> Continuous.T.normalize; //scaleToIntegralSum(~intendedSum=1.0);
-//     let mixed = Mixed.make(
-//       ~continuous,
-//       ~discrete,
-//     );
-//     makeTest("minX", T.minX(mixed), 1.0);
-//     makeTest("maxX", T.maxX(mixed), 14.0);
-//     makeTest(
-//       "mapY",
-//       T.mapY(r => r *. 2.0, mixed),
-//       Mixed.make(
-//         ~continuous=
-//           Continuous.make(
-//             `Linear,
-//             {
-//               xs: [|3., 7., 14.|],
-//               ys: [|
-//                 0.11588411588411589,
-//                 0.16383616383616384,
-//                 0.24775224775224775,
-//               |],
-//             },
-//             None
-//           ),
-//         ~discrete=Discrete.make({xs: [|1., 4., 8.|], ys: [|0.6, 1.0, 0.4|]}, None)
-//       ),
-//     );
-//     makeTest(
-//       "xToY at 4.0",
-//       T.xToY(4., mixed),
-//       {discrete: 0.25, continuous: 0.03196803196803197},
-//     );
-//     makeTest(
-//       "xToY at 0.0",
-//       T.xToY(0., mixed),
-//       {discrete: 0.0, continuous: 0.028971028971028972},
-//     );
-//     makeTest(
-//       "xToY at 5.0",
-//       T.xToY(7., mixed),
-//       {discrete: 0.0, continuous: 0.04095904095904096},
-//     );
-//     makeTest("integralEndY", T.Integral.sum(~cache=None, mixed), 1.0);
-//     makeTest(
-//       "scaleBy",
-//       Mixed.scaleBy(~scale=2.0, mixed),
-//       Mixed.make(
-//         ~continuous=
-//           Continuous.make(
-//             `Linear,
-//             {
-//               xs: [|3., 7., 14.|],
-//               ys: [|
-//                 0.11588411588411589,
-//                 0.16383616383616384,
-//                 0.24775224775224775,
-//               |],
-//             },
-//             None
-//           ),
-//         ~discrete=Discrete.make({xs: [|1., 4., 8.|], ys: [|0.6, 1.0, 0.4|]}, None),
-//       ),
-//     );
-//     makeTest(
-//       "integral",
-//       T.Integral.get(~cache=None, mixed),
-//       Continuous.make(
-//         `Linear,
-//         {
-//           xs: [|1.00007, 1.00007, 3., 4., 4.00007, 7., 8., 8.00007, 14.|],
-//           ys: [|
-//             0.0,
-//             0.0,
-//             0.15,
-//             0.18496503496503497,
-//             0.4349674825174825,
-//             0.5398601398601399,
-//             0.5913086913086913,
-//             0.6913122927072927,
-//             1.0,
-//           |],
-//         },
-//         None,
-//       ),
-//     );
-//   });
-
-//   describe("Distplus", () => {
-//     open DistPlus;
-//     let discreteShape: DistTypes.xyShape = {
-//       xs: [|1., 4., 8.|],
-//       ys: [|0.3, 0.5, 0.2|],
-//     };
-//     let discrete = Discrete.make(discreteShape, None);
-//     let continuous =
-//       Continuous.make(
-//         `Linear,
-//         {xs: [|3., 7., 14.|], ys: [|0.058, 0.082, 0.124|]},
-//         None
-//       )
-//       |> Continuous.T.normalize; //scaleToIntegralSum(~intendedSum=1.0);
-//     let mixed =
-//       Mixed.make(
-//         ~continuous,
-//         ~discrete,
-//       );
-//     let distPlus =
-//       DistPlus.make(
-//         ~shape=Mixed(mixed),
-//         ~squiggleString=None,
-//         (),
-//       );
-//     makeTest("minX", T.minX(distPlus), 1.0);
-//     makeTest("maxX", T.maxX(distPlus), 14.0);
-//     makeTest(
-//       "xToY at 4.0",
-//       T.xToY(4., distPlus),
-//       {discrete: 0.25, continuous: 0.03196803196803197},
-//     );
-//     makeTest(
-//       "xToY at 0.0",
-//       T.xToY(0., distPlus),
-//       {discrete: 0.0, continuous: 0.028971028971028972},
-//     );
-//     makeTest(
-//       "xToY at 5.0",
-//       T.xToY(7., distPlus),
-//       {discrete: 0.0, continuous: 0.04095904095904096},
-//     );
-//     makeTest("integralEndY", T.Integral.sum(~cache=None, distPlus), 1.0);
-//     makeTest(
-//       "integral",
-//       T.Integral.get(~cache=None, distPlus) |> T.toContinuous,
-//       Some(
-//         Continuous.make(
-//           `Linear,
-//           {
-//             xs: [|1.00007, 1.00007, 3., 4., 4.00007, 7., 8., 8.00007, 14.|],
-//             ys: [|
-//               0.0,
-//               0.0,
-//               0.15,
-//               0.18496503496503497,
-//               0.4349674825174825,
-//               0.5398601398601399,
-//               0.5913086913086913,
-//               0.6913122927072927,
-//               1.0,
-//             |],
-//           },
-//           None,
-//         ),
-//       ),
-//     );
-//   });
-
-//   describe("Shape", () => {
-//     let mean = 10.0;
-//     let stdev = 4.0;
-//     let variance = stdev ** 2.0;
-//     let numSamples = 10000;
-//     open Distributions.Shape;
-//     let normal: SymbolicTypes.symbolicDist = `Normal({mean, stdev});
-//     let normalShape = ExpressionTree.toShape(numSamples, `SymbolicDist(normal));
-//     let lognormal = SymbolicDist.Lognormal.fromMeanAndStdev(mean, stdev);
-//     let lognormalShape = ExpressionTree.toShape(numSamples, `SymbolicDist(lognormal));
-
-//     makeTestCloseEquality(
-//       "Mean of a normal",
-//       T.mean(normalShape),
-//       mean,
-//       ~digits=2,
-//     );
-//     makeTestCloseEquality(
-//       "Variance of a normal",
-//       T.variance(normalShape),
-//       variance,
-//       ~digits=1,
-//     );
-//     makeTestCloseEquality(
-//       "Mean of a lognormal",
-//       T.mean(lognormalShape),
-//       mean,
-//       ~digits=2,
-//     );
-//     makeTestCloseEquality(
-//       "Variance of a lognormal",
-//       T.variance(lognormalShape),
-//       variance,
-//       ~digits=0,
-//     );
-//   });
-// });
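In the commented-out `Discrete` tests, the expected `mean` (3.9) and `variance` (5.89) for point masses 0.3, 0.5 and 0.2 at x = 1, 4 and 8 are the ordinary moments of a discrete distribution: mean = Σ p·x and variance = Σ p·x² − mean². The check below reproduces that arithmetic in JavaScript, assuming the masses already sum to 1; the `xs`/`ys` layout follows `DistTypes.xyShape`, and `discreteMoments` is a hypothetical helper, not part of the library.

```javascript
// Discrete distribution as parallel arrays, like DistTypes.xyShape:
// xs are the point locations, ys the probability mass at each point.
const shape = { xs: [1, 4, 8], ys: [0.3, 0.5, 0.2] };

// Mean and variance of a normalized discrete distribution.
function discreteMoments({ xs, ys }) {
  let mean = 0;
  let secondMoment = 0;
  for (let i = 0; i < xs.length; i++) {
    mean += ys[i] * xs[i]; // Σ p·x
    secondMoment += ys[i] * xs[i] * xs[i]; // Σ p·x²
  }
  return { mean, variance: secondMoment - mean * mean };
}

const { mean, variance } = discreteMoments(shape);
console.log(mean, variance); // ≈ 3.9 and ≈ 5.89
```

The Σ p·x² − mean² form mirrors the hand calculation (21.1 − 15.21 = 5.89); for values far from zero, the equivalent Σ p·(x − mean)² is numerically safer.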

@@ -1,57 +0,0 @@
-open Jest;
-open Expect;
-
-let makeTest = (~only=false, str, item1, item2) =>
-  only
-    ? Only.test(str, () =>
-        expect(item1) |> toEqual(item2)
-      )
-    : test(str, () =>
-        expect(item1) |> toEqual(item2)
-      );
-
-let evalParams: ExpressionTypes.ExpressionTree.evaluationParams = {
-  samplingInputs: {
-    sampleCount: 1000,
-    outputXYPoints: 10000,
-    kernelWidth: None,
-    shapeLength: 1000,
-  },
-  environment:
-    [|
-      ("K", `SymbolicDist(`Float(1000.0))),
-      ("M", `SymbolicDist(`Float(1000000.0))),
-      ("B", `SymbolicDist(`Float(1000000000.0))),
-      ("T", `SymbolicDist(`Float(1000000000000.0))),
-    |]
-    ->Belt.Map.String.fromArray,
-  evaluateNode: ExpressionTreeEvaluator.toLeaf,
-};
-
-let shape1: DistTypes.xyShape = {xs: [|1., 4., 8.|], ys: [|0.2, 0.4, 0.8|]};
-
-describe("XYShapes", () => {
-  describe("logScorePoint", () => {
-    makeTest(
-      "When identical",
-      {
-        let foo =
-          HardcodedFunctions.(
-            makeRenderedDistFloat("scaleMultiply", (dist, float) =>
-              verticalScaling(`Multiply, dist, float)
-            )
-          );
-
-        TypeSystem.Function.T.run(
-          evalParams,
-          [|
-            `SymbolicDist(`Float(100.0)),
-            `SymbolicDist(`Float(1.0)),
-          |],
-          foo,
-        );
-      },
-      Error("Sad"),
-    )
-  })
-});

@@ -1,24 +0,0 @@
-open Jest;
-open Expect;
-
-let makeTest = (~only=false, str, item1, item2) =>
-  only
-    ? Only.test(str, () =>
-        expect(item1) |> toEqual(item2)
-      )
-    : test(str, () =>
-        expect(item1) |> toEqual(item2)
-      );
-
-describe("Lodash", () => {
-  describe("Lodash", () => {
-    makeTest("min", Lodash.min([|1, 3, 4|]), 1);
-    makeTest("max", Lodash.max([|1, 3, 4|]), 4);
-    makeTest("uniq", Lodash.uniq([|1, 3, 4, 4|]), [|1, 3, 4|]);
-    makeTest(
-      "countBy",
-      Lodash.countBy([|1, 3, 4, 4|], r => r),
-      Js.Dict.fromArray([|("1", 1), ("3", 1), ("4", 2)|]),
-    );
-  })
-});

@@ -1,51 +0,0 @@
-open Jest;
-open Expect;
-
-let makeTest = (~only=false, str, item1, item2) =>
-  only
-    ? Only.test(str, () =>
-        expect(item1) |> toEqual(item2)
-      )
-    : test(str, () =>
-        expect(item1) |> toEqual(item2)
-      );
-
-describe("Lodash", () => {
-  describe("Lodash", () => {
-    makeTest(
-      "split",
-      SamplesToShape.Internals.T.splitContinuousAndDiscrete([|1.432, 1.33455, 2.0|]),
-      ([|1.432, 1.33455, 2.0|], E.FloatFloatMap.empty()),
-    );
-    makeTest(
-      "split",
-      SamplesToShape.Internals.T.splitContinuousAndDiscrete([|
-        1.432,
-        1.33455,
-        2.0,
-        2.0,
-        2.0,
-        2.0,
-      |])
-      |> (((c, disc)) => (c, disc |> E.FloatFloatMap.toArray)),
-      ([|1.432, 1.33455|], [|(2.0, 4.0)|]),
-    );
-
-    let makeDuplicatedArray = count => {
-      let arr = Belt.Array.range(1, count) |> E.A.fmap(float_of_int);
-      let sorted = arr |> Belt.SortArray.stableSortBy(_, compare);
-      E.A.concatMany([|sorted, sorted, sorted, sorted|])
-      |> Belt.SortArray.stableSortBy(_, compare);
-    };
-
-    let (_, discrete) =
-      SamplesToShape.Internals.T.splitContinuousAndDiscrete(makeDuplicatedArray(10));
-    let toArr = discrete |> E.FloatFloatMap.toArray;
-    makeTest("splitMedium", toArr |> Belt.Array.length, 10);
-
-    let (c, discrete) =
-      SamplesToShape.Internals.T.splitContinuousAndDiscrete(makeDuplicatedArray(500));
-    let toArr = discrete |> E.FloatFloatMap.toArray;
-    makeTest("splitMedium", toArr |> Belt.Array.length, 500);
-  })
-});
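The deleted SamplesToShape test shows the intent of `splitContinuousAndDiscrete`: repeated sample values are pulled out as discrete point masses (value → count), and the remaining samples stay continuous. The tests only establish that a value seen once stays continuous and a value seen four times becomes discrete, so the `minCount` threshold below (default 2) is an assumption, as is using a plain JavaScript `Map` in place of `E.FloatFloatMap`. This is an illustrative sketch, not the library's algorithm.

```javascript
// Split samples into continuous values and discrete point masses.
// `minCount` (hypothetical) is how many occurrences make a value discrete.
function splitContinuousAndDiscrete(samples, minCount = 2) {
  // First pass: count how often each exact value occurs.
  const counts = new Map();
  for (const x of samples) counts.set(x, (counts.get(x) || 0) + 1);

  // Second pass: route each sample, preserving the input order.
  const continuous = [];
  const discrete = new Map();
  for (const x of samples) {
    const n = counts.get(x);
    if (n >= minCount) discrete.set(x, n); // repeated value: point mass
    else continuous.push(x); // unique value: continuous sample
  }
  return [continuous, discrete];
}

const [c, d] = splitContinuousAndDiscrete([1.432, 1.33455, 2.0, 2.0, 2.0, 2.0]);
// continuous: 1.432, 1.33455; discrete: 2 -> 4
console.log(c, [...d]);
```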

@@ -1,63 +0,0 @@
-open Jest;
-open Expect;
-
-let makeTest = (~only=false, str, item1, item2) =>
-  only
-    ? Only.test(str, () =>
-        expect(item1) |> toEqual(item2)
-      )
-    : test(str, () =>
-        expect(item1) |> toEqual(item2)
-      );
-
-let shape1: DistTypes.xyShape = {xs: [|1., 4., 8.|], ys: [|0.2, 0.4, 0.8|]};
-
-let shape2: DistTypes.xyShape = {
-  xs: [|1., 5., 10.|],
-  ys: [|0.2, 0.5, 0.8|],
-};
-
-let shape3: DistTypes.xyShape = {
-  xs: [|1., 20., 50.|],
-  ys: [|0.2, 0.5, 0.8|],
-};
-
-describe("XYShapes", () => {
-  describe("logScorePoint", () => {
-    makeTest(
-      "When identical",
-      XYShape.logScorePoint(30, shape1, shape1),
-      Some(0.0),
-    );
-    makeTest(
-      "When similar",
-      XYShape.logScorePoint(30, shape1, shape2),
-      Some(1.658971191043856),
-    );
-    makeTest(
-      "When very different",
-      XYShape.logScorePoint(30, shape1, shape3),
-      Some(210.3721280423322),
-    );
-  });
-  // describe("transverse", () => {
-  //   makeTest(
-  //     "When very different",
-  //     XYShape.Transversal._transverse(
-  //       (aCurrent, aLast) => aCurrent +. aLast,
-  //       [|1.0, 2.0, 3.0, 4.0|],
-  //     ),
-  //     [|1.0, 3.0, 6.0, 10.0|],
-  //   )
-  // });
-  describe("integrateWithTriangles", () => {
-    makeTest(
-      "integrates correctly",
-      XYShape.Range.integrateWithTriangles(shape1),
-      Some({
-        xs: [|1., 4., 8.|],
-        ys: [|0.0, 0.9000000000000001, 3.3000000000000007|],
-      }),
-    )
-  });
-});
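`XYShape.Range.integrateWithTriangles` in the deleted test returns the running integral of a piecewise-linear shape. For `shape1` the expected `ys` are 0, 0.9 and 3.3 up to floating-point noise, which is exactly the cumulative trapezoid rule: (4 − 1)·(0.2 + 0.4)/2 = 0.9, then 0.9 + (8 − 4)·(0.4 + 0.8)/2 = 3.3 (a rectangle plus a triangle per segment). The commented-out `Continuous` integral test (ys 8, 9, 2 giving 0, 25.5, 47.5) fits the same rule. The JavaScript below is an illustrative sketch, not the library's code; returning `null` for fewer than two points is an assumption the tests do not cover.

```javascript
// Cumulative integral of a piecewise-linear curve given as parallel arrays.
// Each segment adds a trapezoid: width * (leftHeight + rightHeight) / 2.
function integrateWithTriangles({ xs, ys }) {
  if (xs.length < 2 || xs.length !== ys.length) return null;
  const cumulative = [0];
  for (let i = 1; i < xs.length; i++) {
    const width = xs[i] - xs[i - 1];
    const area = (width * (ys[i - 1] + ys[i])) / 2;
    cumulative.push(cumulative[i - 1] + area);
  }
  return { xs: [...xs], ys: cumulative };
}

const shape1 = { xs: [1, 4, 8], ys: [0.2, 0.4, 0.8] };
console.log(integrateWithTriangles(shape1).ys); // ≈ [0, 0.9, 3.3]
```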

@@ -39,7 +39,7 @@
     "@rescript/react",
     "bs-css",
     "bs-css-dom",
-    "squiggle-experimental",
+    "@foretold-app/squiggle",
     "rationale",
     "bs-moment",
     "reschema"

packages/playground/package-lock.json (generated; 54800 lines changed)
File diff suppressed because it is too large

@@ -1,9 +1,10 @@
 {
-  "name": "estiband",
+  "name": "@foretold-app/squiggle-playground",
   "version": "0.1.0",
   "homepage": "https://foretold-app.github.io/estiband/",
   "scripts": {
     "build": "rescript build",
+    "build:deps": "rescript build -with-deps",
     "build:style": "tailwind build src/styles/index.css -o src/styles/tailwind.css",
     "start": "rescript build -w",
     "clean": "rescript clean",

@@ -38,8 +39,8 @@
     "bs-css": "^15.1.0",
     "bs-css-dom": "^3.1.0",
     "bs-moment": "0.6.0",
-    "bs-reform": "^10.0.3",
     "bsb-js": "1.1.7",
+    "css-loader": "^6.6.0",
     "d3": "7.3.0",
     "gh-pages": "2.2.0",
     "jest": "^25.5.1",

@@ -51,17 +52,16 @@
     "moduleserve": "0.9.1",
     "moment": "2.24.0",
     "pdfast": "^0.2.0",
-    "postcss-cli": "7.1.0",
+    "postcss-cli": "^9.1.0",
     "rationale": "0.2.0",
-    "react": "^16.10.0",
+    "react": "17.0.2",
     "react-ace": "^9.2.0",
-    "react-dom": "^0.13.0 || ^0.14.0 || ^15.0.1 || ^16.0.0",
+    "react-dom": "^17.0.2",
     "react-use": "^17.3.2",
     "react-vega": "^7.4.4",
-    "reason-react": ">=0.7.0",
     "reschema": "^2.2.0",
     "rescript": "^9.1.4",
-    "squiggle-experimental": "^0.1.8",
+    "@foretold-app/squiggle": "^0.1.9",
     "tailwindcss": "1.2.0",
     "vega": "*",
     "vega-embed": "6.6.0",

@ -69,9 +69,9 @@

 },
 "devDependencies": {
 "@glennsl/bs-jest": "^0.5.1",
-"bs-platform": "9.0.2",
+"bs-platform": "8.4.2",
 "docsify": "^4.12.2",
-"parcel-bundler": "1.12.4",
+"parcel-bundler": "^1.12.5",
 "parcel-plugin-bundle-visualiser": "^1.2.0",
 "parcel-plugin-less-js-enabled": "1.0.2"
 },

@ -5,4 +5,4 @@

 tailwindcss('./tailwind.js'),
 require('autoprefixer'),
 ],
 };

@ -85,11 +85,6 @@ module O = {

 
 let min = compare(\"<")
 let max = compare(\">")
-module React = {
-let defaultNull = default(React.null)
-let fmapOrNull = fn => \"||>"(fmap(fn), default(React.null))
-let flatten = default(React.null)
-}
 }
 
 /* Functions */

@ -196,18 +191,6 @@ module J = {

 }
 }
 
-module M = {
-let format = MomentRe.Moment.format
-let format_standard = "MMM DD, YYYY HH:mm"
-let format_simple = "L"
-/* TODO: Figure out better name */
-let goFormat_simple = MomentRe.Moment.format(format_simple)
-let goFormat_standard = MomentRe.Moment.format(format_standard)
-let toUtc = MomentRe.momentUtc
-let toJSON = MomentRe.Moment.toJSON
-let momentDefaultFormat = MomentRe.momentDefaultFormat
-}
-
 module JsDate = {
 let fromString = Js.Date.fromString
 let now = Js.Date.now

@ -97,7 +97,7 @@ module DemoDist = {

 <div>
 {switch options {
 | Some(options) =>
-let inputs1 = ProgramEvaluator.Inputs.make(
+let inputs1 = ForetoldAppSquiggle.ProgramEvaluator.Inputs.make(
 ~samplingInputs={
 sampleCount: Some(options.sampleCount),
 outputXYPoints: Some(options.outputXYPoints),

@ -114,15 +114,15 @@ module DemoDist = {

 (),
 )
 
-let distributionList = ProgramEvaluator.evaluateProgram(inputs1)
+let distributionList = ForetoldAppSquiggle.ProgramEvaluator.evaluateProgram(inputs1)
 
 let renderExpression = response1 =>
 switch response1 {
-| #DistPlus(distPlus1) => <DistPlusPlot distPlus={DistPlus.T.normalize(distPlus1)} />
+| #DistPlus(distPlus1) => <DistPlusPlot distPlus={ForetoldAppSquiggle.DistPlus.T.normalize(distPlus1)} />
 | #Float(f) => <NumberShower number=f precision=3 />
 | #Function((f, a), env) =>
 // Problem: When it gets the function, it doesn't save state about previous commands
-let foo: ProgramEvaluator.Inputs.inputs = {
+let foo: ForetoldAppSquiggle.ProgramEvaluator.Inputs.inputs = {
 squiggleString: squiggleString,
 samplingInputs: inputs1.samplingInputs,
 environment: env,

@ -130,13 +130,13 @@ module DemoDist = {

 let results =
 E.A.Floats.range(options.diagramStart, options.diagramStop, options.diagramCount)
 |> E.A.fmap(r =>
-ProgramEvaluator.evaluateFunction(
+ForetoldAppSquiggle.ProgramEvaluator.evaluateFunction(
 foo,
 (f, a),
 [#SymbolicDist(#Float(r))],
 ) |> E.R.bind(_, a =>
 switch a {
-| #DistPlus(d) => Ok((r, DistPlus.T.normalize(d)))
+| #DistPlus(d) => Ok((r, ForetoldAppSquiggle.DistPlus.T.normalize(d)))
 | n =>
 Js.log2("Error here", n)
 Error("wrong type")

@ -1,7 +1,7 @@

 open DistPlusPlotReducer
 let plotBlue = #hex("1860ad")
 
-let showAsForm = (distPlus: DistTypes.distPlus) =>
+let showAsForm = (distPlus: ForetoldAppSquiggle.DistTypes.distPlus) =>
 <div> <Antd.Input value={distPlus.squiggleString |> E.O.default("")} /> </div>
 
 let showFloat = (~precision=3, number) => <NumberShower number precision />

@ -23,27 +23,27 @@ let table = (distPlus, x) =>

 <td className="px-4 py-2 border"> {x |> E.Float.toString |> React.string} </td>
 <td className="px-4 py-2 border ">
 {distPlus
-|> DistPlus.T.xToY(x)
-|> DistTypes.MixedPoint.toDiscreteValue
+|> ForetoldAppSquiggle.DistPlus.T.xToY(x)
+|> ForetoldAppSquiggle.DistTypes.MixedPoint.toDiscreteValue
 |> Js.Float.toPrecisionWithPrecision(_, ~digits=7)
 |> React.string}
 </td>
 <td className="px-4 py-2 border ">
 {distPlus
-|> DistPlus.T.xToY(x)
-|> DistTypes.MixedPoint.toContinuousValue
+|> ForetoldAppSquiggle.DistPlus.T.xToY(x)
+|> ForetoldAppSquiggle.DistTypes.MixedPoint.toContinuousValue
 |> Js.Float.toPrecisionWithPrecision(_, ~digits=7)
 |> React.string}
 </td>
 <td className="px-4 py-2 border ">
 {distPlus
-|> DistPlus.T.Integral.xToY(x)
+|> ForetoldAppSquiggle.DistPlus.T.Integral.xToY(x)
 |> E.Float.with2DigitsPrecision
 |> React.string}
 </td>
 <td className="px-4 py-2 border ">
 {distPlus
-|> DistPlus.T.Integral.sum
+|> ForetoldAppSquiggle.DistPlus.T.Integral.sum
 |> E.Float.with2DigitsPrecision
 |> React.string}
 </td>

@ -61,16 +61,16 @@ let table = (distPlus, x) =>

 <tr>
 <td className="px-4 py-2 border">
 {distPlus
-|> DistPlus.T.toContinuous
-|> E.O.fmap(Continuous.T.Integral.sum)
+|> ForetoldAppSquiggle.DistPlus.T.toContinuous
+|> E.O.fmap(ForetoldAppSquiggle.Continuous.T.Integral.sum)
 |> E.O.fmap(E.Float.with2DigitsPrecision)
 |> E.O.default("")
 |> React.string}
 </td>
 <td className="px-4 py-2 border ">
 {distPlus
-|> DistPlus.T.toDiscrete
-|> E.O.fmap(Discrete.T.Integral.sum)
+|> ForetoldAppSquiggle.DistPlus.T.toDiscrete
+|> E.O.fmap(ForetoldAppSquiggle.Discrete.T.Integral.sum)
 |> E.O.fmap(E.Float.with2DigitsPrecision)
 |> E.O.default("")
 |> React.string}

@ -97,28 +97,28 @@ let percentiles = distPlus =>

 <tbody>
 <tr>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.Integral.yToX(0.01) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.01) |> showFloat}
 </td>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.Integral.yToX(0.05) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.05) |> showFloat}
 </td>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.Integral.yToX(0.25) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.25) |> showFloat}
 </td>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.Integral.yToX(0.5) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.5) |> showFloat}
 </td>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.Integral.yToX(0.75) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.75) |> showFloat}
 </td>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.Integral.yToX(0.95) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.95) |> showFloat}
 </td>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.Integral.yToX(0.99) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.99) |> showFloat}
 </td>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.Integral.yToX(0.99999) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.99999) |> showFloat}
 </td>
 </tr>
 </tbody>

@ -133,11 +133,11 @@ let percentiles = distPlus =>

 </thead>
 <tbody>
 <tr>
-<td className="px-4 py-2 border"> {distPlus |> DistPlus.T.mean |> showFloat} </td>
+<td className="px-4 py-2 border"> {distPlus |> ForetoldAppSquiggle.DistPlus.T.mean |> showFloat} </td>
 <td className="px-4 py-2 border">
-{distPlus |> DistPlus.T.variance |> (r => r ** 0.5) |> showFloat}
+{distPlus |> ForetoldAppSquiggle.DistPlus.T.variance |> (r => r ** 0.5) |> showFloat}
 </td>
-<td className="px-4 py-2 border"> {distPlus |> DistPlus.T.variance |> showFloat} </td>
+<td className="px-4 py-2 border"> {distPlus |> ForetoldAppSquiggle.DistPlus.T.variance |> showFloat} </td>
 </tr>
 </tbody>
 </table>

@ -155,11 +155,11 @@ let adjustBoth = discreteProbabilityMassFraction => {

 
 module DistPlusChart = {
 @react.component
-let make = (~distPlus: DistTypes.distPlus, ~config: chartConfig, ~onHover) => {
-open DistPlus
+let make = (~distPlus: ForetoldAppSquiggle.DistTypes.distPlus, ~config: chartConfig, ~onHover) => {
+open ForetoldAppSquiggle.DistPlus
 
-let discrete = distPlus |> T.toDiscrete |> E.O.fmap(Discrete.getShape)
-let continuous = distPlus |> T.toContinuous |> E.O.fmap(Continuous.getShape)
+let discrete = distPlus |> T.toDiscrete |> E.O.fmap(ForetoldAppSquiggle.Discrete.getShape)
+let continuous = distPlus |> T.toContinuous |> E.O.fmap(ForetoldAppSquiggle.Continuous.getShape)
 
 // // We subtract a bit from the range to make sure that it fits. Maybe this should be done in d3 instead.
 // let minX =

@ -172,12 +172,12 @@ module DistPlusChart = {

 // | _ => None
 // };
 
-let minX = distPlus |> DistPlus.T.Integral.yToX(0.00001)
+let minX = distPlus |> T.Integral.yToX(0.00001)
 
-let maxX = distPlus |> DistPlus.T.Integral.yToX(0.99999)
+let maxX = distPlus |> T.Integral.yToX(0.99999)
 
-let timeScale = distPlus.unit |> DistTypes.DistributionUnit.toJson
-let discreteProbabilityMassFraction = distPlus |> DistPlus.T.toDiscreteProbabilityMassFraction
+let timeScale = distPlus.unit |> ForetoldAppSquiggle.DistTypes.DistributionUnit.toJson
+let discreteProbabilityMassFraction = distPlus |> T.toDiscreteProbabilityMassFraction
 
 let (yMaxDiscreteDomainFactor, yMaxContinuousDomainFactor) = adjustBoth(
 discreteProbabilityMassFraction,

@ -202,13 +202,13 @@ module DistPlusChart = {

 
 module IntegralChart = {
 @react.component
-let make = (~distPlus: DistTypes.distPlus, ~config: chartConfig, ~onHover) => {
+let make = (~distPlus: ForetoldAppSquiggle.DistTypes.distPlus, ~config: chartConfig, ~onHover) => {
 let integral = distPlus.integralCache
-let continuous = integral |> Continuous.toLinear |> E.O.fmap(Continuous.getShape)
-let minX = distPlus |> DistPlus.T.Integral.yToX(0.00001)
+let continuous = integral |> ForetoldAppSquiggle.Continuous.toLinear |> E.O.fmap(ForetoldAppSquiggle.Continuous.getShape)
+let minX = distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.00001)
 
-let maxX = distPlus |> DistPlus.T.Integral.yToX(0.99999)
-let timeScale = distPlus.unit |> DistTypes.DistributionUnit.toJson
+let maxX = distPlus |> ForetoldAppSquiggle.DistPlus.T.Integral.yToX(0.99999)
+let timeScale = distPlus.unit |> ForetoldAppSquiggle.DistTypes.DistributionUnit.toJson
 <DistributionPlot
 xScale={config.xLog ? "log" : "linear"}
 yScale={config.yLog ? "log" : "linear"}

@ -225,7 +225,7 @@ module IntegralChart = {

 
 module Chart = {
 @react.component
-let make = (~distPlus: DistTypes.distPlus, ~config: chartConfig, ~onHover) => {
+let make = (~distPlus: ForetoldAppSquiggle.DistTypes.distPlus, ~config: chartConfig, ~onHover) => {
 let chart = React.useMemo2(
 () =>
 config.isCumulative

@ -246,7 +246,7 @@ module Chart = {

 let button = "bg-gray-300 hover:bg-gray-500 text-grey-darkest text-xs px-4 py-1"
 
 @react.component
-let make = (~distPlus: DistTypes.distPlus) => {
+let make = (~distPlus: ForetoldAppSquiggle.DistTypes.distPlus) => {
 let (x, setX) = React.useState(() => 0.)
 let (state, dispatch) = React.useReducer(DistPlusPlotReducer.reducer, DistPlusPlotReducer.init)
 

@ -95,12 +95,12 @@ let make = (

 ?xScale
 ?yScale
 ?timeScale
-discrete={discrete |> E.O.fmap(XYShape.T.toJs)}
+discrete={discrete |> E.O.fmap(ForetoldAppSquiggle.XYShape.T.toJs)}
 height
 marginBottom=50
 marginTop=0
 onHover
-continuous={continuous |> E.O.fmap(XYShape.T.toJs)}
+continuous={continuous |> E.O.fmap(ForetoldAppSquiggle.XYShape.T.toJs)}
 showDistributionLines
 showDistributionYAxis
 showVerticalLine

@ -1,3 +1,4 @@

+open ForetoldAppSquiggle
 @module("./PercentilesChart.js")
 external percentilesChart: React.element = "PercentilesChart"
 

@ -30,7 +31,7 @@ module Internal = {

 @react.component
 @module("./PercentilesChart.js")
 let make = (~dists: array<(float, DistTypes.distPlus)>, ~children=React.null) => {
-let data = dists |> E.A.fmap(((x, r)) =>
+let data = dists -> Belt.Array.map(((x, r)) =>
 {
 "x": x,
 "p1": r |> DistPlus.T.Integral.yToX(0.01),

@ -20,8 +20,8 @@ let make = (~number, ~precision) => {

 let numberWithPresentation = JS.numberShow(number, precision)
 <span>
 {JS.valueGet(numberWithPresentation) |> React.string}
-{JS.symbolGet(numberWithPresentation) |> E.O.React.fmapOrNull(React.string)}
-{JS.powerGet(numberWithPresentation) |> E.O.React.fmapOrNull(e =>
+{JS.symbolGet(numberWithPresentation) |> R.O.fmapOrNull(React.string)}
+{JS.powerGet(numberWithPresentation) |> R.O.fmapOrNull(e =>
 <span>
 {j`\\u00b710` |> React.string}
 <span style=sup> {e |> E.Float.toString |> React.string} </span>

@ -1,171 +0,0 @@

-// TODO: This setup is more confusing than it should be, there's more work to do in cleanup here.
-module Inputs = {
-module SamplingInputs = {
-type t = {
-sampleCount: option<int>,
-outputXYPoints: option<int>,
-kernelWidth: option<float>,
-shapeLength: option<int>,
-}
-}
-let defaultRecommendedLength = 100
-let defaultShouldDownsample = true
-
-type inputs = {
-squiggleString: string,
-samplingInputs: SamplingInputs.t,
-environment: ExpressionTypes.ExpressionTree.environment,