Merge pull request #12 from foretold-app/gentype-experiment

Begin conversion to rescript and add GenType

@ -1,13 +0,0 @@

open Jest;
open Expect;

describe("Bandwidth", () => {
  test("nrd0()", () => {
    let data = [|1., 4., 3., 2.|];
    expect(Bandwidth.nrd0(data)) |> toEqual(0.7625801874014622);
  });
  test("nrd()", () => {
    let data = [|1., 4., 3., 2.|];
    expect(Bandwidth.nrd(data)) |> toEqual(0.8981499984950554);
  });
});

@ -1,51 +0,0 @@

open Jest;
open Expect;

let makeTest = (~only=false, str, item1, item2) =>
  only
    ? Only.test(str, () =>
        expect(item1) |> toEqual(item2)
      )
    : test(str, () =>
        expect(item1) |> toEqual(item2)
      );

describe("Lodash", () => {
  describe("Lodash", () => {
    makeTest(
      "split",
      SamplesToShape.Internals.T.splitContinuousAndDiscrete([|1.432, 1.33455, 2.0|]),
      ([|1.432, 1.33455, 2.0|], E.FloatFloatMap.empty()),
    );
    makeTest(
      "split",
      SamplesToShape.Internals.T.splitContinuousAndDiscrete([|
        1.432,
        1.33455,
        2.0,
        2.0,
        2.0,
        2.0,
      |])
      |> (((c, disc)) => (c, disc |> E.FloatFloatMap.toArray)),
      ([|1.432, 1.33455|], [|(2.0, 4.0)|]),
    );

    let makeDuplicatedArray = count => {
      let arr = Belt.Array.range(1, count) |> E.A.fmap(float_of_int);
      let sorted = arr |> Belt.SortArray.stableSortBy(_, compare);
      E.A.concatMany([|sorted, sorted, sorted, sorted|])
      |> Belt.SortArray.stableSortBy(_, compare);
    };

    let (_, discrete) =
      SamplesToShape.Internals.T.splitContinuousAndDiscrete(makeDuplicatedArray(10));
    let toArr = discrete |> E.FloatFloatMap.toArray;
    makeTest("splitMedium", toArr |> Belt.Array.length, 10);

    let (c, discrete) =
      SamplesToShape.Internals.T.splitContinuousAndDiscrete(makeDuplicatedArray(500));
    let toArr = discrete |> E.FloatFloatMap.toArray;
    makeTest("splitMedium", toArr |> Belt.Array.length, 500);
  })
});

@ -1,63 +0,0 @@

open Jest;
open Expect;

let makeTest = (~only=false, str, item1, item2) =>
  only
    ? Only.test(str, () =>
        expect(item1) |> toEqual(item2)
      )
    : test(str, () =>
        expect(item1) |> toEqual(item2)
      );

let shape1: DistTypes.xyShape = {xs: [|1., 4., 8.|], ys: [|0.2, 0.4, 0.8|]};

let shape2: DistTypes.xyShape = {
  xs: [|1., 5., 10.|],
  ys: [|0.2, 0.5, 0.8|],
};

let shape3: DistTypes.xyShape = {
  xs: [|1., 20., 50.|],
  ys: [|0.2, 0.5, 0.8|],
};

describe("XYShapes", () => {
  describe("logScorePoint", () => {
    makeTest(
      "When identical",
      XYShape.logScorePoint(30, shape1, shape1),
      Some(0.0),
    );
    makeTest(
      "When similar",
      XYShape.logScorePoint(30, shape1, shape2),
      Some(1.658971191043856),
    );
    makeTest(
      "When very different",
      XYShape.logScorePoint(30, shape1, shape3),
      Some(210.3721280423322),
    );
  });
  // describe("transverse", () => {
  //   makeTest(
  //     "When very different",
  //     XYShape.Transversal._transverse(
  //       (aCurrent, aLast) => aCurrent +. aLast,
  //       [|1.0, 2.0, 3.0, 4.0|],
  //     ),
  //     [|1.0, 3.0, 6.0, 10.0|],
  //   )
  // });
  describe("integrateWithTriangles", () => {
    makeTest(
      "integrates correctly",
      XYShape.Range.integrateWithTriangles(shape1),
      Some({
        xs: [|1., 4., 8.|],
        ys: [|0.0, 0.9000000000000001, 3.3000000000000007|],
      }),
    )
  });
});

docs/.gitignore

@ -0,0 +1,20 @@

# Dependencies
/node_modules

# Production
/build

# Generated files
.docusaurus
.cache-loader

# Misc
.DS_Store
.env.local
.env.development.local
.env.test.local
.env.production.local

npm-debug.log*
yarn-debug.log*
yarn-error.log*

docs/README.md

@ -0,0 +1,41 @@

# Website

This website is built using [Docusaurus 2](https://docusaurus.io/), a modern static website generator.

### Installation

```
$ yarn
```

### Local Development

```
$ yarn start
```

This command starts a local development server and opens up a browser window. Most changes are reflected live without having to restart the server.

### Build

```
$ yarn build
```

This command generates static content into the `build` directory, which can be served using any static content hosting service.

### Deployment

Using SSH:

```
$ USE_SSH=true yarn deploy
```

Not using SSH:

```
$ GIT_USER=<Your GitHub username> yarn deploy
```

If you are using GitHub pages for hosting, this command is a convenient way to build the website and push to the `gh-pages` branch.

docs/babel.config.js

@ -0,0 +1,3 @@

module.exports = {
  presets: [require.resolve('@docusaurus/core/lib/babel/preset')],
};

131

docs/blog/2019-09-05-short-presentation.md

Normal file

|

|

@ -0,0 +1,131 @@

|

||||||

|

---

|

||||||

|

slug: Squiggle-Talk

|

||||||

|

title: The Squiggly language (Short Presentation)

|

||||||

|

authors: ozzie

|

||||||

|

---

|

||||||

|

|

||||||

|

# Multivariate estimation & the Squiggly language

|

||||||

|

*This post was originally published on Aug 2020, on [LessWrong](https://www.lesswrong.com/posts/g9QdXySpydd6p8tcN/sunday-august-16-12pm-pdt-talks-by-ozzie-gooen-habryka-ben). The name of the project has since been changed from Suiggly to Squiggle*

|

||||||

|

|

||||||

|

*(Talk given at the LessWrong Lighting Talks in 2020. Ozzie Gooen is responsible for the talk, Jacob Lagerros and Justis Mills edited the transcript.* [an event on Sunday 16th of August](https://www.lesswrong.com/posts/g9QdXySpydd6p8tcN/sunday-august-16-12pm-pdt-talks-by-ozzie-gooen-habryka-ben))

|

||||||

|

|

||||||

|

|

||||||

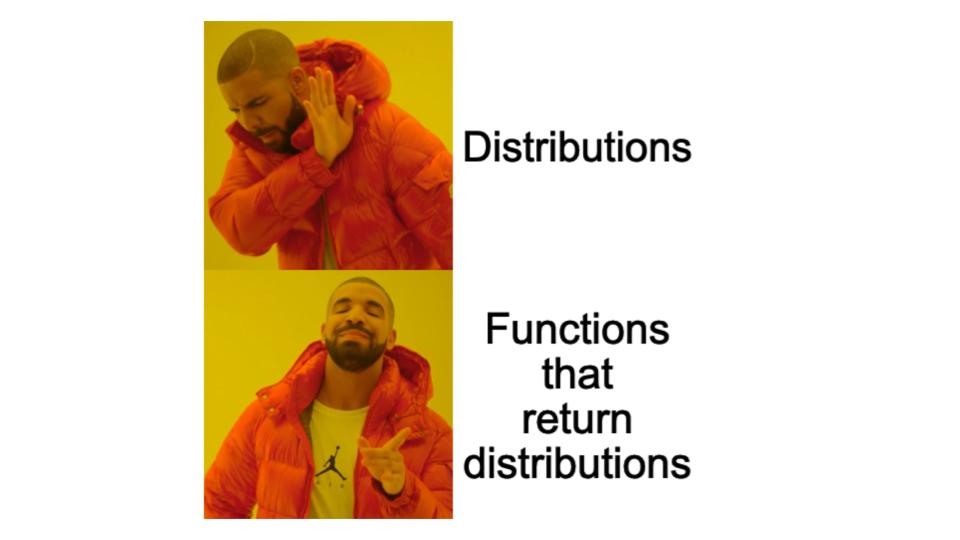

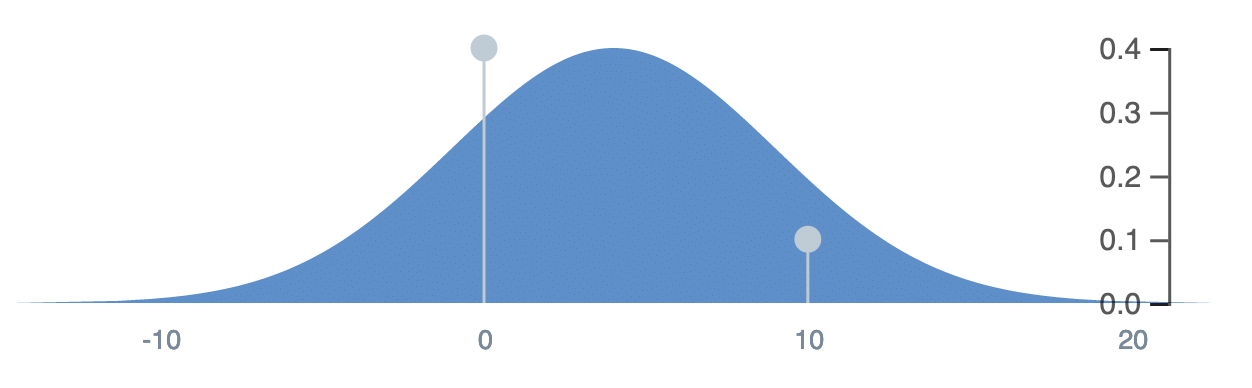

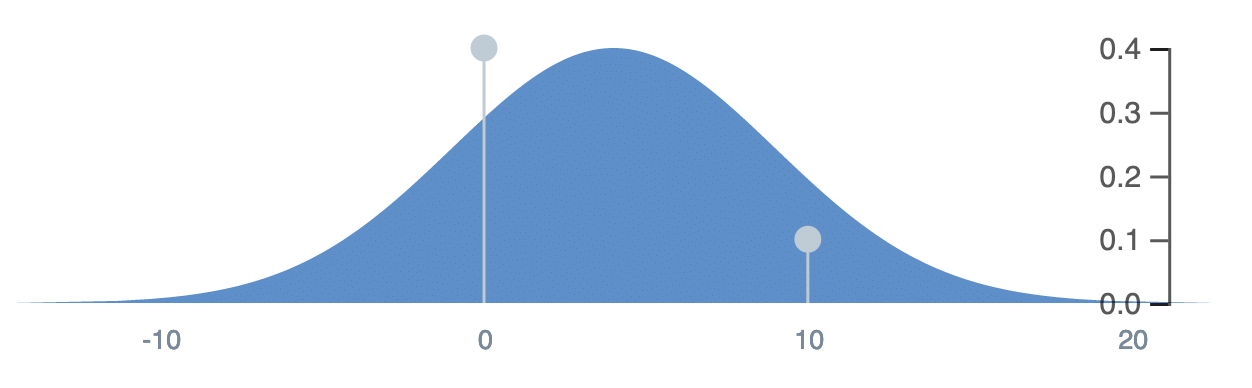

|

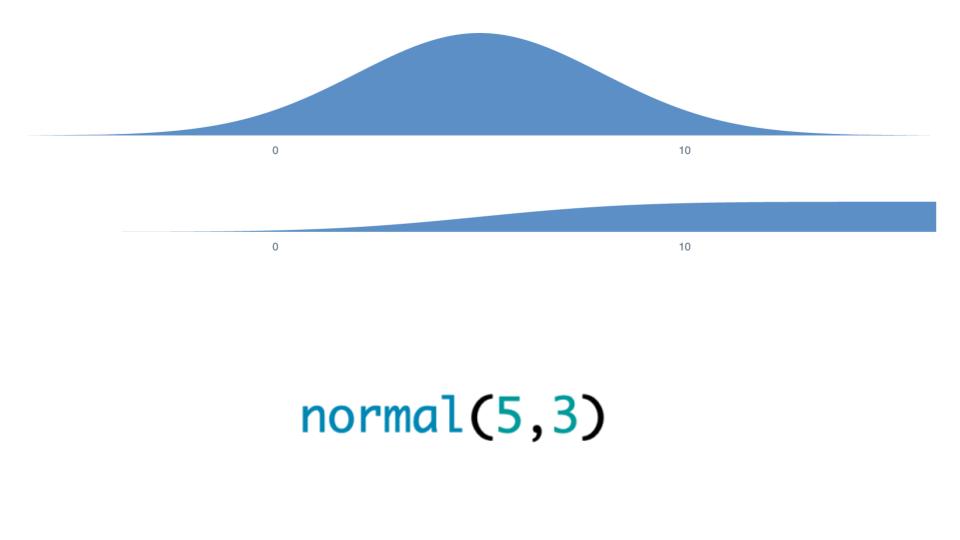

**Ozzie:** This image is my [TLDR](https://en.wikipedia.org/wiki/Wikipedia:Too_long;_didn%27t_read) on probability distributions:

|

||||||

|

|

||||||

|

Basically, distributions are kind of old school. People are used to estimating and predicting them. We don't want that. We want functions that return distributions -- those are way cooler. The future is functions, not distributions.

|

||||||

|

|

||||||

|

<!--truncate-->

|

||||||

|

|

||||||

|

What do I mean by this?

|

||||||

|

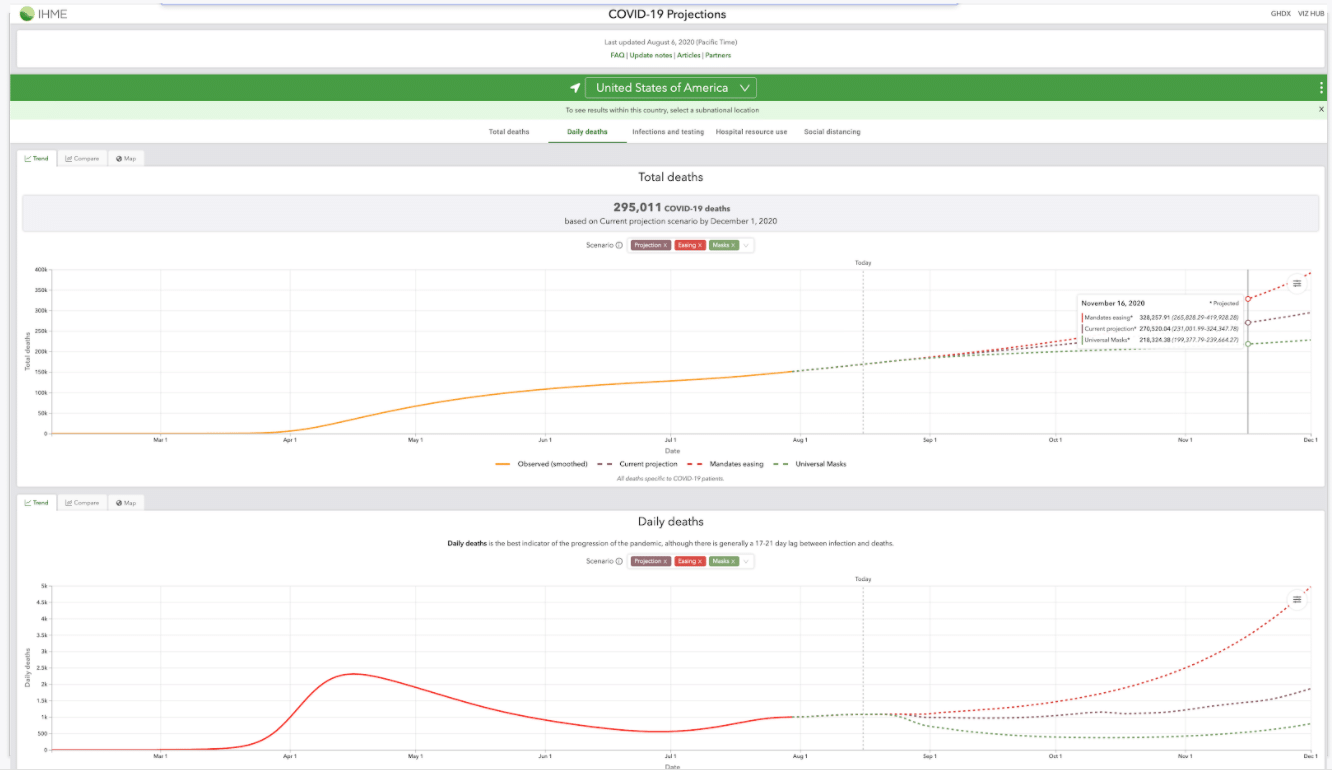

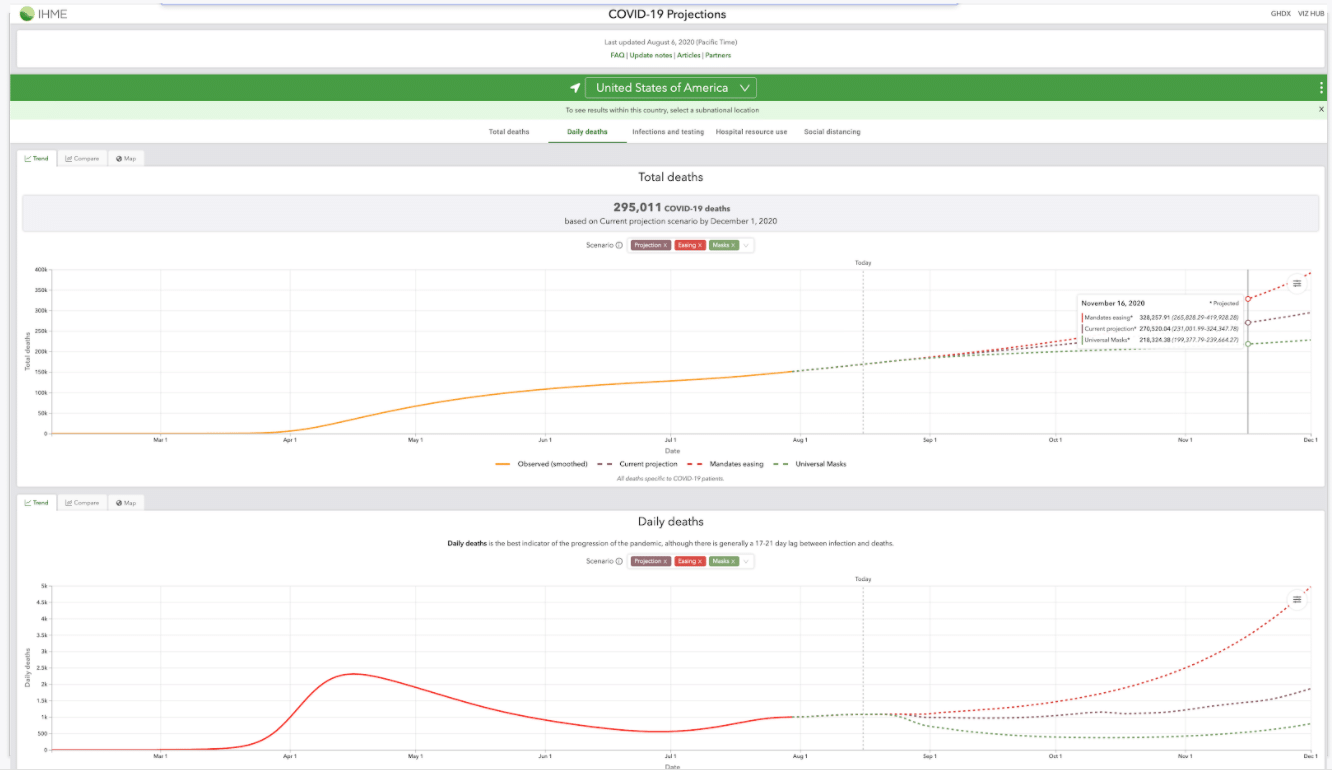

For an example, let's look at some of the existing COVID models. This is one of them, from the IHME:

|

||||||

|

|

||||||

|

You can see that it made projections for total deaths, daily deaths, and a bunch of other variables. And for each indicator, you could choose a country or a location, and it gives you a forecast of what that indicator may look like.

|

||||||

|

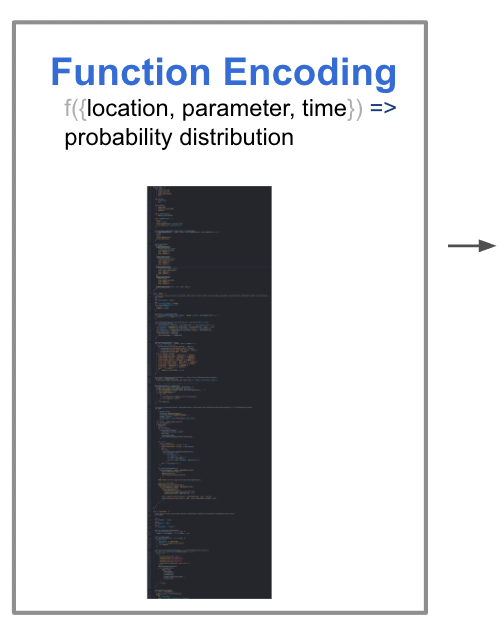

So basically there's some function that for any parameter, which could be deaths or daily deaths or time or whatever, outputs a probability density. That's the core thing that's happening.

|

||||||

|

|

||||||

|

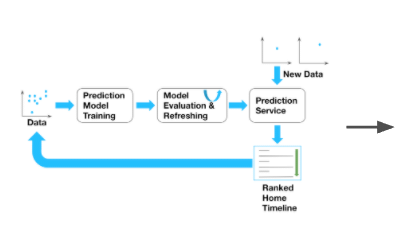

So if you were able to parameterize the model in that way, and format it in these terms, you could basically wrap the function in some encoding. And then do the same forecast, but now using a centralized encoding.

|

||||||

|

So right now, basically for people to make something like the COVID dashboard from before, they have to use this intense output and write some custom GUI. It's a whole custom process. Moreover, it's very difficult to write*your own* function that calls their underlying model.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

But, hypothetically, if we had an encoding layer between the model and the output, these forecasters could basically write the results of their model into one function, or into one big file. Then that file could be interpreted and run on demand. That would be a much nicer format.

|

||||||

|

|

||||||

|

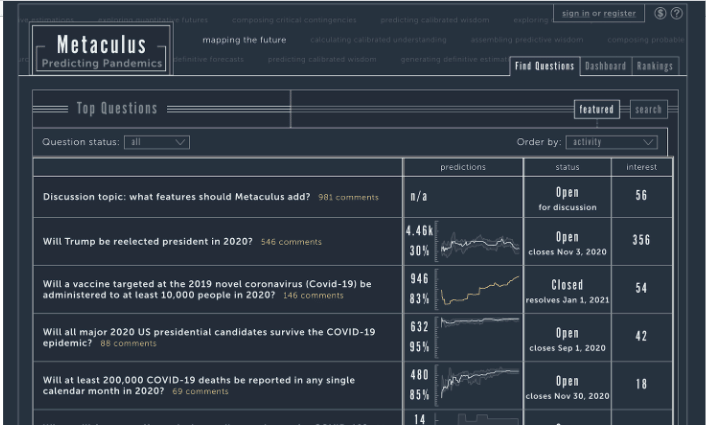

Let’s take a look at Metaculus, which is about the best forecasting platform we have right now.

|

||||||

|

|

||||||

|

|

||||||

|

On Metaculus, everything is a point estimate, which is limiting. In general, it's great that we have good point estimates, but most people don't want to look at this. They’d rather look at the pretty dashboard from before, right?

|

||||||

|

|

||||||

|

So we need to figure out ways of getting our predictors to work together to make things that look more like the pretty graphs. And one of those questions is: how do we get predictors to write functions that return distributions?

|

||||||

|

|

||||||

|

Ultimately, I think this is something that we obviously want. But it is kind of tricky to get there.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

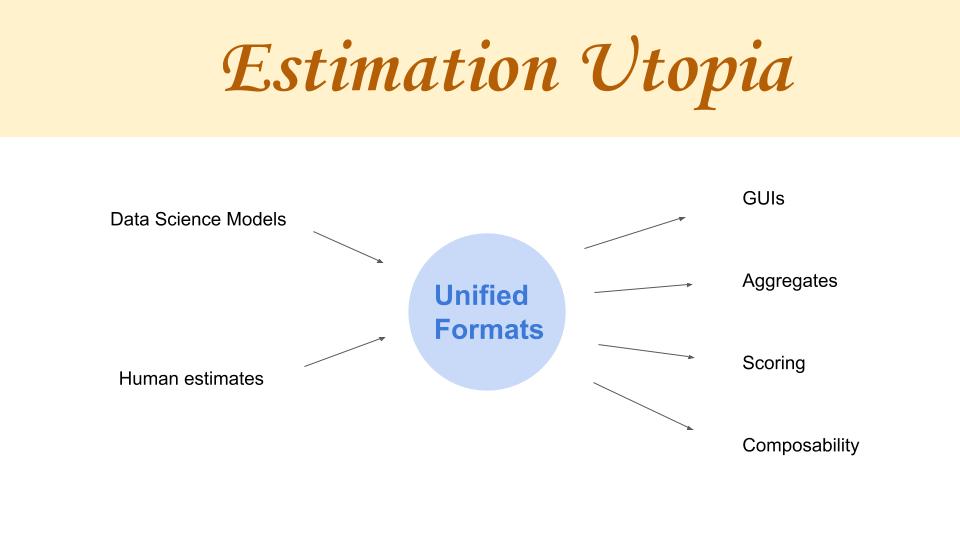

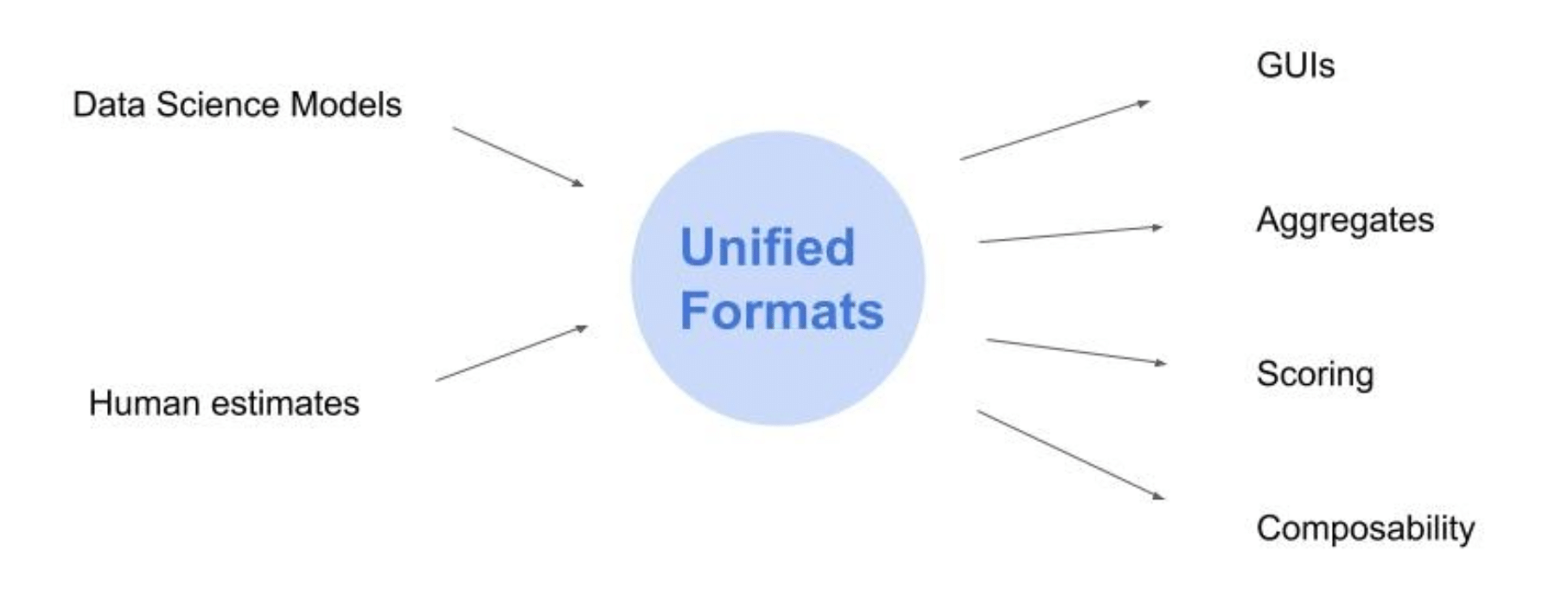

So in Estimation Utopia, as I call it, we’d allow for people to take the results of their data science models and convert them into a unified format. But also, humans could just intuitively go ahead and write in the unified format directly. And if we have unified formats that are portable and could be run in different areas with different programming languages, then it would be very easy to autogenerate GUIs for them, including aggregates which combined multiple models at the same time. We could also do scoring, which is something that is obvious that we want, as well as compose models together.

|

||||||

|

|

||||||

|

So that's why I've been working on the Squiggly language.

|

||||||

|

Let’s look at some quick examples!

|

||||||

|

|

||||||

|

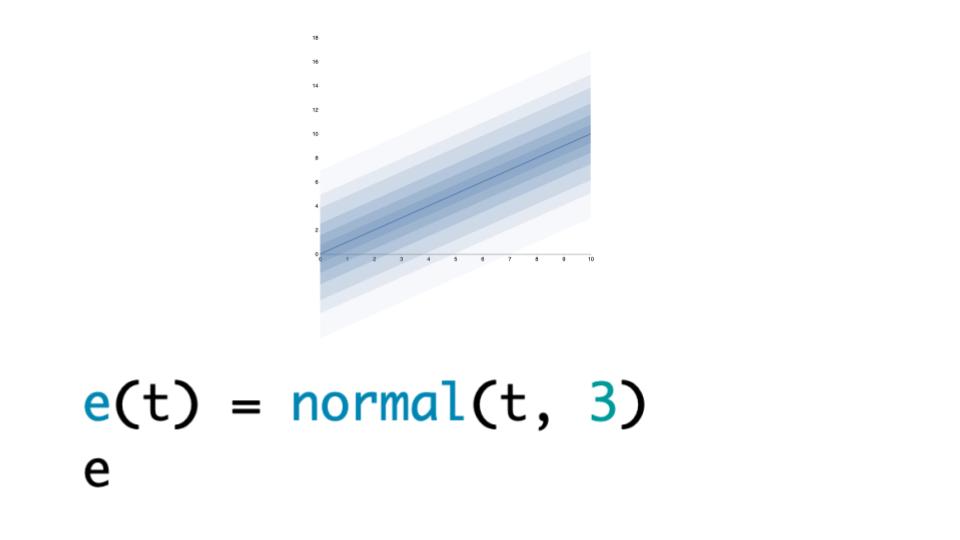

This is a classic normal distribution, but once you have this, some of the challenge is making it as easy as possible to make functions that return distributions.

|

||||||

|

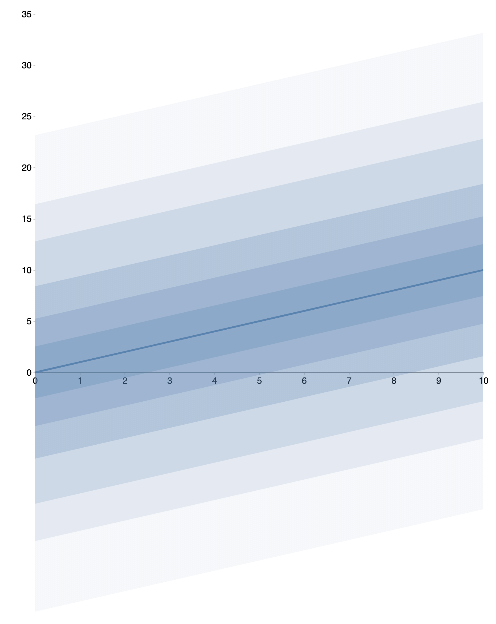

Here's a case for any *t*:

|

||||||

|

|

||||||

|

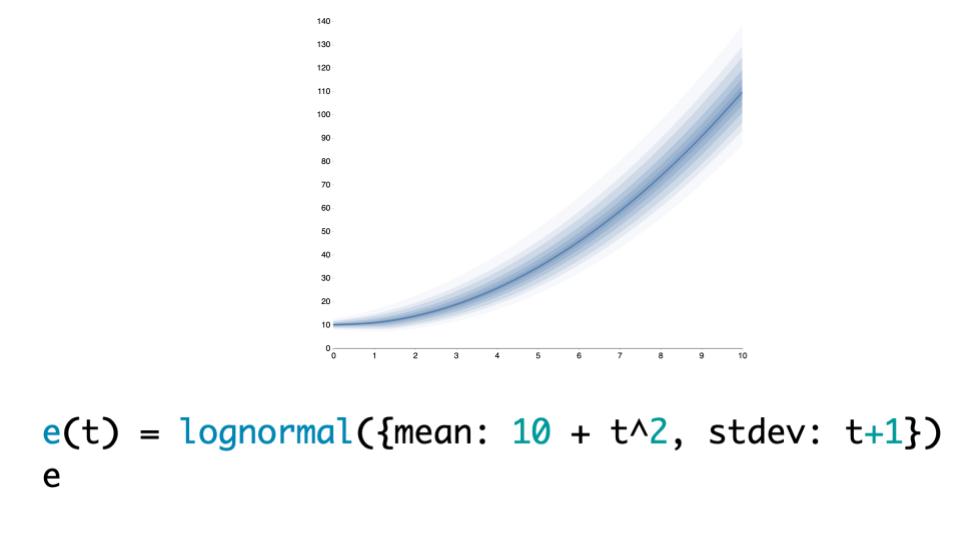

We're going to give you a normal, with *t* as a mean and the standard deviation of 3. This is a plot where it's basically showing bars at each one of the deciles. It gets a bit wider at the end. It's very easy once you have this to just create it for any specific combination of values.
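The JavaScript sketch below illustrates a function that returns a distribution and the decile bars drawn from it. It is not Squiggle's implementation, and the names `prediction`, `normalDeciles`, and `fanChartRows` are hypothetical. The sketch maps any *t* to normal(*t*, 3) and computes the nine decile points a fan chart would draw. It relies on the fact that the *p*-quantile of normal(mean, stdev) is mean + stdev * z_p, with the standard normal quantiles hardcoded to four decimals.

```javascript
// Standard normal quantiles z_p for p = 0.1, 0.2, ..., 0.9, rounded to 4 decimals.
const STANDARD_NORMAL_DECILES = [
  -1.2816, -0.8416, -0.5244, -0.2533, 0, 0.2533, 0.5244, 0.8416, 1.2816,
];

// Hypothetical prediction function: for any t, return normal(t, 3).
function prediction(t) {
  return { type: "normal", mean: t, stdev: 3 };
}

// The p-quantile of normal(mean, stdev) is mean + stdev * z_p.
function normalDeciles({ mean, stdev }) {
  return STANDARD_NORMAL_DECILES.map((z) => mean + stdev * z);
}

// One row of decile bars per input value, as a fan chart would draw them.
function fanChartRows(ts) {
  return ts.map((t) => ({ t, deciles: normalDeciles(prediction(t)) }));
}

// Usage: decile bars for t = 0, 10, 20.
console.log(fanChartRows([0, 10, 20]));
```

In this sketch the bars keep a constant width because the standard deviation is fixed at 3. A standard deviation that depends on *t* would make them widen.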

|

||||||

|

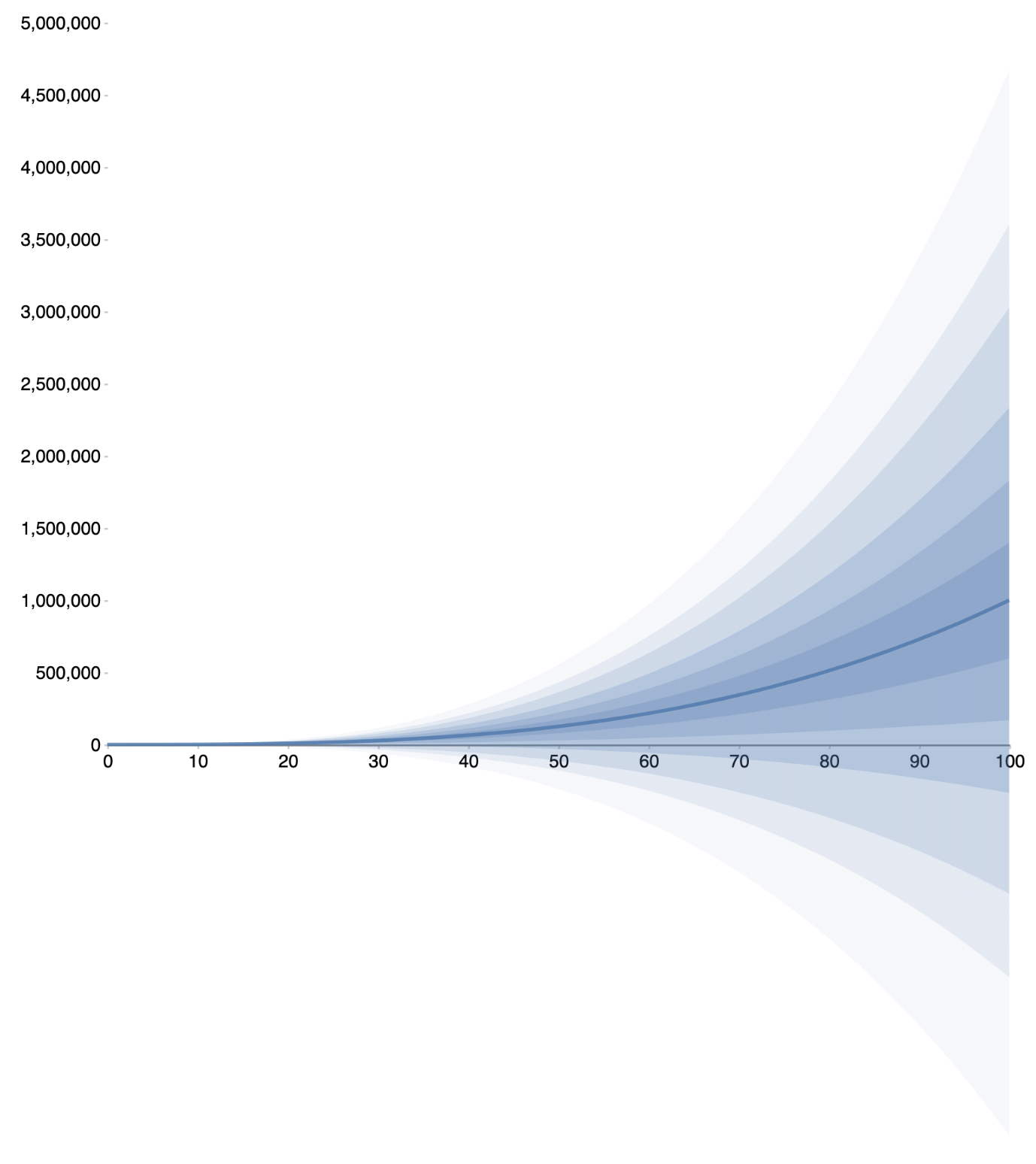

It’s also cool, because once you have it in this format, it’s very easy to combine multiple models. For instance, here’s a lognormal.

|

||||||

|

|

||||||

|

For example, if I have an estimate and my friend Jacob has an estimate, then we could write a function that for every time *t*, basically queries each one of our estimates and gives that as a combined result.

|

||||||

|

This kind of shows you a problem with fan charts, that they don’t show the fact that all the probability amasses on the very top and the very bottom. That’s an issue that we’ll get over soon. Here’s what it looks like if I aggregate my model with Jacob’s.
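The talk doesn't say how the two estimates are combined. One common choice is an equal-weight mixture (a linear opinion pool): at each *t*, the combined density is the average of the individual densities. The JavaScript sketch below assumes that choice. `ozzieEstimate`, `jacobEstimate`, and their parameters are hypothetical and invented only for illustration.

```javascript
// Density of normal(mean, stdev).
function normalPdf(x, mean, stdev) {
  const z = (x - mean) / stdev;
  return Math.exp(-0.5 * z * z) / (stdev * Math.sqrt(2 * Math.PI));
}

// Density of a lognormal whose logarithm is normal(mu, sigma).
function lognormalPdf(x, mu, sigma) {
  if (x <= 0) return 0;
  return normalPdf(Math.log(x), mu, sigma) / x;
}

// Hypothetical estimates: each maps a time t to a density function over outcomes x.
const ozzieEstimate = (t) => (x) => normalPdf(x, t, 3);
const jacobEstimate = (t) => (x) => lognormalPdf(x, Math.log(t + 1), 0.5);

// Mixture: for each t, query every estimate and take a weighted average of the densities.
function aggregate(estimates, weights = estimates.map(() => 1 / estimates.length)) {
  return (t) => (x) =>
    estimates.reduce((sum, estimate, i) => sum + weights[i] * estimate(t)(x), 0);
}

const combined = aggregate([ozzieEstimate, jacobEstimate]);
console.log(combined(5)(6)); // combined density at t = 5, x = 6
```

Passing unequal `weights` would give more say to one forecaster. Other pooling rules, such as geometric averaging, are also possible.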

## Questions

**Raemon:**

I had a little bit of excitement, and then fear, and then excitement again, when you talked about a unified format. The excitement was like, “Ah, a unified format, that sounds nice.” Then I had an image of all of the giant coordination problems that result from failed attempts to create a new unified format, where the attempted unified format becomes [yet another distinct format](https://xkcd.com/927/) among all the preexisting options.

Then I got kind of excited again because to a first approximation, as far as I can tell, in the grand scheme of things currently, approximately zero people use prediction markets. You might actually be able to figure out the right format and get it right the first time. You also might run into the same problems that all the other people who tried to come up with unified formats did, which was that it was hard to figure that out right at the beginning. Maybe now I am scared again. Do you have any thoughts on this?

**Ozzie:**

Yeah, I’d say in this case, I think there’s no format that does this type of thing yet. This is a pretty unexplored space. Of course, writing the first format in a space is kind of scary, right? Maybe I should spend a huge amount of time making it great, because maybe it’ll lock in. Maybe I should just iterate. I’m not too sure what to do there.

And there are also a few different ways that the format could go. I don’t know who it’s going to be the most useful for, which will be important. But right now, I’m just experimenting and seeing what’s good for small communities. Well, specifically what’s good for me.

**Raemon:**

Yeah, you can build the thing that seems good for you. That seems good. If you get to a point where you want to scale it up, making sure that whatever you’re scaling up is reasonably flexible or something might be nice. I don’t know.

**Ozzie:**

Yeah. Right now, I’m aiming for something that’s good at a bunch of things but not that great at any one of them. I’m also very curious to get outside opinions. Hopefully people could start playing with this, and I can get their thoughts.

- - - -

**habryka:**

This feels very similar to [Guesstimate](https://www.getguesstimate.com/), which you also built, just in a programming language as opposed to a visual language. How does this project differ?

**Ozzie:**

Basically, you could kind of think about this as “Guesstimate: The Language”. But it does come with a lot of advantages. The main one is that you could write functions. With Guesstimate you couldn’t write functions. That was a gigantic limitation!

Really, a lot of Squiggly is me trying to make up for my sins with Guesstimate. With Guesstimate, if one person makes a model of the damage from bicycling, like the micromorts that they’re taking when they bike, that model only works for them. If you wanted to go and configure it to match your situation, you’d have to go in and modify it manually. It’s actually very difficult to port these models. If one person writes a good model, it’s hard for somebody else to copy and paste it, hopefully into another programming tool. It’s not very portable.

So I think these new features are pretty fundamental. I think that this is a pretty big step in the right direction. In general, text-based solutions have a lot of benefits when you can use them, but it is kind of tricky to use them.

- - - -

**Johnswentworth:**

I’m getting sort of mixed vibes about what exactly the use case here is. If we’re thinking of this as a sort of standard for representing models, then I should be able to convert models in other formats, right? Like, if I have a model in Excel or I have a model in [Pyro](https://pyro.ai/), then there should be some easy way to turn it into this standard format?

On the other hand, if we’re trying to create a language in which people write models, then that’s a whole different use case where being a standard isn’t really part of it at all (instead it looks more like the actual UI you showed us).

So I’m sort of not sure what the picture is in your head for how someone is actually going to use this and what it’s going to do for them, or what the value add is compared to Excel or Pyro.

**Ozzie:** Yeah, great question. So I would say that I’d ideally have both data scientists and judgemental forecasters trying to use it, and those are two very distinct types of use cases, as you mentioned. It’s very possible that they both want their own ideal format, and it doesn’t make sense to have one format for the two of them. I’m excited for users who don’t have any way of making these methods intuitively at the moment.

Suppose, for example, that you’re trying to forecast the GDP of the US for each year in the coming decades.

Step one is making sure that, basically, people on Metaculus or other existing forecasting platforms could be writing functions using this language and then submitting those instead of just submitting point forecasts. So you’d be able to say “given as input a specific year, and some other parameters, output this distribution”, instead of having to make a new and separate forecast for each and every year. Then the whole rest of the forecasting pipeline would work with that (e.g. scoring, visualisations, and so forth).

When you do that, though, it is pretty easy to take some results from other, more advanced tools, and put them into probably very simple functions. So, for instance, if there is a distribution over time (as in the GDP example), that may be something they could interpolate with a few different points. There could be some very simple setups where you take your Pyro model or something that actually did some intense equations, and then basically put the results into this very simple function that just interpolates based on them and then uses this new format.

**Johnswentworth:**

What would be the advantage of that?

**Ozzie:**

It’s complicated. If you made your model in Pyro and you wanted to then export it and allow someone to play with it, that could be a tricky thing, because your Pyro model might be computationally expensive to run. As opposed to trying to export a representation that is basically a combination of a CSV and a light wrapper function. And then people run that, which is more convenient and facilitates more collaboration.

**Johnswentworth:**

Why would people run that though? Why do people want that compressed model?

**Ozzie:**

I mean, a lot of the COVID models are like that, where basically the *running* of the simulation was very time intensive and required one person’s whole PC. But it would still be nice to be able to export the *results* of that and then make those interactable, right?

**Johnswentworth:**

Oh, I see. Okay, I buy that.

**Ozzie:**

I also don’t want to have to redo all of the Pyro work in this language. It’s way too much.

**Johnswentworth:**

Usually, when I’m thinking about this sort of thing, and I look at someone’s model, I really want to know what the underlying gears were behind it. Which is exactly the opposite of what you’re talking about. So it’s just a use case that I’m not used to thinking through. But I agree, it does make sense.
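Ozzie's "CSV plus a light wrapper function" idea might look something like the JavaScript sketch below, combined with his earlier suggestion of interpolating between a few points. The CSV values are made-up numbers standing in for an expensive model's output. `parseRows`, `makeForecast`, and `gdpForecast` are hypothetical names. The normal shape and the linear interpolation of its parameters are also assumptions for illustration, not anything Squiggle prescribes.

```javascript
// Hypothetical precomputed results (invented numbers), e.g. exported from an expensive simulation.
const csv = `year,mean,stdev
2020,21.4,0.5
2025,24.0,1.5
2030,26.5,3.0`;

// Parse "year,mean,stdev" rows into numeric records, sorted by year.
function parseRows(text) {
  const [, ...lines] = text.trim().split("\n");
  return lines
    .map((line) => line.split(",").map(Number))
    .map(([year, mean, stdev]) => ({ year, mean, stdev }))
    .sort((a, b) => a.year - b.year);
}

// Light wrapper: linearly interpolate the normal's parameters between known years.
function makeForecast(rows) {
  return (year) => {
    const first = rows[0];
    const last = rows[rows.length - 1];
    if (year < first.year || year > last.year) {
      throw new RangeError(`year ${year} is outside [${first.year}, ${last.year}]`);
    }
    // Find the segment [lo, hi] that contains the requested year.
    let i = 0;
    while (i < rows.length - 2 && year > rows[i + 1].year) i++;
    const lo = rows[i];
    const hi = rows[Math.min(i + 1, rows.length - 1)];
    const w = hi.year === lo.year ? 0 : (year - lo.year) / (hi.year - lo.year);
    return {
      type: "normal",
      mean: lo.mean + w * (hi.mean - lo.mean),
      stdev: lo.stdev + w * (hi.stdev - lo.stdev),
    };
  };
}

const gdpForecast = makeForecast(parseRows(csv));
console.log(gdpForecast(2027.5)); // { type: 'normal', mean: 25.25, stdev: 2.25 }
```

The expensive model runs once, offline. Anyone can then evaluate the small table-plus-wrapper instantly for any year in range.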

- - - -

**habryka:**

Why call the language Squiggly? There was a surprising lack of squiggles in the language. I was like, “Ah, it makes sense, you just use the squiggles as the primary abstraction”, but then you showed me your code editor and there were no squiggles, and I was very disappointed.

**Ozzie:**

Yeah, so I haven’t written my own parser yet. I’ve been using the one from math.js. When I write my own, it’s possible I’ll add squiggles. I also am just really unsure about the name.

docs/blog/2019-11-25-technical-overview.md

@ -0,0 +1,148 @@

---
slug: technical-overview
title: Technical Overview
authors: ozzie
---

# Squiggle Technical Overview

This piece is meant to be read after [Squiggle: An Overview](https://www.lesswrong.com/posts/i5BWqSzuLbpTSoTc4/squiggle-an-overview). It includes technical information I thought best separated out for readers familiar with coding. As such, it’s a bit of a grab-bag. It explains the basic internals of Squiggle, outlines ways it could be used in other programming languages, and details some of the history behind it.

The Squiggle codebase is organized in [this GitHub repo](https://github.com/foretold-app/squiggle). It’s open source. The code is quite messy now, but do ping me if you’re interested in running it or understanding it.

## Project Subcomponents

I think of Squiggle in three distinct clusters.

1. A high-level ReasonML library for probability distributions.
2. A simple programming language.
3. Custom visualizations and GUIs.

### 1. A high-level ReasonML library for probability distribution functions

Python has some great libraries for working with probabilities and symbolic mathematics. Javascript doesn’t. Squiggle is to be run in Javascript (for interactive editing and use), so the first step for this is to have good libraries to do the basic math.

The second step is to have high-level types that could express various types of distributions and functions of distributions. For example, some distributions have symbolic representations, and others are rendered (stored as x-y coordinates). These two types have to be dealt with separately. Squiggle also has limited support for continuous and discrete mixtures, and the math for this adds more complexity.
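The small JavaScript sketch below makes the symbolic/rendered split concrete. These are not Squiggle's actual types; the `kind` tags and function names are hypothetical. A symbolic normal is evaluated from its closed-form formula. A rendered one is stored as `xs`/`ys` arrays, like the `xyShape` records in the test file above, and can only be evaluated by interpolating between stored points. That is why every operation has to dispatch on the representation.

```javascript
// Hypothetical representation tags, not Squiggle's actual types.
// Symbolic: a named distribution plus its parameters (only normal is handled here).
const symbolic = { kind: "symbolic", dist: "normal", mean: 0, stdev: 1 };

// Closed-form density of a symbolic normal.
function symbolicPdf({ mean, stdev }, x) {
  const z = (x - mean) / stdev;
  return Math.exp(-0.5 * z * z) / (stdev * Math.sqrt(2 * Math.PI));
}

// Render a symbolic normal to n evenly spaced x-y points on [lo, hi].
function render(dist, lo, hi, n) {
  const xs = Array.from({ length: n }, (_, i) => lo + ((hi - lo) * i) / (n - 1));
  return { kind: "rendered", xs, ys: xs.map((x) => symbolicPdf(dist, x)) };
}

// Rendered: linear interpolation between stored points, 0 outside them.
function renderedPdf({ xs, ys }, x) {
  if (x < xs[0] || x > xs[xs.length - 1]) return 0;
  let i = 0;
  while (i < xs.length - 2 && x > xs[i + 1]) i++;
  const t = (x - xs[i]) / (xs[i + 1] - xs[i]);
  return ys[i] + t * (ys[i + 1] - ys[i]);
}

// Every operation has to dispatch on the representation.
function pdf(dist, x) {
  return dist.kind === "symbolic" ? symbolicPdf(dist, x) : renderedPdf(dist, x);
}

const rendered = render(symbolic, -5, 5, 101);
console.log(pdf(symbolic, 0.05), pdf(rendered, 0.05)); // close, but not identical
```

Rendering trades exactness for generality. The rendered form can represent shapes with no closed form, but it loses accuracy between points and beyond its range.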

When it comes to performing functions on expressions, there’s a lot of optimization necessary for this to go smoothly.

Say you were to write the function,
```
multimodal(normal(5,2), normal(10,1) + uniform(1,10)) * 100
```

You’d want to apply a combination of symbolic, numeric, and sampling techniques in order to render this equation. In this case, Squiggle would perform sampling to compute the distribution of normal(10,1) + uniform(1,10) and then it would use numeric methods for the rest of the equation. In the future, it would be neat if Squiggle would also first symbolically modify the internal distributions to be multiplied by 100, rather than performing it as a separate numeric step.
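The JavaScript sketch below shows two of the techniques mentioned. First, it estimates normal(10,1) + uniform(1,10) by Monte Carlo sampling. Second, it scales a normal by 100 symbolically, using the identity k * normal(mean, stdev) = normal(k * mean, |k| * stdev). The seeded generator (mulberry32), the Box-Muller sampler, and all function names are stand-ins chosen so the example is reproducible. None of them are taken from Squiggle's code.

```javascript
// Stand-in helper (not from Squiggle): mulberry32, a small seeded PRNG returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Box-Muller transform: one normal(mean, stdev) draw from two uniforms.
function sampleNormal(rand, mean, stdev) {
  const u1 = 1 - rand(); // in (0, 1], so Math.log(u1) is finite
  const u2 = rand();
  return mean + stdev * Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
}

function sampleUniform(rand, lo, hi) {
  return lo + (hi - lo) * rand();
}

// Sampling step: draw n samples of normal(10,1) + uniform(1,10).
function sampleSum(n, seed = 42) {
  const rand = mulberry32(seed);
  const out = new Array(n);
  for (let i = 0; i < n; i++) {
    out[i] = sampleNormal(rand, 10, 1) + sampleUniform(rand, 1, 10);
  }
  return out;
}

// Symbolic step: k * normal(mean, stdev) = normal(k * mean, |k| * stdev), no sampling needed.
function scaleNormal({ mean, stdev }, k) {
  return { mean: k * mean, stdev: Math.abs(k) * stdev };
}

const mean = (xs) => xs.reduce((s, x) => s + x, 0) / xs.length;

console.log(mean(sampleSum(100000))); // close to 10 + 5.5 = 15.5
console.log(scaleNormal({ mean: 5, stdev: 2 }, 100)); // normal(500, 200), exactly
```

The sampled result is only approximate and costs time proportional to the sample count. The symbolic scaling is exact and essentially free, which is why pushing the multiplication by 100 into the inner distributions is attractive.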

These type-dependent function operations can be confusing to users, but hopefully less confusing than having to figure out each of the three techniques and apply them separately. I imagine there could be some debugging UI to better explain what operations are performed.

### 2. Simple programming language functionality

It can be useful to think of Squiggle as similar to SQL, Excel, or Probabilistic Programming Languages like [WebPPL](http://webppl.org/). There are simple ways to declare variables and write functions, but don’t expect to use classes, inheritance, or monads. There are no for loops, though it will probably have some kinds of reduce() methods in the future.

So far the parsing is done with MathJS, meaning we can’t change the syntax. I’m looking forward to doing so and have been thinking about what it should be like. One idea I’m aiming for is to allow for simple dependent typing for the sake of expressing functions that are only defined on limited domains. For instance,

```
myFunction(t: [float from 0 to 30]) = normal(t,10)
myFunction
```

This function would return an error if called with a float less than 0 or greater than 30. I imagine that many prediction functions would only be estimated for limited domains.

With some introspection it should be possible to auto-generate calculator-like interfaces.
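Squiggle doesn't have this syntax yet, so the following is only a JavaScript approximation of the idea. A hypothetical `withDomain` helper attaches a declared domain to a prediction function and throws a `RangeError` for inputs outside it. It also exposes the domain as metadata. A hypothetical `calculatorFields` function then introspects that metadata to produce calculator-style input fields.

```javascript
// Hypothetical helper: wrap `body` so it only accepts inputs inside declared numeric ranges.
function withDomain(name, params, body) {
  const fn = (...args) => {
    params.forEach((p, i) => {
      const v = args[i];
      if (!Number.isFinite(v) || v < p.min || v > p.max) {
        throw new RangeError(`${name}: ${p.name}=${v} is outside [${p.min}, ${p.max}]`);
      }
    });
    return body(...args);
  };
  fn.meta = { name, params }; // kept for introspection
  return fn;
}

// Rough analogue of: myFunction(t: [float from 0 to 30]) = normal(t,10)
const myFunction = withDomain("myFunction", [{ name: "t", min: 0, max: 30 }], (t) => ({
  type: "normal",
  mean: t,
  stdev: 10,
}));

// Hypothetical introspection: turn the declared domain into calculator-style input fields.
function calculatorFields(fn) {
  return fn.meta.params.map((p) => ({ label: p.name, type: "number", min: p.min, max: p.max }));
}

console.log(myFunction(12));
console.log(calculatorFields(myFunction));
```

Because the domain is data rather than hidden inside the function body, a UI can render sliders or bounded number inputs without running the model first.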

### 3. Visualizations and GUIs

The main visualizations need to be made from scratch because there’s little out there now in terms of quality open-source visualizations of probability distributions and similar. This is especially true for continuous and discrete mixtures. D3 seems like the main library here, and D3 can be gnarly to write and maintain.

Right now we’re using a basic [Vega](https://vega.github.io/) chart for the distribution over a variable, but this will be replaced later.

In the near term, I’m interested in making calculator-like user interfaces of various kinds. I imagine one prediction function could be used for many kinds of calculator interfaces.

## Deployment Story, or, Why Javascript?

Squiggle is written in ReasonML, which compiles to Javascript. The obvious alternative is Python. Less obvious but interesting options are Mathematica or Rust via WebAssembly.

The plan for Squiggle is to prioritize small programs that could be embedded in other programs and run quickly. Perhaps there will be 30 submissions for a “Covid-19 over time per location” calculator, and we’d want to run them in parallel in order to find the average answer or to rank them. I could imagine many situations where it would be useful to run these functions for many different inputs; for example, for various kinds of sensitivity analysis.
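The JavaScript sketch below illustrates running many submissions over many inputs. It evaluates a few hypothetical submissions, each a function from a week number to a normal distribution. It averages their means per input and ranks them by total log score against observed outcomes. The submissions, the observations, and the choice of the logarithmic scoring rule are all assumptions made for this example.

```javascript
// Log density of normal(mean, stdev) at x.
function normalLogPdf(x, mean, stdev) {
  const z = (x - mean) / stdev;
  return -0.5 * z * z - Math.log(stdev * Math.sqrt(2 * Math.PI));
}

// Hypothetical submissions: each maps an input (e.g. a week number) to a normal.
const submissions = {
  alice: (week) => ({ mean: 100 + 10 * week, stdev: 20 }),
  bob: (week) => ({ mean: 120 + 5 * week, stdev: 40 }),
  carol: (week) => ({ mean: 90 + 12 * week, stdev: 10 }),
};

// Average of the submitted means at each input, as a crude aggregate.
function averageMean(subs, inputs) {
  const fns = Object.values(subs);
  return inputs.map((x) => fns.reduce((s, f) => s + f(x).mean, 0) / fns.length);
}

// Rank submissions by total log score against observed outcomes (higher is better).
function rankByLogScore(subs, observations) {
  return Object.entries(subs)
    .map(([name, f]) => ({
      name,
      score: observations.reduce((s, { input, value }) => {
        const d = f(input);
        return s + normalLogPdf(value, d.mean, d.stdev);
      }, 0),
    }))
    .sort((a, b) => b.score - a.score);
}

const observations = [{ input: 1, value: 110 }, { input: 2, value: 120 }];
console.log(averageMean(submissions, [1, 2, 3]));
console.log(rankByLogScore(submissions, observations));
```

The log score rewards confident forecasts only when they land close to the outcome. Here the narrow but slightly-off submission can still outrank one whose mean was exactly right but much wider.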

|

||||||

|

|

||||||

|

One nice-to-have feature would be functions that call other functions. Perhaps a model of your future income levels depends on some other aggregated function of the S&P 500, which further depends on models of potential tail risks to the economy. If this were the case you would want to have those model dependencies be easily accessible. This could be done via downloading or having a cloud API to quickly call them remotely.

|

||||||

|

|

||||||

|

Challenges like these require some programmatic architecture where functions can be fully isolated/sandboxed and downloaded and run on the fly. There are very few web application infrastructures aimed to do things like this, I assume in part because of the apparent difficulty.

|

||||||

|

|

||||||

|

Python is open source and has the most open-source tooling for probabilistic work. Ought’s [Ergo](https://github.com/oughtinc/ergo) is in Python, and their Elicit uses Ergo (I believe). [Pyro](https://pyro.ai/) and [Edward](http://edwardlib.org/) , two of the most recent and advanced probabilistic programming languages, are accessible in Python. Generally, Python is the obvious choice.

|

||||||

|

|

||||||

|

Unfortunately, the current tooling to run small embedded Python programs, particularly in the browser, is quite mediocre. There are a few attempts to bring Python directly to the browser, like [Pyrodide](https://hacks.mozilla.org/2019/04/pyodide-bringing-the-scientific-python-stack-to-the-browser/) , but these are quite early and relatively poorly supported. If you want to run a bunch of Python jobs on demand, you could use Serverless platforms like [AWS Lambda](https://aws.amazon.com/lambda/) or something more specialized like [PythonAnywhere](https://www.pythonanywhere.com/) . Even these are relatively young and raise challenges around speed, cost, and complexity.

|

||||||

|

|

||||||

|

I’ve looked a fair bit into various solutions. I think that for at least the next 5 to 15 years, the Python solutions will be challenging to run as conveniently as Javascript solutions would. For this time it’s expected that Python will have to run in separate servers, and this raises issues of speed, cost, and complexity.

|

||||||

|

|

||||||

|

At [Guesstimate](https://www.getguesstimate.com/) , we experimented with solutions that had sampling running on a server and found this to hurt the experience. We tested latency of around 40ms to 200ms. Being able to see the results of calculations as you type is a big deal and server computation prevented this. It’s possible that newer services with global/local server infrastructures could help here (as opposed to setups with only 10 servers spread around globally), but it would be tricky. [Fly.io](https://fly.io/) launched in the last year, maybe that would be a decent fit for near-user computation.

|

||||||

|

|

||||||

|

Basically, at this point, it seems important that Squiggle programs could be easily imported and embedded in the browser and servers, and for this, Javascript currently seems like the best bet. Javascript currently has poor support for probability, but writing our own probability libraries is more feasible than making Python portable. All of the options seem fairly mediocre, but Javascript a bit less so.

|

||||||

|

|

||||||

|

Javascript obviously runs well in the browser, but its versatility is greater than that. [Observable](https://observablehq.com/) and other in-browser Javascript coding platforms load in [NPM](https://www.npmjs.com/) libraries on the fly to run directly in the browser, which demonstrates that such functionality is possible. It’s [possible](https://code.google.com/archive/p/pyv8/) (though I imagine a bit rough) to call Javascript programs from Python.

|

||||||

|

|

||||||

|

ReasonML compiles to OCaml before it compiles to Javascript. I’ve found it convenient for writing complicated code and am now hesitant to go back to a dynamic, non-functional language. There’s definitely a lot to do (the existing Javascript support for math is very limited), but at least there are decent approaches to doing it.

I imagine the landscape will change a lot in the next 3 to 10 years. I’m going to continue to keep an eye on the space. If things change I could very much imagine pursuing a rewrite, but I think it will be a while before any change seems obvious.


## Using Squiggle with other languages


Once the basics of Squiggle are set up, it could be used to describe the results of models that come from other programs. Just as many programming languages have ORMs that generate custom SQL statements, similar tools could be made to generate Squiggle functions. The important thing to grok is that Squiggle functions are information to be submitted, not just internally useful tools. If there were an API that accepted “predictions”, people would submit Squiggle code snippets directly to it.

*I’d note here that I find it somewhat interesting how few public APIs accept code snippets. I could imagine a version of Facebook where you could submit a Javascript function that would take in information about a post and return a number used to rank it in your feed. This kind of functionality seems like it could be very powerful. My impression is that it’s currently thought to be too hard to do with existing technologies. This of course is not a good sign for the feasibility of my proposal here, but this course seems like a necessary one to take at some point.*

### Example #1:


Say you calculate a few parameters, but know they represent a multimodal combination of a normal distribution and a uniform distribution. You want to submit that as your prediction or estimate via the API of Metaculus or Foretold. In Javascript, you could write that as:

```
// assumes `norm` ({mean, stdev}) and `uni` ({max}) were computed earlier
var squiggleValue = `mm(normal(${norm.mean}, ${norm.stdev}), uniform(0, ${uni.max}))`
```

The alternative is to send a bunch of X-Y coordinates representing the distribution, but this isn’t good. It would require you to load the necessary library, do all the math on your end, and then send the server a representation that is both approximate and much longer.

With Squiggle, you don’t need to calculate the shape of the function in your code, you just need to express it symbolically and send that off.

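The string template above is the simplest version of the ORM-like idea. A slightly sturdier sketch, using hypothetical helper names (`squiggleNumber`, `normalUniformMixture`), validates each number before interpolating it, so a missing or `NaN` parameter fails locally instead of producing a malformed Squiggle string. It assumes Squiggle accepts numbers in Javascript's default `String()` formatting and the optional `[weights]` array form used in the `multimodal` examples.

```js
// Hypothetical helper: format a number for a Squiggle expression, rejecting NaN/undefined/Infinity.
function squiggleNumber(x) {
  if (typeof x !== "number" || !Number.isFinite(x)) {
    throw new TypeError(`expected a finite number, got ${x}`);
  }
  return String(x);
}

// Hypothetical builder for the normal + uniform mixture from Example #1.
function normalUniformMixture(norm, uni, weights) {
  const n = squiggleNumber;
  const parts = [
    `normal(${n(norm.mean)}, ${n(norm.stdev)})`,
    `uniform(0, ${n(uni.max)})`,
  ];
  // Weights are optional; when given, append them as a Squiggle array
  if (weights) parts.push(`[${weights.map((w) => n(w)).join(", ")}]`);
  return `mm(${parts.join(", ")})`;
}

const squiggleValue = normalUniformMixture({ mean: 5, stdev: 2 }, { max: 10 }, [0.3, 0.7]);
console.log(squiggleValue); // mm(normal(5, 2), uniform(0, 10), [0.3, 0.7])
```

Very large or very small numbers stringify in exponent form (for example `1e+21`); whether Squiggle parses that form is not checked here.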

### Example #2:


Say you want to describe a distribution with a handful (or a large number) of calculated CDF points. You could do this by wrapping these points in a function that converts them into a smooth distribution using one of several possible interpolation methods. In Javascript, this might look something like:

```
var points = [[1, 30], [4, 40], [50, 70]];
// JSON.stringify keeps the nested pairs; a plain ${points} would flatten them to "1,30,4,40,50,70"
var squiggleValue = `interpolatePoints(${JSON.stringify(points)}, metalog)`
```

I could imagine that the majority of distributions generated from other code would be sent this way. However, I can’t yet tell what the specifics would be or which interpolation strategies would be favored. Supporting many options would let us wait and learn what works best. If one syntax ends up being used an overwhelming proportion of the time, perhaps it could be separated into its own simpler format.

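The simplest (and least smooth) interpolation strategy is linear interpolation of the CDF, which gives a piecewise-constant density; a family like the metalog would produce a smooth shape instead. The sketch below reads the example's `[x, percent]` pairs as cumulative probabilities (30% at 1, 40% at 4, 70% at 50) and adds made-up endpoints at 0 and 100 so the CDF starts at 0 and ends at 1. `linearCdf` is a hypothetical name, not part of Squiggle.

```js
// Piecewise-linear CDF through (x, p) points, where p is the cumulative probability at x.
// Assumes distinct x values, with the lowest point at p = 0 and the highest at p = 1.
function linearCdf(points) {
  const pts = [...points].sort((a, b) => a[0] - b[0]);
  if (pts[0][1] !== 0 || pts[pts.length - 1][1] !== 1) {
    throw new Error("first point needs p = 0 and last point p = 1");
  }
  const lo = pts[0][0];
  const hi = pts[pts.length - 1][0];

  // Index i of the segment [pts[i - 1], pts[i]] containing x (x must lie within [lo, hi])
  const segment = (x) => {
    let i = 1;
    while (x > pts[i][0]) i++;
    return i;
  };

  const cdf = (x) => {
    if (x <= lo) return 0;
    if (x >= hi) return 1;
    const i = segment(x);
    const [x0, p0] = pts[i - 1];
    const [x1, p1] = pts[i];
    return p0 + ((p1 - p0) * (x - x0)) / (x1 - x0);
  };

  // The density is the CDF's slope, constant between neighbouring points
  const pdf = (x) => {
    if (x < lo || x > hi) return 0;
    const i = segment(x);
    const [x0, p0] = pts[i - 1];
    const [x1, p1] = pts[i];
    return (p1 - p0) / (x1 - x0);
  };

  return { cdf, pdf };
}

// Illustrative points only: the example's pairs as probabilities, plus made-up endpoints
const dist = linearCdf([[0, 0], [1, 0.3], [4, 0.4], [50, 0.7], [100, 1]]);
console.log(dist.cdf(2.5), dist.pdf(2.5));
```

The jumps in `pdf` at each point are exactly the kind of artifact a smoother method is meant to avoid.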

### Example #3:


Say you want to estimate Tesla’s stock price at every point in the next 10 years. You decide to estimate this using a simple analytical equation, where you predict that the price of Tesla stock can be modeled as growing by a mean of -3 to 8 percent each year from the current price, using a normal distribution (apologies to Nassim Taleb).

You have a script that fetches Tesla’s current stock price and then uses it in the following string template:

```
var squiggleValue = `(t) => ${current_price} * (0.97 to 1.08)^t`
```

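Under two assumptions, that `a to b` is Squiggle’s lognormal shorthand with `a` and `b` as its 5th and 95th percentiles, and that a single growth factor $g$ is drawn and then compounded $t$ times, the template above has a closed form (so under this reading the yearly growth factor is lognormal rather than normal). Writing $p_0$ for `current_price` and $t \ge 0$:

$$
\begin{aligned}
g &\sim \mathrm{Lognormal}(\mu, \sigma), \qquad
\mu = \frac{\ln 0.97 + \ln 1.08}{2}, \qquad
\sigma = \frac{\ln 1.08 - \ln 0.97}{2 \times 1.645} \\
\ln \mathrm{price}(t) &= \ln p_0 + t \ln g \;\sim\; \mathcal{N}\!\left(\ln p_0 + t\mu,\; (t\sigma)^2\right) \\
\operatorname{median}\left[\mathrm{price}(t)\right] &= p_0 \left(\sqrt{0.97 \times 1.08}\right)^{t} \approx p_0 \times 1.0235^{t}
\end{aligned}
$$

On the log scale the spread grows linearly in $t$, so long-range forecasts from this model widen quickly.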

It may seem a bit silly not to just fetch Tesla’s price from within Squiggle, but keeping it outside does help separate concerns. Data fetching within Squiggle would raise a bunch of issues, especially when trying to score Squiggle functions.

## History: From Guesstimate to Squiggle


The history of “Squiggle” goes back to early Guesstimate. It’s been quite a meandering journey. I never really expected things to go the particular way they did, but I am relatively satisfied with how things are right now. I imagine these details won’t interest most readers, but I wanted to include them for those particularly close to the project, or for those curious about what I personally have been up to.

90% of the work on Squiggle has been on a probability distribution editor (“A high-level ReasonML library for probability distribution functions”). This has been a multi-year process, including my time with Guesstimate. The other 10% of the work, on the custom functions, is much more recent.

Things started with [Guesstimate](https://www.getguesstimate.com/) in around 2016. The Guesstimate editor used a simple sampling setup. It was built with [Math.js](https://mathjs.org/) plus a bit of tooling to support sampling and a few custom functions.[1] The editor produced histograms, as opposed to smooth shapes.


When I started working on [Foretold](https://www.foretold.io/) in 2018, I hoped we could directly take the editor from Guesstimate. It soon became clear that the histograms it produced wouldn’t be adequate.

In Foretold, we needed to score distributions. Scoring distributions requires finding the probability density function at different points, which requires a continuous representation of the distribution. Converting random samples to a continuous distribution requires kernel density estimation. I tried simple kernel density estimation but couldn’t get it to work well. Random variation in the distribution’s shape makes for a poor experience for forecasting users: it brings randomness into scoring, it looks strange (and is confusing), and it’s terrible when there are long tails.

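For readers unfamiliar with the technique, here is a plain Gaussian kernel density estimate. The text doesn't say which kernel or bandwidth Foretold tried, so both are assumptions: the bandwidth uses a common normal-reference rule of thumb, 1.06 · sd · n^(-1/5). With few samples or long tails, the resulting shape depends heavily on which samples happened to be drawn, which is the randomness problem described above.

```js
// Standard normal density, used here as the smoothing kernel
function gaussianKernel(u) {
  return Math.exp(-0.5 * u * u) / Math.sqrt(2 * Math.PI);
}

// Normal-reference rule-of-thumb bandwidth: 1.06 * (sample sd) * n^(-1/5)
function ruleOfThumbBandwidth(samples) {
  const n = samples.length;
  const mean = samples.reduce((s, x) => s + x, 0) / n;
  const variance = samples.reduce((s, x) => s + (x - mean) ** 2, 0) / (n - 1);
  return 1.06 * Math.sqrt(variance) * Math.pow(n, -1 / 5);
}

// Density estimate: the average of one kernel "bump" centred on each sample
function kde(samples, bandwidth = ruleOfThumbBandwidth(samples)) {
  const n = samples.length;
  return (x) => {
    let sum = 0;
    for (const xi of samples) sum += gaussianKernel((x - xi) / bandwidth);
    return sum / (n * bandwidth);
  };
}

const density = kde([1, 2, 3, 4]);
console.log(density(2.5));
```

Evaluating `density` on a grid is what turns samples into the continuous curve needed for scoring; every call costs O(n) kernel evaluations, which adds up for large sample sets.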

Limited distribution editors like those in Metaculus or Elicit don’t use sampling; they use numeric techniques. For example, to take the pointwise sum of three uniform distributions, they would take the pdfs at each point and add them vertically. Numeric techniques are well defined for only a narrow subset of combinations of distributions. The main problem with these editors is that they are (so far) highly limited in flexibility; you can only make linear combinations of single kinds of distributions (logistic distributions in Metaculus and uniform ones in Elicit).

It took a while, but we eventually created a simple editor that would use numeric techniques to combine a small subset of distributions and functions using a semi-flexible string representation. If users requested functionality not available in this editor (like multiplying two distributions together, which would require sampling), it would fall back to the old editor. This was useful but suboptimal. It required us to keep two versions of the editor with slightly different syntaxes, which was not fun for users to keep track of.

The numeric solver could handle syntax like:

```
multimodal(normal(5,2), uniform(10,13), [.2,.8])
```

But it would break any time you wanted to use any other function, like:

```
multimodal(normal(5,2) + lognormal(1,1.5), uniform(10,13), [.2,.8])*100
```

The next step was making a system that could choose more precisely between numeric methods and Monte Carlo sampling.

At this point we needed to replace most of Math.js. Careful control over the use of Monte Carlo techniques vs. numeric techniques required us to write our own interpreter. [Sebastian Kosch](https://aldusleaf.org/) took the first main stab at this. I then read a fair bit about how to write interpreted languages and fleshed out the functionality. If you’re interested, the book [Crafting Interpreters](https://craftinginterpreters.com/) is pretty great on this topic.

At this point we were 80% of the way to having simple variables and functions, so those made sense to add as well. Once we had functions, it was simple to try out visualizations of single-variable distributions, something I’ve wanted to test out for a long time. This proved surprisingly fun, though of course it was limited (and still is).

After experimenting with these functions, and spending a lot more time thinking about them, I decided to focus more on making this a formalized language in order to better explore a few areas. This is when I took the language out of its previous application (called WideDomain; it’s not important now) and renamed it Squiggle.

[1] It was great this worked at the time; writing my own version may have been too challenging, so it’s possible this hack was counterfactually responsible for Guesstimate.

docs/blog/2021-11-23-overview.md

@ -0,0 +1,194 @@

---
slug: overview-1
title: Squiggle Overview
authors: ozzie
---

I’ve spent a fair bit of time over the last several years iterating on a text-based probability distribution editor (the ``5 to 10`` input editor in Guesstimate and Foretold). Recently I’ve added some programming language functionality to it, and have decided to refocus it as a domain-specific language.


The language is currently called *Squiggle*. Squiggle is made for expressing distributions and functions that return distributions. I hope that it can be used one day for submitting complex predictions on Foretold and other platforms.


Right now Squiggle is very much a research endeavor. I’m sacrificing a lot of stability and ease of deployment in order to test out exciting possible features. If it were being developed in a tech company, it would be in the “research” or “labs” division.

You can mess with the current version of Squiggle [here](https://squiggle-language.com/dist-builder). Consider it to be in a pre-alpha stage. If you do try it out, please contact me with questions and concerns. It is still fairly buggy and undocumented.

I expect to spend a lot of time on Squiggle in the next several months or years. I’m curious to get feedback from the community. In the short term I’d like high-level feedback; in the longer term I’d appreciate user testing. If you have thoughts or would care to just have a call and chat, please reach out! We ([The Quantified Uncertainty Research Institute](https://quantifieduncertainty.org/)) have some funding now, so I’m also interested in contractors or hires if someone is a really great fit.

Squiggle was previously introduced in a short talk that was transcribed [here](https://www.lesswrong.com/posts/kTzADPE26xh3dyTEu/multivariate-estimation-and-the-squiggly-language), and Nuño Sempere wrote a post about using it [here](https://www.lesswrong.com/posts/kTzADPE26xh3dyTEu/multivariate-estimation-and-the-squiggly-language).

*Note: the code for this has developed since my time on Guesstimate. With Guesstimate, I had one cofounder, Matthew McDermott. During the last two years, I’ve had a lot of help from a handful of programmers and enthusiasts. Many thanks to Sebastian Kosch and Nuño Sempere, who both contributed. I’ll refer to this vague collective as “we” throughout this post.*


---


# Video Demo


<iframe width="675" height="380" src="https://www.youtube.com/embed/kJLybQWujco" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>


## A Quick Tour


The syntax is forked from Guesstimate and Foretold.


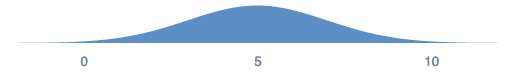

**A simple normal distribution**


```
normal(5,2)
```

You may notice that unlike Guesstimate, the distribution is nearly perfectly smooth. It’s this way because it doesn’t use sampling for (many) functions where it doesn’t need to.


**Lognormal shorthand**


```
5 to 10
```

This results in a lognormal distribution whose 5th and 95th percentiles are 5 and 10, respectively (that is, 5 to 10 is its 90% confidence interval).

You can also write lognormal distributions as `lognormal(1,2)` or `lognormal({mean: 3, stdev: 8})`.

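Each of these lognormal forms maps onto the (mu, sigma) of the underlying normal distribution of log(x) via standard formulas. This sketch assumes that `a to b` means `a` and `b` are the 5th and 95th percentiles (as described above), that `lognormal(mu, sigma)` takes the mean and standard deviation of log(x), and that `{mean, stdev}` describes x itself; the function names are hypothetical, not Squiggle's API.

```js
// z-score of the 95th percentile of a standard normal, so [-Z95, +Z95] spans a 90% interval
const Z95 = 1.6448536269514722;

// (mu, sigma) of log(x) for a lognormal whose 5th and 95th percentiles are low and high
function lognormalFromInterval(low, high) {
  const logLow = Math.log(low);
  const logHigh = Math.log(high);
  return { mu: (logLow + logHigh) / 2, sigma: (logHigh - logLow) / (2 * Z95) };
}

// (mu, sigma) of log(x) for a lognormal with the given mean and standard deviation of x
function lognormalFromMeanStdev(mean, stdev) {
  const sigma2 = Math.log(1 + (stdev * stdev) / (mean * mean));
  return { mu: Math.log(mean) - sigma2 / 2, sigma: Math.sqrt(sigma2) };
}

// Quantile of lognormal(mu, sigma) at standard-normal z-score z
function lognormalQuantile({ mu, sigma }, z) {
  return Math.exp(mu + sigma * z);
}

const p = lognormalFromInterval(5, 10);
// Prints values equal to 5 and 10 up to floating-point rounding
console.log(lognormalQuantile(p, -Z95), lognormalQuantile(p, Z95));
```

The interval form is usually easier to elicit intuitively, which is presumably why `5 to 10` is the shorthand.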

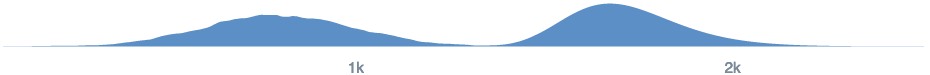

**Mix distributions with the multimodal function**


```
multimodal(normal(5,2), uniform(14,19), [.2, .8])
```

You can also use the shorthand `mm()`, and add an array at the end to represent the weights of each combined distribution.

*Note: Right now, in the demo, I believe “multimodal” is broken, but you can use “mm”.*

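For intuition, the mixture that `multimodal`/`mm` describes can be computed numerically as a weighted pointwise sum of the component densities, the same "add them vertically" approach the numeric editors use. This sketch assumes the weights are rescaled to sum to 1 (so `[1, 4]` behaves like `[.2, .8]`); whether Squiggle normalizes weights this way is an assumption, and `mixturePdf` is a hypothetical name.

```js
// Normal density with the given mean and standard deviation
function normalPdf(mean, stdev) {
  return (x) =>
    Math.exp(-0.5 * ((x - mean) / stdev) ** 2) / (stdev * Math.sqrt(2 * Math.PI));
}

// Uniform density on [low, high]
function uniformPdf(low, high) {
  return (x) => (x >= low && x <= high ? 1 / (high - low) : 0);
}

// Weighted pointwise sum of component densities; weights are rescaled to sum to 1
function mixturePdf(pdfs, weights) {
  const total = weights.reduce((s, w) => s + w, 0);
  return (x) => pdfs.reduce((s, pdf, i) => s + (weights[i] / total) * pdf(x), 0);
}

// Mirrors multimodal(normal(5,2), uniform(14,19), [.2, .8])
const mix = mixturePdf([normalPdf(5, 2), uniformPdf(14, 19)], [0.2, 0.8]);
console.log(mix(5), mix(16));
```

Because each component density integrates to 1 and the weights sum to 1, the mixture also integrates to 1 without any extra renormalization step.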

**Mix distributions with discrete data**


*Note: This is particularly buggy.*

```
multimodal(0, 10, normal(4,5), [.4,.1, .5])
```

**Variables**


```
expected_case = normal(5,2)
long_tail = 3 to 1000
multimodal(expected_case, long_tail, [.2,.8])
```

**Simple calculations**


When calculations are done on two distributions and there is no trivial symbolic solution, the system uses Monte Carlo sampling for those combinations. This assumes the distributions are perfectly independent.

```
multimodal(normal(5,2) + uniform(10,3), (5 to 10) + 10) * 100
```

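A minimal sketch of that Monte Carlo fallback for a sum, assuming independence as described: draw from each input, add the draws pairwise, and treat the results as samples of the sum. It sums `normal(5,2)` and `uniform(10,13)` (parameters chosen only for illustration). The seeded generator (mulberry32) and the Box-Muller normal sampler are standard techniques picked here for reproducibility, not necessarily what Squiggle uses internally.

```js
// Small seeded PRNG (mulberry32) so runs are reproducible; returns floats in [0, 1)
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Box-Muller transform; 1 - rng() lies in (0, 1], so the logarithm stays finite
function sampleNormal(rng, mean, stdev) {
  const u1 = 1 - rng();
  const u2 = rng();
  return mean + stdev * Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
}

function sampleUniform(rng, low, high) {
  return low + (high - low) * rng();
}

// Independent draws from each input, added pairwise, approximate the sum's distribution
function sumSamples(n, seed) {
  const rng = mulberry32(seed);
  const out = new Float64Array(n);
  for (let i = 0; i < n; i++) {
    out[i] = sampleNormal(rng, 5, 2) + sampleUniform(rng, 10, 13);
  }
  return out;
}

const samples = sumSamples(100000, 42);
const mean = samples.reduce((s, x) => s + x, 0) / samples.length;
console.log(mean); // close to 5 + 11.5 = 16.5
```

The samples would then go through a density-estimation step (such as kernel density estimation) to become a smooth curve, which is where the shape randomness discussed in the history post comes from.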

**Pointwise calculations**


We have an infix operator for what can be described as pointwise distribution calculations (`.*` for multiplication). Calculations are done along the y-axis instead of the x-axis, so to speak. “Pointwise” multiplication is equivalent to an independent Bayesian update. After each calculation, the distributions are renormalized.

```
normal(10,4) .* normal(14,3)
```

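To make “pointwise” concrete, here is a small numeric sketch of `normal(10,4) .* normal(14,3)`: multiply the two densities at each point of a grid, then renormalize so the area is 1. The grid bounds and step are arbitrary choices for this illustration, not Squiggle's internals. For two normals, the standard closed-form result is again a normal, with mean (10·3² + 14·4²)/(3² + 4²) = 12.56 and standard deviation 4·3/√(3² + 4²) = 2.4, which the grid result should match closely.

```js
// Normal density with the given mean and standard deviation
function normalPdf(mean, stdev) {
  return (x) =>
    Math.exp(-0.5 * ((x - mean) / stdev) ** 2) / (stdev * Math.sqrt(2 * Math.PI));
}

// Pointwise product of two densities on an evenly spaced grid, renormalized to integrate to 1
function pointwiseProduct(f, g, lo, hi, steps) {
  const dx = (hi - lo) / steps;
  const xs = [];
  const ys = [];
  for (let i = 0; i <= steps; i++) {
    const x = lo + i * dx;
    xs.push(x);
    ys.push(f(x) * g(x));
  }
  // Trapezoidal rule for the area under the unnormalized product
  let area = 0;
  for (let i = 0; i < steps; i++) area += ((ys[i] + ys[i + 1]) / 2) * dx;
  return { xs, ys: ys.map((y) => y / area), dx };
}

const { xs, ys, dx } = pointwiseProduct(normalPdf(10, 4), normalPdf(14, 3), -20, 50, 7000);
// Mean and standard deviation of the renormalized result (simple Riemann sums)
const mean = xs.reduce((s, x, i) => s + x * ys[i] * dx, 0);
const variance = xs.reduce((s, x, i) => s + (x - mean) ** 2 * ys[i] * dx, 0);
console.log(mean, Math.sqrt(variance)); // close to 12.56 and 2.4
```

The result is narrower than either input, as expected from combining two independent pieces of evidence.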

**First-Class Functions**


When a function is written, we can display a plot of that function over many values of a single variable. The plots below put that single input variable on the x-axis and show various percentiles, going outward from the median.

```
myFunction(t) = normal(t,10)
myFunction
```

```
myFunction(t) = normal(t^3,t^3.1)
myFunction
```

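A rough sketch of how such percentile bands could be computed, assuming the function returns a normal distribution at each input so that every percentile is simply mean + stdev · z. This is not how Squiggle's plotting is implemented; a general version would need quantiles of arbitrary distributions. `Z` and `percentileBands` are hypothetical names.

```js
// Standard-normal z-scores for the percentiles to plot (hard-coded to avoid an inverse-CDF routine)
const Z = {
  p5: -1.6448536269514722,
  p25: -0.6744897501960817,
  p50: 0,
  p75: 0.6744897501960817,
  p95: 1.6448536269514722,
};

// For a function t -> normal(meanOf(t), stdevOf(t)), compute each plotted percentile at every t
function percentileBands(meanOf, stdevOf, ts) {
  return ts.map((t) => {
    const row = { t };
    for (const [name, z] of Object.entries(Z)) {
      row[name] = meanOf(t) + stdevOf(t) * z;
    }
    return row;
  });
}

// myFunction(t) = normal(t, 10), evaluated at a few points along the x-axis
const rows = percentileBands((t) => t, () => 10, [0, 5, 10]);
console.table(rows);
```

The second example would be `percentileBands((t) => t ** 3, (t) => t ** 3.1, ts)`; for it, `ts` should stay positive, since a negative base with a fractional exponent gives `NaN` in Javascript.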

## Reasons to Focus on Functions


Up until recently, Squiggle didn’t have function support. Going forward this will be the primary feature.


Functions are useful for two distinct purposes. First, they allow composition of models. Second, they can be submitted directly as predictions. For instance, in theory you could predict, “For any point in time T, and company N, from now until 2050, this function will predict the market cap of the company.”

At this point I’m convinced of a few things:


* It’s possible to intuitively write distributions and functions that return distributions, with the right tooling.


* Functions that return distributions are highly preferable to specific distributions, if possible.


* It would also be great if existing forecasting models could be distilled into common formats.


* There’s very little activity in this space now.


* Further exploring the space has a high value of information.

* Writing a small DSL like this will be a fair bit of work, but can be feasible if the functionality is kept limited.


* Also, having a simple language equivalent for Guesstimate-style models would be useful in several other ways.

I think that this is a highly neglected area and I’m surprised it hasn’t been explored more. It’s possible that doing a good job is too challenging for a small team, but I think it’s worth investigating further.


## What Squiggle is Meant For


The first main purpose of Squiggle is to help facilitate the creation of judgementally estimated distributions and functions.


Existing solutions assume the use of either data analysis and models, or judgemental estimation for points, but not judgemental estimation to intuit models. Squiggle is meant to allow people to estimate functions in situations where there is very little data available, and it’s assumed all or most variables will be intuitively estimated.


A second possible use case is to embed the results of computational models. Functions in Squiggle are rather portable and composable. Squiggle (or better future tools) could help make the results of these models interoperable.


One thing that Squiggle is **not** meant for is heavy calculation. It’s not a probabilistic programming language, because it doesn’t specialize in inference. Squiggle is a high-level language and is not great for performance optimization. The idea is that if you need to do heavy computational modeling, you’d do so using separate tools, then convert the results to lookup tables or other simple functions that you could express in Squiggle.


One analogy is online estimation “calculators” and “model explorers”, such as the [microCOVID Project](https://www.microcovid.org/?distance=normal&duration=120&interaction=oneTime&personCount=20&riskProfile=closedPod20&setting=outdoor&subLocation=US_06001&theirMask=basic&topLocation=US_06&voice=normal&yourMask=basic) calculator and the [COVID-19 Predictions](https://covid19.healthdata.org/united-states-of-america?view=total-deaths&tab=trend). In both of these, I assume there was some data analysis and processing stage done on the analysts’ local machines. The results were translated into some processed format (like a set of CSV files), and then custom code was written for a front end to analyze and display that data.

If they were to use a hypothetical unified front-end format, this would mean converting their results into a Javascript function that could be called through a standardized interface. This standardization would make it easier for these calculators to be called by third-party widgets and UIs, or to be downloaded and called from other workflows. The priority here is that the calculators run quickly and that the necessary code and data are minimized in size. Heavy calculation and analysis would still happen separately.

### Future “Comprehensive” Uses


On the more comprehensive end, it would be interesting to figure out how individuals or collectives could make large clusters of these functions, where many functions call other functions, and continuous data is pulled in. The latter would probably require some server/database setup that ingests Squiggle files.


I think the comprehensive end is significantly more exciting than simpler use cases, but also significantly more challenging. It’s equivalent to going from Docker the core technology, to Docker Hub, and then to an attempt at Kubernetes. Here we barely have a prototype of the proverbial Docker, so there’s a lot of work to do.

### Why doesn’t this exist already?


I will briefly pause here to flag that the comprehensive end seems like a fairly obvious goal, and I’m quite surprised that, from what I can tell, it hasn’t really been attempted yet. I imagine such work could be useful to many important actors, conditional on them understanding how to use it.

My best guess is that this is due to some mix of the following:

* It’s too technical for many people to be comfortable with.


* There’s a fair amount of work to be done, and it’s difficult to monetize quickly.


* There’s been an odd, long-standing cultural bias against clearly intuitive estimates.


* The work is substantially harder than I realize.


# Related Tools


**Guesstimate**


I previously made Guesstimate and take a lot of inspiration from it. Squiggle will be a language that uses pure text, not a spreadsheet. Perhaps Squiggle could one day be made available within Guesstimate cells.


**Ergo**


[Ought](https://ought.org/) has a Python library called [Ergo](https://github.com/oughtinc/ergo) with a lot of tooling for judgemental forecasting. It’s written in Python, so it works well with the Python ecosystem. My impression is that it’s made much more for doing calculations on specific distributions than for representing functions. Maybe Ergo results could eventually be embedded into Squiggle functions.

**Elicit**


[Elicit](https://elicit.org/) is also made by [Ought](https://ought.org/). It does a few things; I recommend just checking it out. Perhaps Squiggle could one day be an option in Elicit as a forecasting format.

**Causal**


[Causal](https://www.causal.app/) is a startup that makes it simple to represent distributions over time. It seems fairly optimized for clever businesses. I imagine it will probably be the most polished and easy-to-use tool in its targeted use cases for quite a while. Causal has an innovative UI with HTML blocks for the different distributions; it’s neither a spreadsheet like Guesstimate nor a programming language, but something in between.

**Spreadsheets**


Spreadsheets are really good at organizing large tables of parameters for complex estimations. Regular text files aren’t. I could imagine ways Squiggle could have native support for something like Markdown Tables that get converted into small editable spreadsheets when being edited. Another solution would be to allow the use of JSON or TOML in the language, and auto-translate that into easier tools like tables in editors that allow for them.[2]


**Probabilistic Programming Languages**


There are a bunch of powerful Probabilistic Programming Languages out there. These typically specialize in doing inference on specific data sets. Hopefully, they could be complementary to Squiggle in the long term. As said earlier, Probabilistic Programming Languages are great for computationally intense operations, and Squiggle is not.


**Prediction Markets and Prediction Tournaments**


Most of these tools have fairly simple inputs or forecasting types. If Squiggle becomes polished, I plan to encourage its use on these platforms. I would like to see Squiggle become an open-source, standardized language, but it will be a while (if ever) before it is stable enough.

**Declarative Programming Languages**


Many declarative programming languages seem relevant. There are several logical or ontological languages, but my impression is that most assume certainty, which seems vastly suboptimal. I think there’s a lot of room to explore languages that allow users to state all of their beliefs probabilistically, including statements about the relationships between these beliefs. The purpose wouldn’t be to find one specific variable (as is often true with probabilistic programming languages), but rather to express one’s beliefs to those interested, or to do various kinds of resulting analyses.

**Knowledge Graphs**


Knowledge graphs seem like the best tool for describing semantic relationships in ways that anyone outside a small group could understand. I tried making my own small knowledge graph library called [Ken](https://kenstandard.com/), which we’ve been using a little in [Foretold](https://www.foretold.io/). If Squiggle winds up achieving the comprehensive vision mentioned, I imagine there will be a knowledge graph somewhere.

For example, someone could write a function that takes in a “standard location schema” and returns a calculation of the number of piano tuners at that location. Later, when someone queries Wikipedia for a town, the system would recognize that the town has data on [Wikidata](https://www.wikidata.org/wiki/Wikidata:Main_Page), which can easily be converted into the necessary schema.

## Next Steps


Right now I’m the only active developer of Squiggle. My work is split between Squiggle, writing blog posts and content, and other administrative and organizational duties for QURI.


My first plan is to add some documentation, clean up the internals, and begin writing short programs for personal and group use. If things go well and we can find a good developer to hire, I would be excited to see what we could do after a year or two.

Ambitious versions of Squiggle would be a *lot* of work (as in, 50 to 5000+ engineer-years), so I want to take things one step at a time. I would hope that if progress is sufficiently exciting, it would be possible to either raise sufficient funding or encourage other startups and companies to attempt their own similar solutions.

## Footnotes


[1] The main challenge comes from having a language that represents symbolic mathematics and programming statements. Both of these independently seem challenging, and I have yet to find a great way to combine them. If you read this and have suggestions for learning about making mathematical languages (like Wolfram), please do let me know.


[2] I have a distaste for JSON in cases where it is primarily written and read by people. JSON was optimized for simplicity in programming, not for people. My guess is that it was a mistake to have so many modern configuration systems use JSON instead of TOML or similar.

docs/blog/authors.yml

@ -0,0 +1,5 @@

ozzie:
  name: Ozzie Gooen
  title: QURI President
  url: https://forum.effectivealtruism.org/users/oagr
  image_url: https://avatars.githubusercontent.com/u/377065?v=4

docs/docs/Javscript-library.md

@ -0,0 +1,7 @@

---
sidebar_position: 3
---

# Javascript Library

docs/docs/Language.md

@ -0,0 +1,38 @@

---
sidebar_position: 2
---

# Squiggle Language

## Distributions
```js
normal(a,b)
uniform(a,b)
lognormal(a,b)
lognormalFromMeanAndStdDev(mean, stdev)
beta(a,b)
exponential(a)
triangular(a,b,c)
mm(a,b,c, [1,2,3])
cauchy() //todo
pareto() //todo
```

## Functions
```js
truncate() //todo
leftTruncate() //todo
rightTruncate() //todo
```

## Functions
```js
pdf(distribution, float)
inv(distribution, float)
cdf(distribution, float)
mean(distribution)
sample(distribution)
scaleExp(distribution, float)
scaleMultiply(distribution, float)
scaleLog(distribution, float)
```

docs/docusaurus.config.js

@ -0,0 +1,101 @@

// @ts-check
// Note: type annotations allow type checking and IDEs autocompletion

const lightCodeTheme = require('prism-react-renderer/themes/github');
const darkCodeTheme = require('prism-react-renderer/themes/dracula');

/** @type {import('@docusaurus/types').Config} */
const config = {
  title: 'Squiggle',
  tagline: 'A programming language for probabilistic estimation',
  url: 'https://your-docusaurus-test-site.com',
  baseUrl: '/',
  onBrokenLinks: 'throw',
  onBrokenMarkdownLinks: 'warn',
  favicon: 'img/favicon.ico',
  organizationName: 'facebook', // Usually your GitHub org/user name.
  projectName: 'docusaurus', // Usually your repo name.

  presets: [
    [
      'classic',
      /** @type {import('@docusaurus/preset-classic').Options} */
      ({
        docs: {
          sidebarPath: require.resolve('./sidebars.js'),
          // Please change this to your repo.
          editUrl: 'https://github.com/facebook/docusaurus/tree/main/packages/create-docusaurus/templates/shared/',
        },
        blog: {
          showReadingTime: true,
          // Please change this to your repo.
          editUrl:
            'https://github.com/facebook/docusaurus/tree/main/packages/create-docusaurus/templates/shared/',
        },
        theme: {
          customCss: require.resolve('./src/css/custom.css'),
        },
      }),
    ],
  ],

  themeConfig:
    /** @type {import('@docusaurus/preset-classic').ThemeConfig} */
    ({
      navbar: {
        title: 'Squiggle',
        logo: {
          alt: 'Squiggle Logo',
          src: 'img/logo.svg',