diff --git a/blog/2023/01/23/my-highly-personal-skepticism-braindump-on-existential-risk/index.md b/blog/2023/01/23/my-highly-personal-skepticism-braindump-on-existential-risk/index.md

index 6a04350..b3b3536 100644

--- a/blog/2023/01/23/my-highly-personal-skepticism-braindump-on-existential-risk/index.md

+++ b/blog/2023/01/23/my-highly-personal-skepticism-braindump-on-existential-risk/index.md

@@ -130,11 +130,11 @@ One particular dynamic that I’ve seen some gung-ho AI risk people mention is t

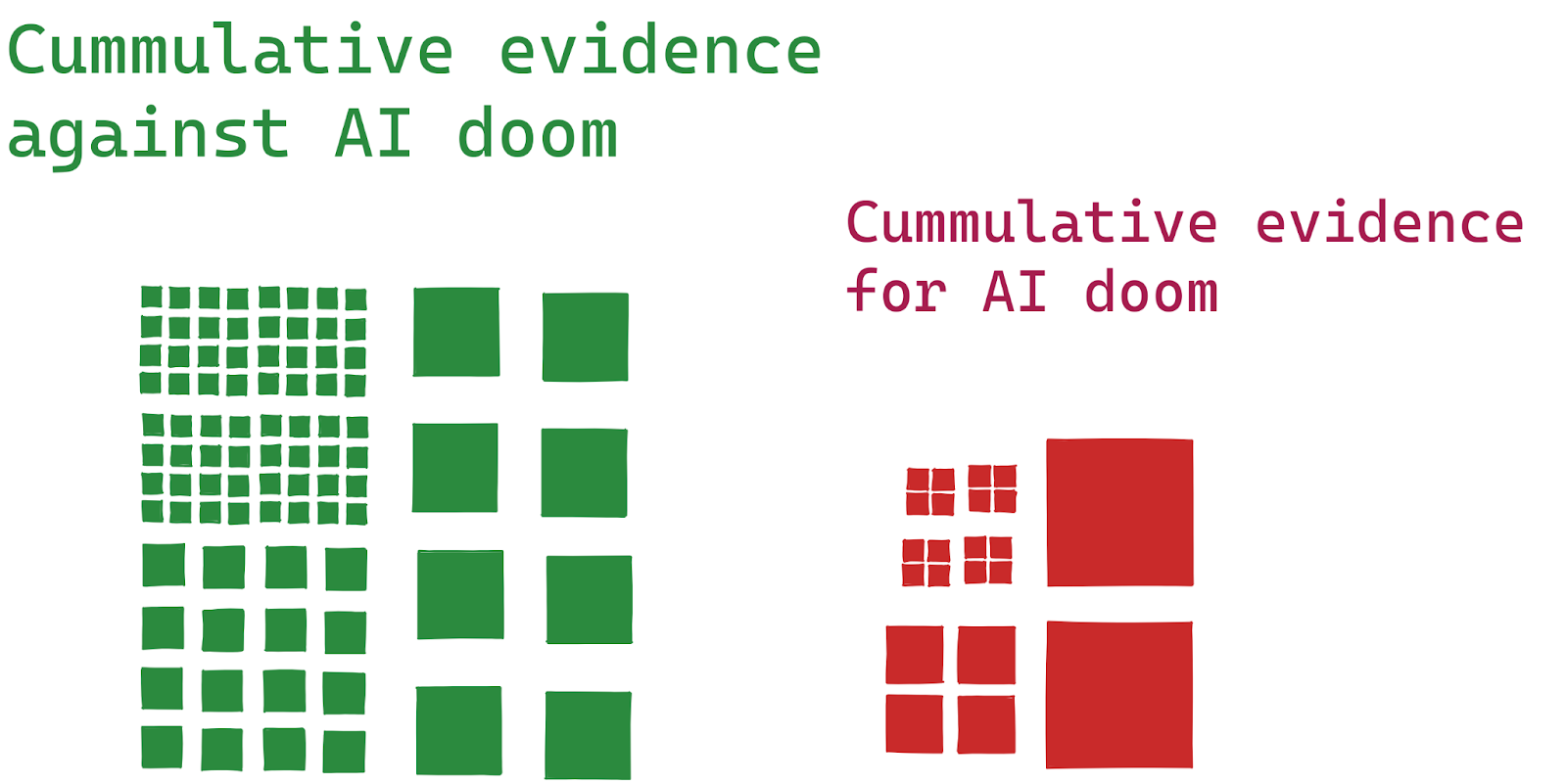

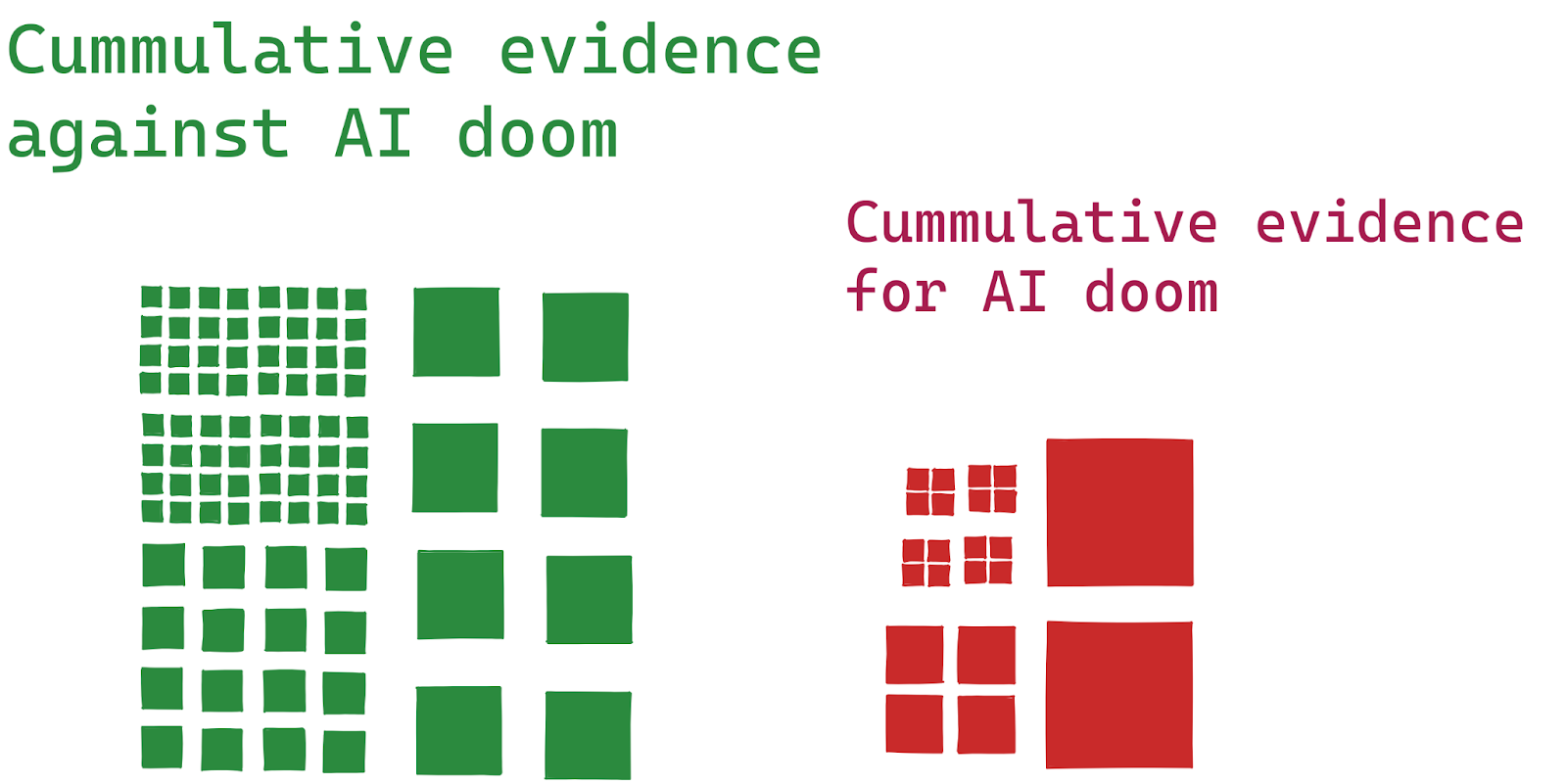

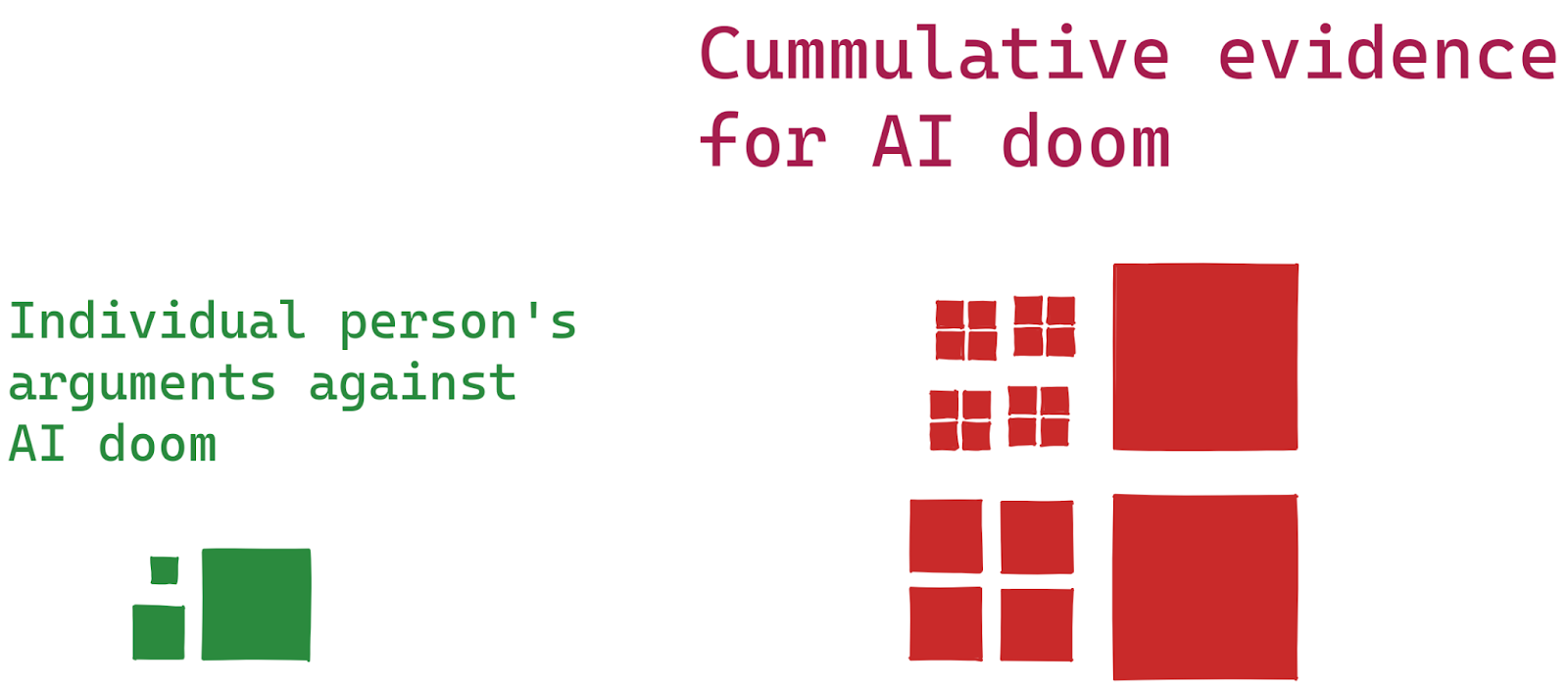

So, in illustration, the overall balance could look something like:

- +

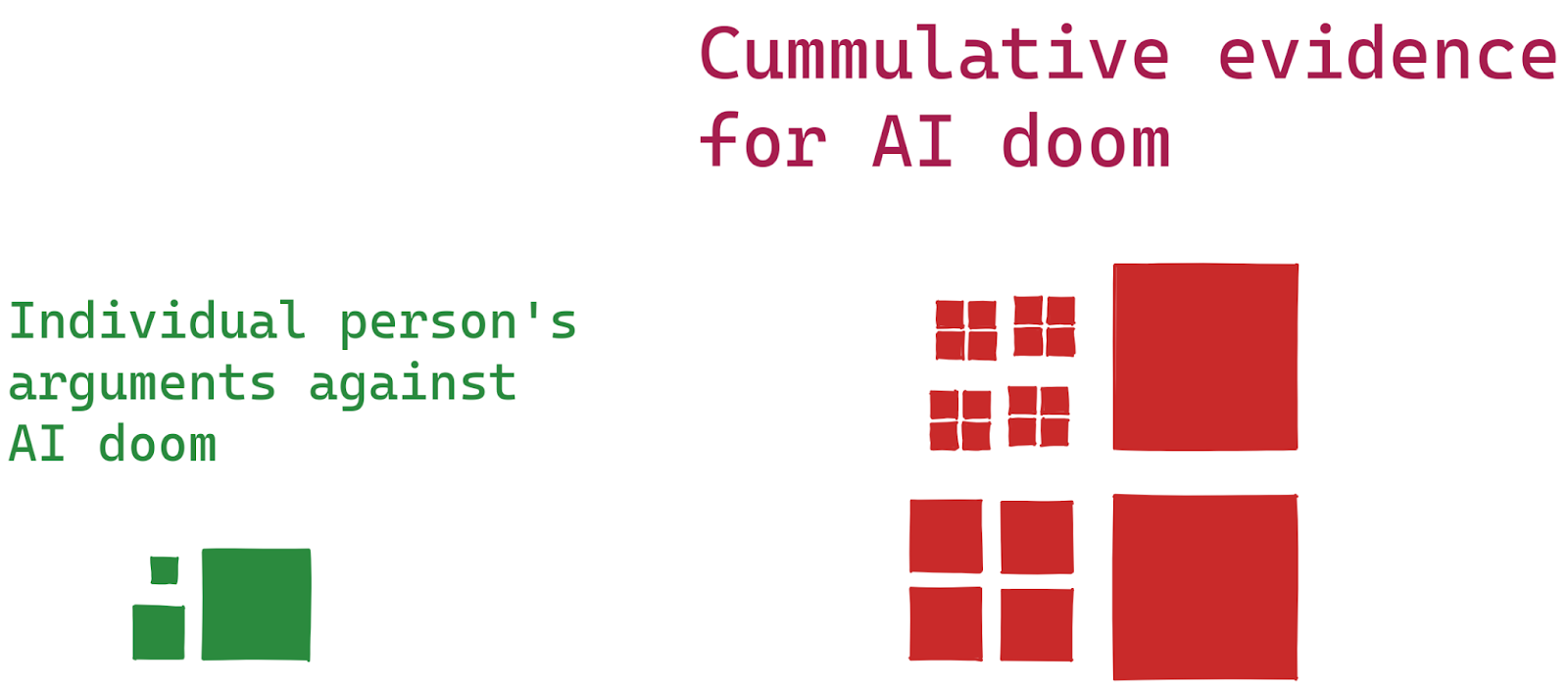

+ Whereas the individual matchup could look something like:

- +

+ And so you would expect the natural belief dynamics stemming from that type of matchup.

@@ -272,7 +272,7 @@ This is all.

# Acknowledgements

- +

+ I am grateful to the [Samotsvety](https://samotsvety.org/) forecasters who have discussed this topic with me, and to Ozzie Gooen for comments and review. The above post doesn't necessarily represent the views of other people at the [Quantified Uncertainty Research Institute](https://quantifieduncertainty.org/), which nonetheless supports my research.

diff --git a/blog/2023/01/30/an-in-progress-experiment-to-test-how-laplace-s-rule-of/index.md b/blog/2023/01/30/an-in-progress-experiment-to-test-how-laplace-s-rule-of/index.md

index 95a66c2..42635fc 100644

--- a/blog/2023/01/30/an-in-progress-experiment-to-test-how-laplace-s-rule-of/index.md

+++ b/blog/2023/01/30/an-in-progress-experiment-to-test-how-laplace-s-rule-of/index.md

@@ -15,7 +15,7 @@ The dataset I’m using can be seen [here](https://docs.google.com/spreadsheets

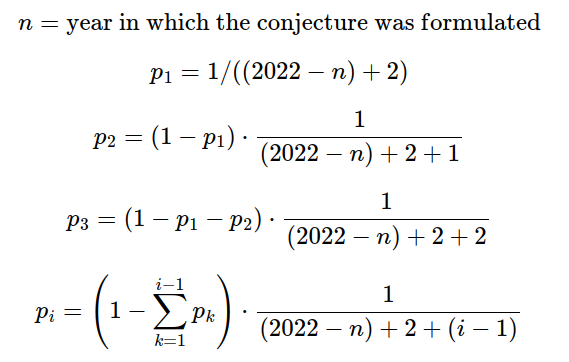

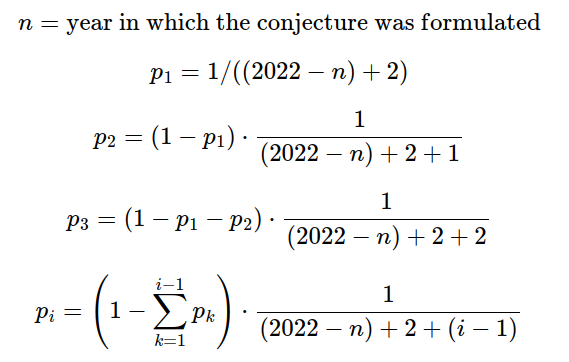

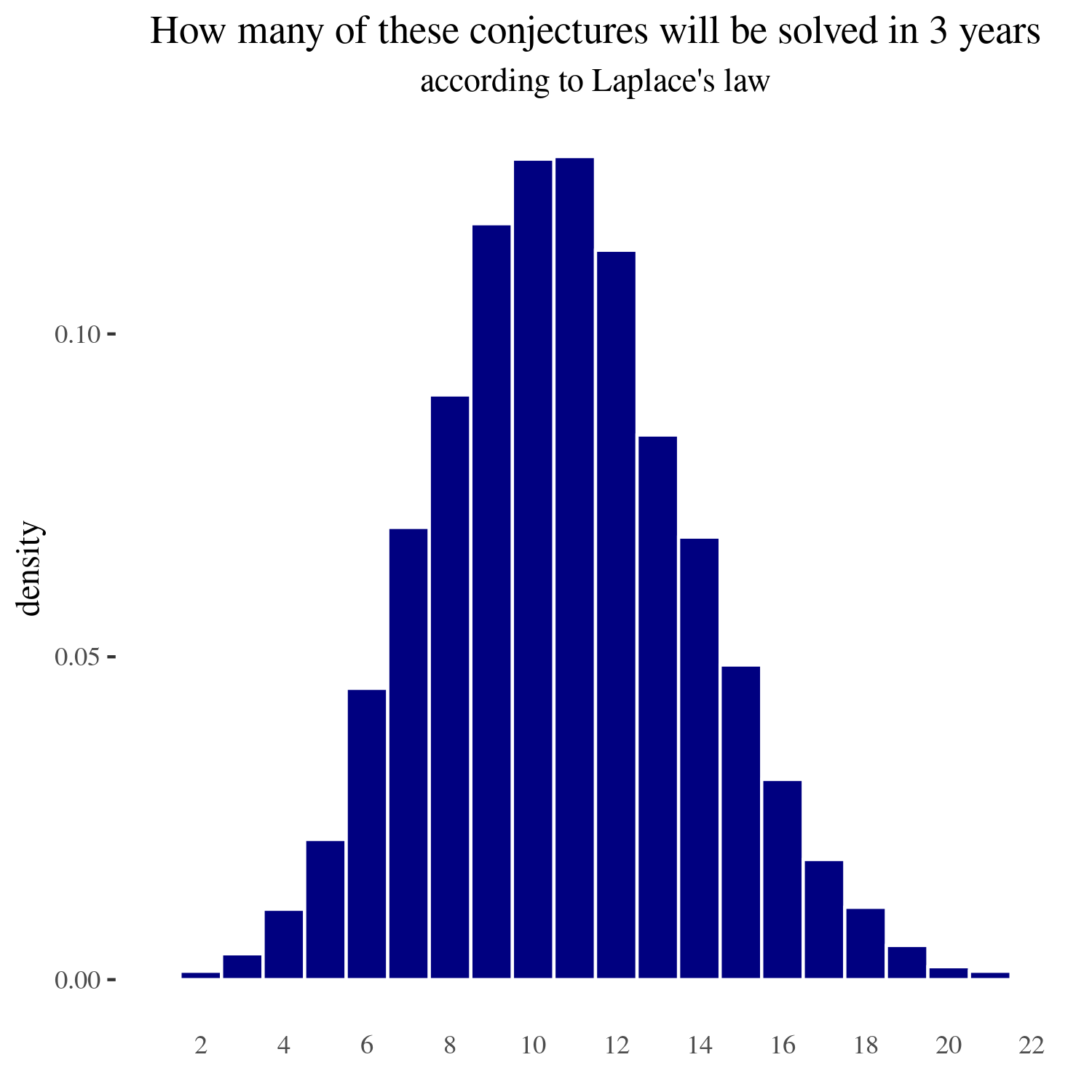

I estimate the probability that a randomly chosen conjecture will be solved as follows:

- +

+ That is, the probability that the conjecture will first be solved in the year _n_ is the probability given by Laplace conditional on it not having been solved any year before.
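As a minimal sketch of the calculation described above: the code below assumes the standard form of Laplace's rule of succession with no past successes, so a conjecture that has been open for `years_open` years is assigned a probability of `1 / (years_open + 2)` of being solved in the following year. The function names and the exact parametrization are illustrative assumptions, not taken from the post's own code.

```python
from fractions import Fraction


def laplace_next_year(years_open: int) -> Fraction:
    """Laplace's rule with 0 successes in `years_open` trials: (0 + 1) / (years_open + 2)."""
    return Fraction(1, years_open + 2)


def prob_first_solved_in_year(years_open: int, n: int) -> Fraction:
    """Probability that the conjecture is first solved in year n from now (n >= 1).

    This is the chance of staying unsolved for the first n - 1 years,
    times the Laplace probability for year n given that history.
    """
    p_still_unsolved = Fraction(1)
    for k in range(n - 1):
        p_still_unsolved *= 1 - laplace_next_year(years_open + k)
    return p_still_unsolved * laplace_next_year(years_open + n - 1)


def prob_solved_within(years_open: int, horizon: int) -> Fraction:
    """Probability of a solution at some point in the next `horizon` years."""
    return sum(
        (prob_first_solved_in_year(years_open, n) for n in range(1, horizon + 1)),
        Fraction(0),
    )
```

Under these assumptions the product telescopes, so `prob_solved_within(t, h)` equals `h / (t + h + 1)`; for example, a conjecture open for 10 years has a 3/14 chance of being solved within three years.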

@@ -29,19 +29,19 @@ Using the above probabilities, we can, through sampling, estimate the number of

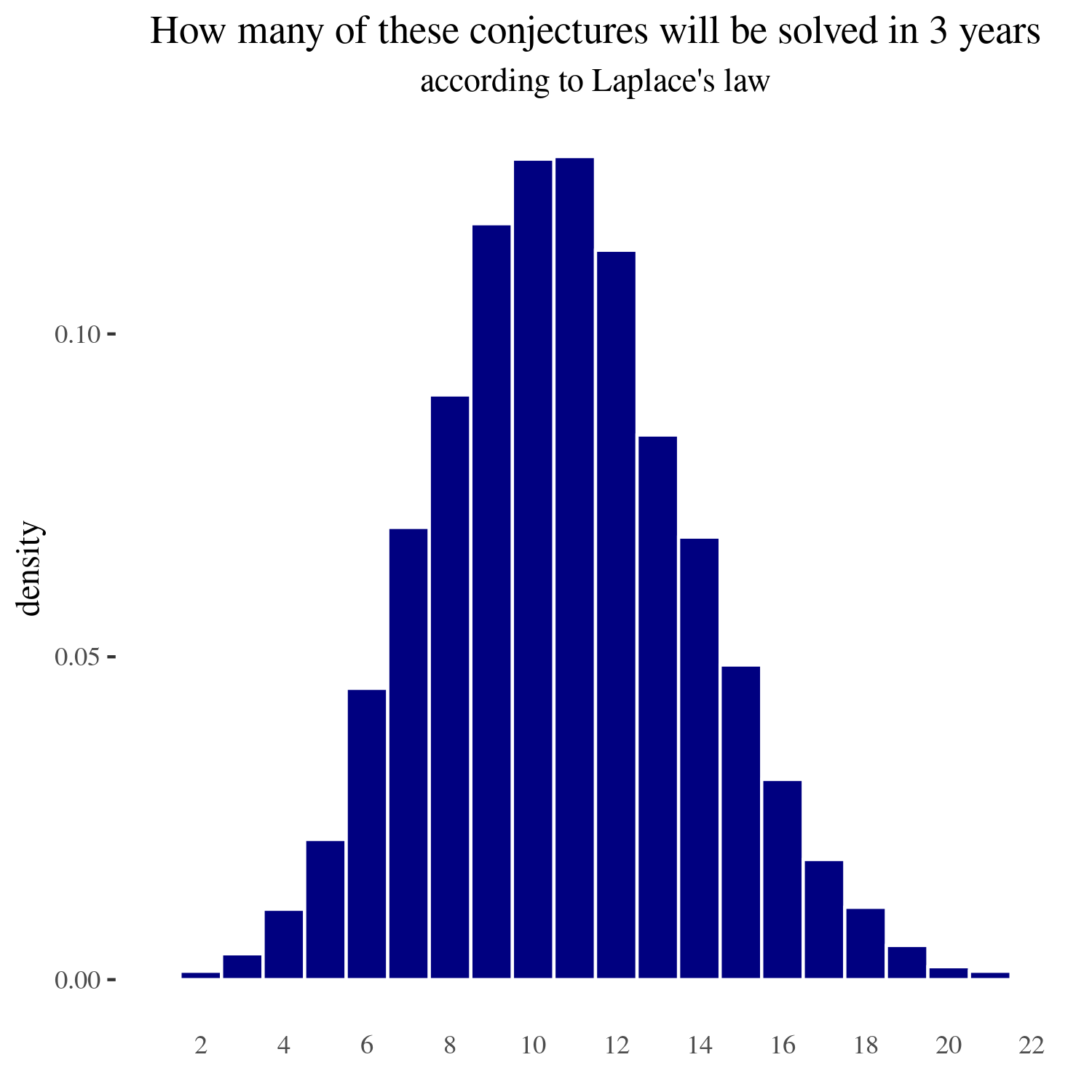

### For three years

-** **

+**

**

+** **

If we calculate the 90% and the 98% confidence intervals, these are respectively (6 to 16) and (4 to 18) problems solved in the next three years.
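Intervals of this kind can be approximated by simulation. The sketch below is only an illustration: the list of conjecture ages is made up (it is not the post's dataset), the helper names are hypothetical, and it assumes the no-prior-successes form of Laplace's rule, under which the chance of a solution within `horizon` years is `horizon / (years_open + horizon + 1)`.

```python
import random


def sample_solved_count(years_open_list, horizon, rng):
    """Simulate one possible future and count the conjectures solved within `horizon` years."""
    solved = 0
    for years_open in years_open_list:
        # Assumed Laplace-based probability of a solution within the horizon.
        p_solved = horizon / (years_open + horizon + 1)
        if rng.random() < p_solved:
            solved += 1
    return solved


def central_interval(samples, mass):
    """Return the central interval containing roughly `mass` of the samples."""
    ordered = sorted(samples)
    tail = (1 - mass) / 2
    last = len(ordered) - 1
    return ordered[int(round(tail * last))], ordered[int(round((1 - tail) * last))]


rng = random.Random(0)  # fixed seed so the run is reproducible
hypothetical_ages = [20, 35, 50, 80, 120, 150] * 10  # made-up ages, in years
samples = [sample_solved_count(hypothetical_ages, 3, rng) for _ in range(10_000)]
interval_90 = central_interval(samples, 0.90)
interval_98 = central_interval(samples, 0.98)
print(interval_90, interval_98)
```

Swapping `hypothetical_ages` for the real ages of the conjectures in the dataset, and changing the horizon, would give the analogous intervals for other time spans.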

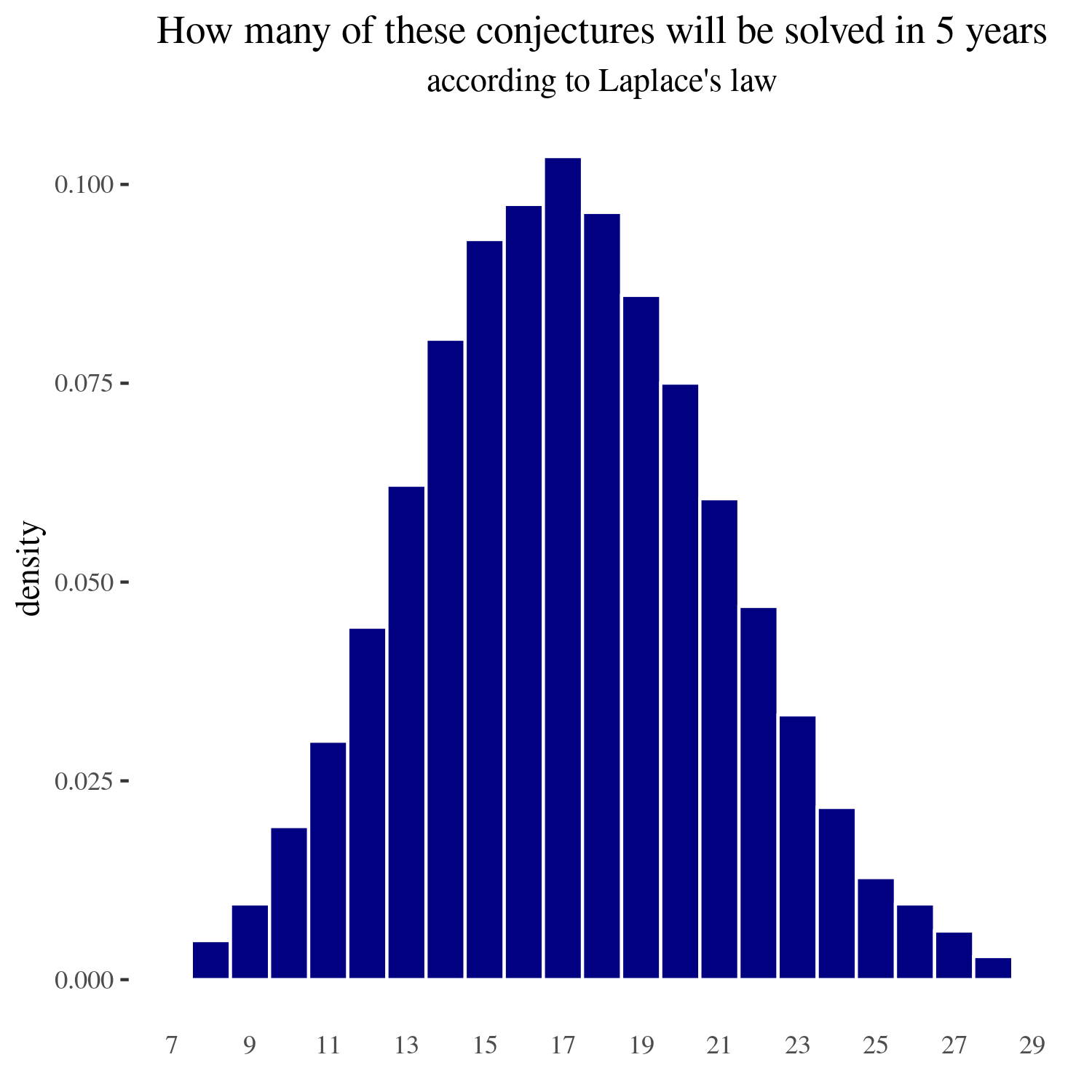

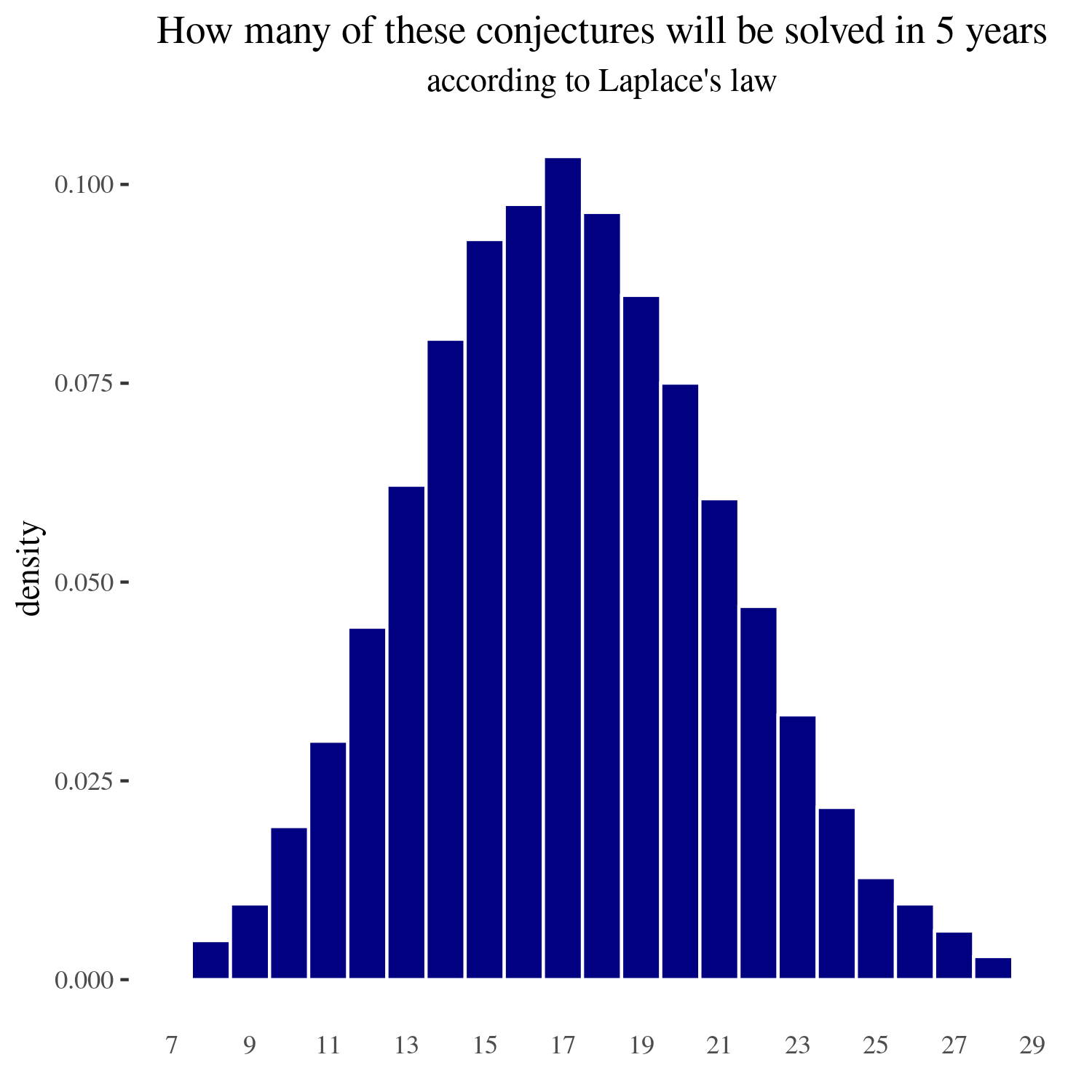

### For five years

-** **

+**

**

+** **

If we calculate the 90% and the 98% confidence intervals, these are respectively (11 to 24) and (9 to 27) problems solved in the next five years.

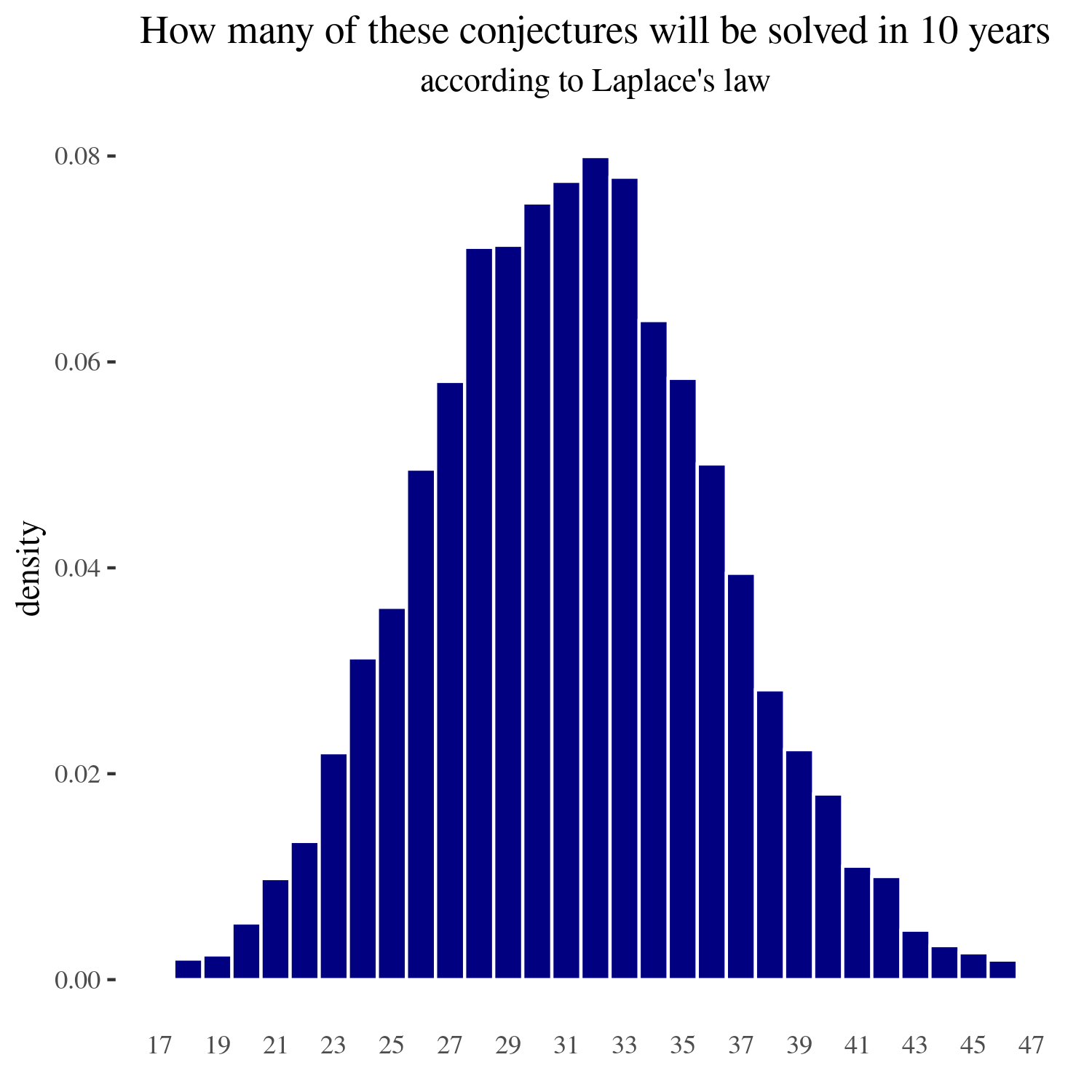

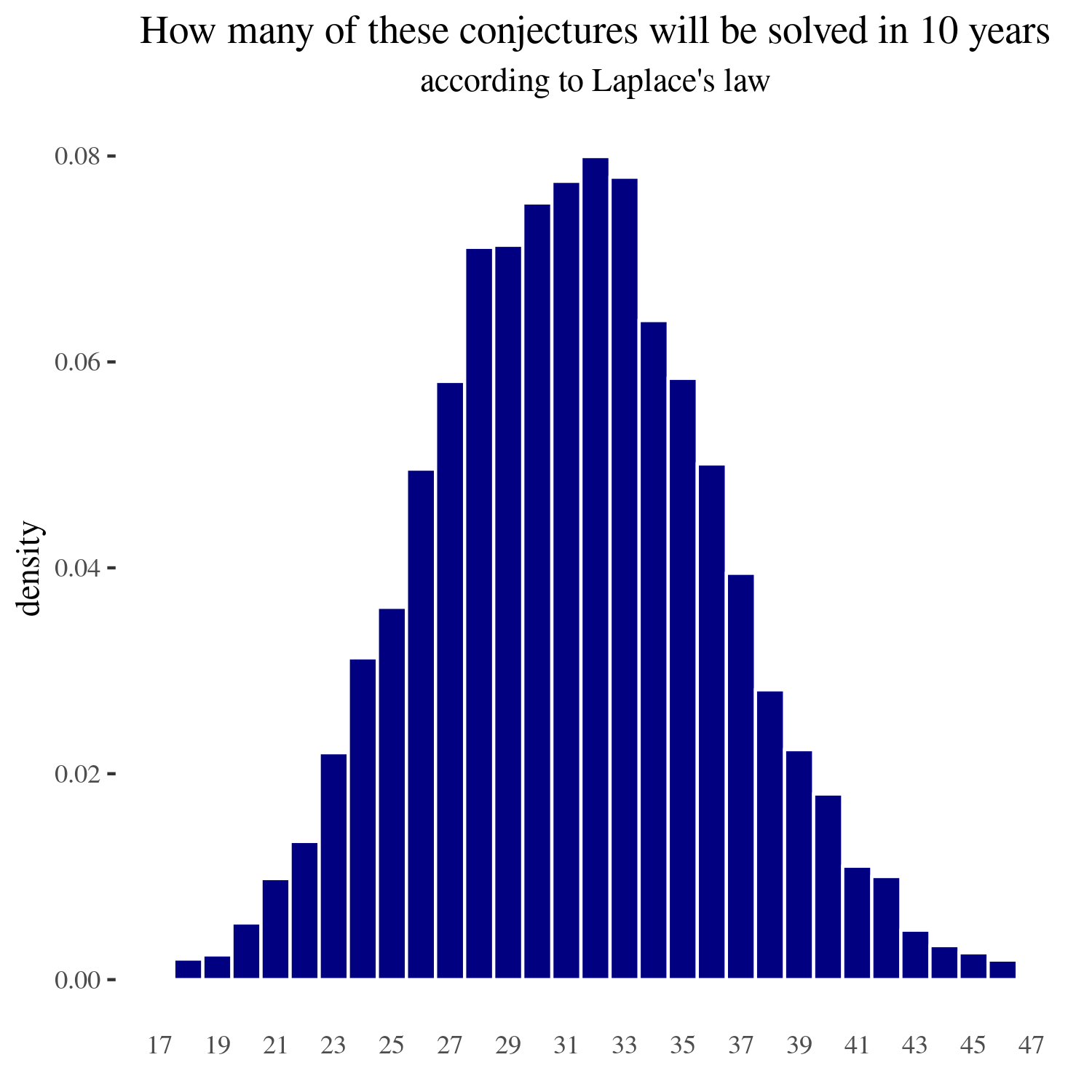

### For ten years

-** **

+**

**

+** **

If we calculate the 90% and the 98% confidence intervals, these are respectively (23 to 40) and (20 to 43) problems solved in the next ten years.

@@ -75,7 +75,7 @@ The reason why I didn’t do this myself is that step 2. would be fairly time in

## Acknowledgements

-** **

+**

**

+** **

This is a project of the [Quantified Uncertainty Research Institute](https://quantifieduncertainty.org/) (QURI). Thanks to Ozzie Gooen and Nics Olayres for giving comments and suggestions.

diff --git a/blog/2023/01/30/ea-no-longer-expanding-empire/index.md b/blog/2023/01/30/ea-no-longer-expanding-empire/index.md

index 7e859d4..faeb9ee 100644

--- a/blog/2023/01/30/ea-no-longer-expanding-empire/index.md

+++ b/blog/2023/01/30/ea-no-longer-expanding-empire/index.md

@@ -5,7 +5,7 @@ In early 2022, the Effective Altruism movement was triumphant. Sam Bankman-Fried

Now the situation looks different. Samo Burja has this interesting book on [Great Founder Theory][0], from which I’ve gotten the notion of an “expanding empire”. In an expanding empire, like a startup, there are new opportunities and land to conquer, and members can be rewarded with parts of the newly conquered land. The optimal strategy here is _unity_. EA in 2022 was just that, a united social movement playing together against the cruelty of nature and history.

-

+

*

Imagine the Spanish empire, without the empire.*

My sense is that the tendency for EA in 2023 and going forward will be less like that. Funding is now more limited, not only because the FTX empire collapsed, but also because the stock market collapsed, which means that Open Philanthropy—now the main funder once again—also has less money. With funding drying up, EA will now have to economize and prioritize between different causes. And with economizing in the background, internecine fights become more worth it, because the EA movement isn’t trying to grow the pie together, but rather each part will be trying to defend its share of the pie. There will be fewer shared offices all over the place and fewer regrantors to fund moonshots. More frugality. So EA will become more like a bureaucracy and less like a startup. You get the idea.
