reorg after imgur tweaks

This commit is contained in:

parent

056da744a8

commit

c7abc480fa

|

|

@ -1,163 +0,0 @@

|

||||||

<h1>Just-in-time Bayesianism</h1>

|

|

||||||

|

|

||||||

<h2>Summary</h2>

|

|

||||||

|

|

||||||

<p>I propose a variant of subjective Bayesianism that I think captures some important aspects of how humans<sup id="fnref:1"><a href="#fn:1" rel="footnote">1</a></sup> reason in practice given that Bayesian inference is normally too computationally expensive. I compare it to some theories in the philosophy of science and briefly mention possible alternatives. In conjunction with Laplace’s law, I claim that it might be able to explain some aspects of <a href="https://astralcodexten.substack.com/p/trapped-priors-as-a-basic-problem">trapped priors</a>.</p>

|

|

||||||

|

|

||||||

<h2>A motivating problem in subjective Bayesianism</h2>

|

|

||||||

|

|

||||||

<script src="https://polyfill.io/v3/polyfill.min.js?features=es6"></script>

|

|

||||||

|

|

||||||

|

|

||||||

<script id="MathJax-script" async src="https://cdn.jsdelivr.net/npm/mathjax@3/es5/tex-mml-chtml.js"></script>

|

|

||||||

|

|

||||||

|

|

||||||

<!-- Note: to correctly render this math, compile this markdown with

|

|

||||||

/usr/bin/markdown -f fencedcode -f ext -f footnote -f latex $1

|

|

||||||

where /usr/bin/markdown is the discount markdown binary

|

|

||||||

https://github.com/Orc/discount

|

|

||||||

http://www.pell.portland.or.us/~orc/Code/discount/

|

|

||||||

-->

|

|

||||||

|

|

||||||

|

|

||||||

<p>Bayesianism as an epistemology has elegance and parsimony, stemming from its inevitability as formalized by <a href="https://en.wikipedia.org/wiki/Cox's_theorem">Cox’s</a> <a href="https://nunosempere.com/blog/2022/08/31/on-cox-s-theorem-and-probabilistic-induction/">theorem</a>. For this reason, it has a certain magnetism as an epistemology.</p>

|

|

||||||

|

|

||||||

<p>However, consider the following example: a subjective Bayesian who has only two hypotheses about a coin:</p>

|

|

||||||

|

|

||||||

<p>\[

|

|

||||||

\begin{cases}

|

|

||||||

\text{it has bias } 2/3\text{ tails }1/3 \text{ heads }\\

|

|

||||||

\text{it has bias } 1/3\text{ tails }2/3 \text{ heads }

|

|

||||||

\end{cases}

|

|

||||||

\]</p>

|

|

||||||

|

|

||||||

<p>Now, as that subjective Bayesian observes a sequence of coin tosses, he might end up very confused. For instance, if he only observes tails, he will end up assigning almost all of his probability to the first hypothesis. Or if he observes 50% tails and 50% heads, he will end up assigning equal probability to both hypotheses. But in neither case are his hypotheses a good representation of reality.</p>
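<p>To make the two cases above concrete, here is a minimal Python sketch of the update over the two hypotheses. The 50/50 starting prior, the function name <code>posterior</code> and the toss strings are assumptions made for illustration; they are not part of the original post.</p>

```python
from fractions import Fraction

# Probability of tails under each of the two hypotheses from the text.
P_TAILS = {"H1 (2/3 tails)": Fraction(2, 3), "H2 (1/3 tails)": Fraction(1, 3)}


def posterior(tosses, prior=None):
    """Posterior over the two hypotheses after a string of 'T'/'H' tosses."""
    if prior is None:
        # Assumption: start from an even prior over both hypotheses.
        prior = {h: Fraction(1, 2) for h in P_TAILS}
    weights = {}
    for h, p_tails in P_TAILS.items():
        likelihood = Fraction(1)
        for toss in tosses:
            likelihood *= p_tails if toss == "T" else 1 - p_tails
        weights[h] = prior[h] * likelihood
    total = sum(weights.values())
    return {h: w / total for h, w in weights.items()}


all_tails = posterior("T" * 10)  # only tails observed
balanced = posterior("TH" * 5)   # 50% tails, 50% heads
```

<p>After ten tails the first hypothesis holds 1024/1025 of the probability, and after the balanced sequence both hypotheses sit at 1/2, even though neither describes a coin that always lands tails or a fair coin.</p>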

|

|

||||||

|

|

||||||

<p>Now, this could be fixed by adding more hypotheses, for instance some probability density to each possible bias. This would work for the example of a coin toss, but might not work for more complex real-life examples: representing many hypotheses about the war in Ukraine or about technological progress in their fullness would be too much for humans.<sup id="fnref:2"><a href="#fn:2" rel="footnote">2</a></sup></p>

|

|

||||||

|

|

||||||

<p><img src="https://i.imgur.com/vqc48uT.png" alt="" />

|

|

||||||

<strong>Original subjective Bayesianism</strong></p>

|

|

||||||

|

|

||||||

<p>So on the one hand, if our set of hypotheses is too narrow, we risk not incorporating a hypothesis that reflects the real world. But on the other hand, if we try to incorporate too many hypotheses, our mind explodes because it is too tiny. Whatever shall we do?</p>

|

|

||||||

|

|

||||||

<h2>Just-in-time Bayesianism by analogy to just-in-time compilation</h2>

|

|

||||||

|

|

||||||

<p><a href="https://en.wikipedia.org/wiki/Just-in-time_compilation">Just-in-time compilation</a> refers to a method of executing programs such that their instructions are translated to machine code not at the beginning, but rather as the program is executed.</p>

|

|

||||||

|

|

||||||

<p>By analogy, I define just-in-time Bayesianism as a variant of subjective Bayesianism where inference is initially performed over a limited number of hypotheses, but if and when these hypotheses fail to be sufficiently predictive of the world, more are searched for and past Bayesian inference is recomputed.</p>

|

|

||||||

|

|

||||||

<p><img src="https://i.imgur.com/bptVgcS.png" alt="" />

|

|

||||||

<strong>Just-in-time Bayesianism</strong></p>
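<p>The loop described above can be sketched in Python for the coin example. Everything specific here is an assumption chosen for illustration and not taken from the post: the trigger for "not sufficiently predictive" (the best hypothesis loses more than <code>tolerance</code> nats per toss against the observed frequency), the search step (adding midpoints between neighbouring biases), the uniform prior, and the function names.</p>

```python
import math


def log_likelihood(p_tails, n_tails, n_heads):
    """Log-probability of the observed counts for a coin with P(tails) = p_tails."""
    return n_tails * math.log(p_tails) + n_heads * math.log(1 - p_tails)


def posterior(hypotheses, n_tails, n_heads):
    """Posterior under a uniform prior, recomputed from all past data."""
    logs = [log_likelihood(p, n_tails, n_heads) for p in hypotheses]
    top = max(logs)
    weights = [math.exp(value - top) for value in logs]
    total = sum(weights)
    return [w / total for w in weights]


def poorly_predictive(hypotheses, n_tails, n_heads, tolerance=0.05, min_tosses=20):
    """Hypothetical trigger: the best hypothesis loses more than `tolerance`
    nats per toss against the observed frequency."""
    n = n_tails + n_heads
    if n < min_tosses:
        return False
    freq = min(max(n_tails / n, 1e-9), 1 - 1e-9)  # keep the logs finite
    best = max(log_likelihood(p, n_tails, n_heads) for p in hypotheses)
    return (log_likelihood(freq, n_tails, n_heads) - best) / n > tolerance


def expand(hypotheses):
    """Hypothetical search step: add the midpoints between neighbouring biases,
    treating 0 and 1 as outer neighbours."""
    points = [0.0] + sorted(hypotheses) + [1.0]
    midpoints = [(a + b) / 2 for a, b in zip(points, points[1:])]
    return sorted(set(hypotheses) | set(midpoints))


def jit_bayes(tosses, hypotheses=(1 / 3, 2 / 3), max_expansions=10):
    """Return the final hypothesis set and its posterior after all tosses."""
    hypotheses = list(hypotheses)
    n_tails = n_heads = 0
    expansions = 0
    for toss in tosses:
        if toss == "T":
            n_tails += 1
        else:
            n_heads += 1
        # Search for more hypotheses only when the current ones predict badly;
        # the posterior is then recomputed over all past tosses.
        while expansions < max_expansions and poorly_predictive(hypotheses, n_tails, n_heads):
            hypotheses = expand(hypotheses)
            expansions += 1
    return hypotheses, posterior(hypotheses, n_tails, n_heads)


def most_probable(tosses):
    """Bias with the highest posterior probability after running the JIT loop."""
    hypotheses, probs = jit_bayes(tosses)
    return max(zip(probs, hypotheses))[1]
```

<p>With a coin that lands tails two thirds of the time, the original two hypotheses are never expanded; with a balanced or all-tails sequence, new biases are added once the original pair predicts badly enough.</p>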

|

|

||||||

|

|

||||||

<p>I intuit that this method could be used to run a version of Solomonoff induction that converges to the correct hypothesis that describes a computable phenomenon in a finite (but still enormous) amount of time. More generally, I intuit that just-in-time Bayesianism will have some nice convergence guarantees.</p>

|

|

||||||

|

|

||||||

<h2>As this relates to the philosophy of science</h2>

|

|

||||||

|

|

||||||

<p>The <a href="https://en.wikipedia.org/wiki/Strong_programme">strong programme</a> in the sociology of science aims to explain science only with reference to the sociological conditions that bring it about. There are also various accounts of science which aim to faithfully describe how science is actually practiced.</p>

|

|

||||||

|

|

||||||

<p>Well, I’m more attracted to trying to explain the workings of science with reference to the ideal mechanism from which they fall short. And I think that just-in-time Bayesianism parsimoniously explains some aspects with reference to:</p>

|

|

||||||

|

|

||||||

<ol>

|

|

||||||

<li>Bayesianism as the optimal/rational procedure for assigning degrees of belief to statements.</li>

|

|

||||||

<li>necessary patches which result from the lack of infinite computational power.</li>

|

|

||||||

</ol>

|

|

||||||

|

|

||||||

|

|

||||||

<p>As a result, just-in-time Bayesianism not only does well in the domains in which normal Bayesianism does well:

|

|

||||||

- It smoothly processes the distinction between background knowledge and new revelatory evidence

|

|

||||||

- It grasps that both confirmatory and falsificatory evidence are important—which inductionism/confirmationism and naïve forms of falsificationism both fail at

|

|

||||||

- It parsimoniously dissolves the problem of induction: one never reaches certainty, and instead accumulates Bayesian evidence.</p>

|

|

||||||

|

|

||||||

<p>But it is also able to shed some light on some phenomena where alternative theories of science have traditionally fared better:</p>

|

|

||||||

|

|

||||||

<ul>

|

|

||||||

<li>It interprets the difference between scientific revolutions (where the paradigm changes) and normal science (where the implications of the paradigm are fleshed out) as a result of finite computational power</li>

|

|

||||||

<li>It does a bit better at explaining the problem of priors, where the priors are just the hypotheses that humanity has had enough computing power to generate.</li>

|

|

||||||

</ul>

|

|

||||||

|

|

||||||

|

|

||||||

<p>Though it is still not perfect</p>

|

|

||||||

|

|

||||||

<ul>

|

|

||||||

<li>the “problem of priors” is still not really dissolved to a nice degree of satisfaction.</li>

|

|

||||||

<li>the step of acquiring more hypotheses is not really explained, and it is also a feature of other philosophies of science, so it’s unclear that this is that much of a win for just-in-time Bayesianism.</li>

|

|

||||||

</ul>

|

|

||||||

|

|

||||||

|

|

||||||

<p>So anyways, in philosophy of science the main advantage of just-in-time Bayesianism is that it keeps some of the more compelling features of Bayesianism while also explaining some features that other theories in the philosophy of science capture.</p>

|

|

||||||

|

|

||||||

<h2>As it relates to ignoring small probabilities</h2>

|

|

||||||

|

|

||||||

<p><a href="https://philpapers.org/archive/KOSTPO-18.pdf">Kosonen 2022</a> explores a setup in which an agent ignores small probabilities of vast value, in the context of trying to deal with the “fanaticism” of various ethical theories.</p>

|

|

||||||

|

|

||||||

<p>Here is my perspective on this dilemma:</p>

|

|

||||||

|

|

||||||

<ul>

|

|

||||||

<li>On the one hand, neglecting small probabilities has the benefit of making expected calculations computationally tractable: if we didn’t ignore at least some probabilities, we would never finish these calculations.</li>

|

|

||||||

<li>But on the other hand, the various available methods for ignoring small probabilities are not robust. For example, they are not going to be robust to situations in which these probabilities shift (see p. 181, “The Independence Money Pump”, <a href="https://philpapers.org/archive/KOSTPO-18.pdf">Kosonen 2022</a>)

|

|

||||||

|

|

||||||

<ul>

|

|

||||||

<li>For example, one could have been very sure that the Sun orbits the Earth, which could have some theological and moral implications. In fact, one could be so sure that one could assign some very small (if not infinitesimal) probability to the Earth orbiting the Sun instead. But if one ignores very small probabilities ex-ante, one might not be able to update in the face of new evidence.</li>

|

|

||||||

</ul>

|

|

||||||

</li>

|

|

||||||

</ul>

|

|

||||||

|

|

||||||

|

|

||||||

<p>Just-in-time Bayesianism might solve this problem by indeed ignoring small probabilities at the beginning, but expanding the search for hypotheses if current hypotheses aren’t very predictive of the world we observe.</p>

|

|

||||||

|

|

||||||

<h2>Some other related theories and alternatives.</h2>

|

|

||||||

|

|

||||||

<ul>

|

|

||||||

<li>Non-Bayesian epistemology: e.g., falsificationism, positivism, etc.</li>

|

|

||||||

<li><a href="https://www.alignmentforum.org/posts/Zi7nmuSmBFbQWgFBa/infra-bayesianism-unwrapped">Infra-Bayesianism</a>, a theory of Bayesianism which, amongst other things, is robust to adversaries filtering evidence</li>

|

|

||||||

<li><a href="https://intelligence.org/files/LogicalInduction.pdf">Logical induction</a>, which also seems uncomputable on account of considering all hypotheses, but which refines itself in finite time</li>

|

|

||||||

<li>Predictive processing, in which an agent changes the world so that it conforms to its internal model.</li>

|

|

||||||

<li>etc.</li>

|

|

||||||

</ul>

|

|

||||||

|

|

||||||

|

|

||||||

<h2>As this relates to the problem of trapped priors</h2>

|

|

||||||

|

|

||||||

<p>In <a href="https://astralcodexten.substack.com/p/trapped-priors-as-a-basic-problem">Trapped Priors As A Basic Problem Of Rationality</a>, Scott Alexander considers the case of a man who was previously unafraid of dogs, and then had a scary experience related to a dog; for our purposes, imagine that he was bitten by a dog.</p>

|

|

||||||

|

|

||||||

<p>Just-in-time Bayesianism would explain this as follows.</p>

|

|

||||||

|

|

||||||

<ul>

|

|

||||||

<li>At the beginning, the man had just one hypothesis, which is “dogs are fine”</li>

|

|

||||||

<li>The man is bitten by a dog. Society claims that this was a freak accident, but this doesn’t explain the man’s experiences. So the man starts a search for new hypotheses</li>

|

|

||||||

<li>After the search, the new hypotheses and their probabilities might be something like:</li>

|

|

||||||

</ul>

|

|

||||||

|

|

||||||

|

|

||||||

<p>\[

|

|

||||||

\begin{cases}

|

|

||||||

\text{Dogs are fine, this was just a freak accident }\\

|

|

||||||

\text{Society is lying. Dogs are not fine, but rather they bite with a frequency of } \frac{2}{n+2}\text{, where n is the number of total encounters the man has had}

|

|

||||||

\end{cases}

|

|

||||||

\]</p>

|

|

||||||

|

|

||||||

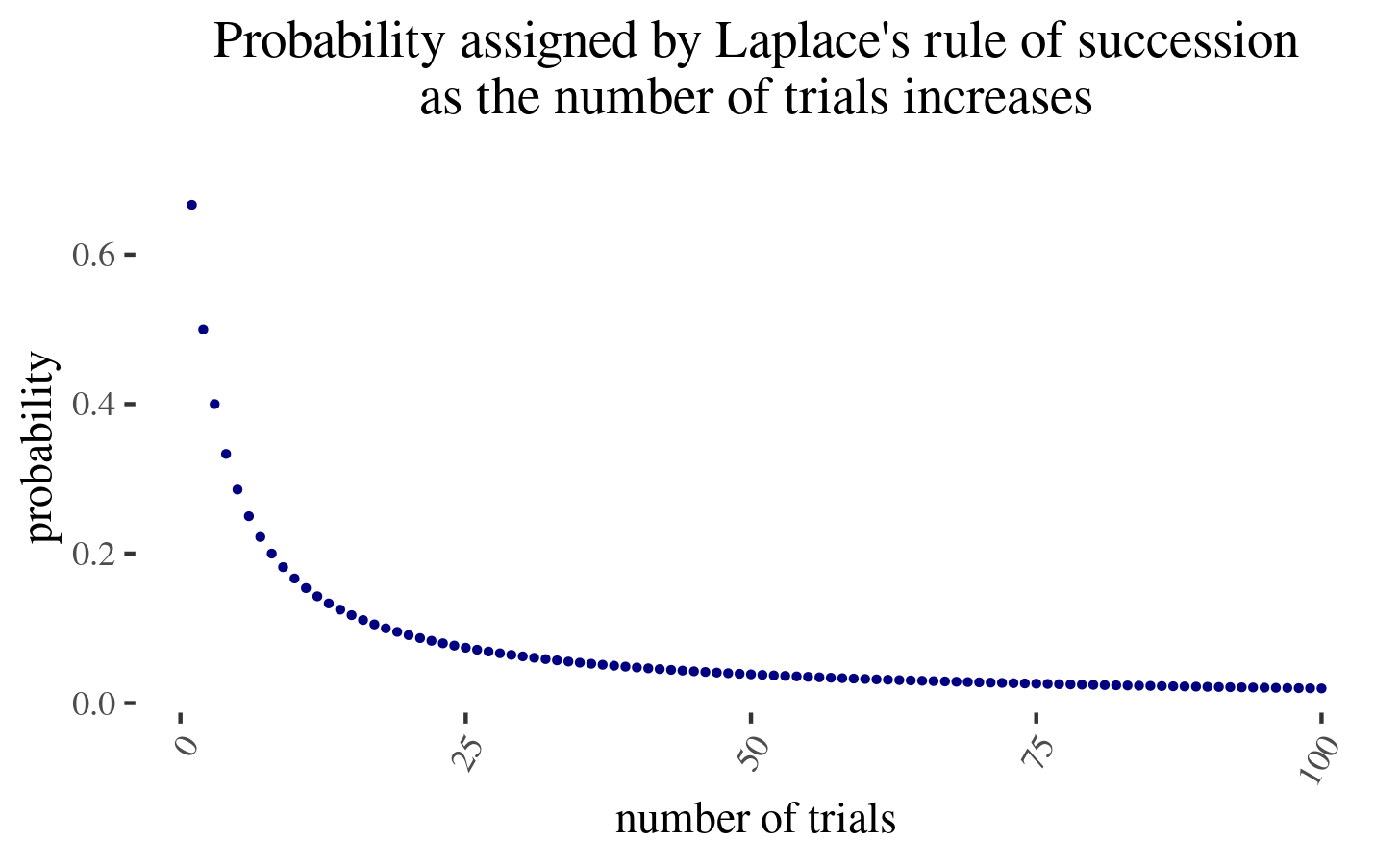

<p>The second estimate is the estimate produced by <a href="https://en.wikipedia.org/wiki/Rule_of_succession">Laplace’s law</a>, an instance of Bayesian reasoning given an ignorance prior, given one “success” (a dog biting a human) in \(n\) total encounters, the remaining \(n - 1\) being “failures” (a dog not biting a human).</p>

|

|

||||||

|

|

||||||

<p>Now, because the first hypothesis assigns very low probability to what the man has experienced, most of the probability goes to the second hypothesis.</p>

|

|

||||||

|

|

||||||

<p>But now, with more and more encounters, the bite probability assigned by the second hypothesis will go as \(\frac{2}{n+2}\), where \(n\) is the number of times the man interacts with a dog. But this goes down very slowly:</p>

|

|

||||||

|

|

||||||

<p><img src="https://imgur.com/nIbnexh.png" alt="" /></p>

|

|

||||||

|

|

||||||

<p>In particular, for \(p(n) =\frac{2}{n+2}\) to halve, you need to go through around as many further interactions with a dog as you have previously had, all without a bite. But note that this in expectation produces another dog bite! Hence the trapped priors.</p>

|

|

||||||

|

|

||||||

<h2>Conclusion</h2>

|

|

||||||

|

|

||||||

<p>In conclusion, I sketched a simple variation of subjective Bayesianism that is able to deal with limited computing power. I find that it sheds some light on various fields, and I considered cases in the philosophy of science, the discounting of small probabilities in moral philosophy, and the applied rationality community.</p>

|

|

||||||

<div class="footnotes">

|

|

||||||

<hr/>

|

|

||||||

<ol>

|

|

||||||

<li id="fn:1">

|

|

||||||

I think that the model has more explanatory power when applied to groups of humans that can collectively<a href="#fnref:1" rev="footnote">↩</a></li>

|

|

||||||

<li id="fn:2">

|

|

||||||

In the limit, we would arrive at Solomonoff induction, a model of perfect inductive inference that assigns a probability to all computable hypotheses. <a href="http://www.vetta.org/documents/legg-1996-solomonoff-induction.pdf">Here</a> is an explanation of Solomonoff induction<sup id="fnref:3"><a href="#fn:3" rel="footnote">3</a></sup>.<a href="#fnref:2" rev="footnote">↩</a></li>

|

|

||||||

<li id="fn:3">

|

|

||||||

The author appears to be the <a href="https://en.wikipedia.org/wiki/Shane_Legg">cofounder of DeepMind</a>.<a href="#fnref:3" rev="footnote">↩</a></li>

|

|

||||||

</ol>

|

|

||||||

</div>

|

|

||||||

|

|

||||||

|

|

@ -30,7 +30,7 @@ Now, as that subjective Bayesian observes a sequence of coin tosses, he might en

|

||||||

|

|

||||||

Now, this could be fixed by adding more hypotheses, for instance some probability density to each possible bias. This would work for the example of a coin toss, but might not work for more complex real-life examples: representing many hypotheses about the war in Ukraine or about technological progress in their fullness would be too much for humans.[^2]

|

Now, this could be fixed by adding more hypotheses, for instance some probability density to each possible bias. This would work for the example of a coin toss, but might not work for more complex real-life examples: representing many hypotheses about the war in Ukraine or about technological progress in their fullness would be too much for humans.[^2]

|

||||||

|

|

||||||

<img src="https://i.imgur.com/vqc48uT.png" alt="pictorial depiction of the Bayesian algorithm" style="display: block; margin-left: auto; margin-right: auto; width: 30%;" >

|

<img src="https://images.nunosempere.com/blog/2023/02/04/just-in-time-bayesianism/bayes-1.png" alt="pictorial depiction of the Bayesian algorithm" style="display: block; margin-left: auto; margin-right: auto; width: 30%;" >

|

||||||

|

|

||||||

So on the one hand, if our set of hypotheses is too narrow, we risk not incorporating a hypothesis that reflects the real world. But on the other hand, if we try to incorporate too many hypotheses, our mind explodes because it is too tiny. Whatever shall we do?

|

So on the one hand, if our set of hypotheses is too narrow, we risk not incorporating a hypothesis that reflects the real world. But on the other hand, if we try to incorporate too many hypotheses, our mind explodes because it is too tiny. Whatever shall we do?

|

||||||

|

|

||||||

|

|

@ -40,7 +40,7 @@ So on the one hand, if our set of hypothesis is too narrow, we risk not incorpor

|

||||||

|

|

||||||

By analogy, I define just-in-time Bayesianism as a variant of subjective Bayesianism where inference is initially performed over a limited number of hypotheses, but if and when these hypotheses fail to be sufficiently predictive of the world, more are searched for and past Bayesian inference is recomputed. This would look as follows:

|

By analogy, I define just-in-time Bayesianism as a variant of subjective Bayesianism where inference is initially performed over a limited number of hypotheses, but if and when these hypotheses fail to be sufficiently predictive of the world, more are searched for and past Bayesian inference is recomputed. This would look as follows:

|

||||||

|

|

||||||

<img src="https://i.imgur.com/CwLA5EG.png" alt="pictorial depiction of the JIT Bayesian algorithm" style="display: block; margin-left: auto; margin-right: auto; width: 50%;" >

|

<img src="https://images.nunosempere.com/blog/2023/02/04/just-in-time-bayesianism/bayes-jit.png" alt="pictorial depiction of the JIT Bayesian algorithm" style="display: block; margin-left: auto; margin-right: auto; width: 50%;" >

|

||||||

|

|

||||||

I intuit that this method could be used to run a version of Solomonoff induction that converges to the correct hypothesis that describes a computable phenomenon in a finite (but still enormous) amount of time. More generally, I intuit that just-in-time Bayesianism will have some nice convergence guarantees.

|

I intuit that this method could be used to run a version of Solomonoff induction that converges to the correct hypothesis that describes a computable phenomenon in a finite (but still enormous) amount of time. More generally, I intuit that just-in-time Bayesianism will have some nice convergence guarantees.

|

||||||

|

|

||||||

|

|

@ -84,7 +84,7 @@ Now, because the first hypothesis assigns very low probability to what the man h

|

||||||

|

|

||||||

But now, with more and more encounters, the bite probability assigned by the second hypothesis will go as \(\frac{2}{n+2}\), where \(n\) is the number of times the man interacts with a dog. But this goes down very slowly:

|

But now, with more and more encounters, the bite probability assigned by the second hypothesis will go as \(\frac{2}{n+2}\), where \(n\) is the number of times the man interacts with a dog. But this goes down very slowly:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

In particular, you need to double the amount of interactions with a dog and then condition on them going positively (no bites) for \(p(n) =\frac{2}{n+2}\) to halve. But note that this in expectation approximately produces another two dog bites[^4]! Hence the optimal move might be to avoid encountering new evidence (because the chance of another dog bite is now too large), hence the trapped priors.

|

In particular, you need to double the amount of interactions with a dog and then condition on them going positively (no bites) for \(p(n) =\frac{2}{n+2}\) to halve. But note that this in expectation approximately produces another two dog bites[^4]! Hence the optimal move might be to avoid encountering new evidence (because the chance of another dog bite is now too large), hence the trapped priors.
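The halving claim can be checked with a short Python sketch. The function names and the example value n = 100 are assumptions for illustration. The sketch computes the expected number of bites in two ways: holding the bite rate fixed at its current value, and letting it fall after each bite-free encounter.

```python
from fractions import Fraction


def bite_rate(n):
    """Bite frequency from the text: 2 / (n + 2) after n encounters."""
    return Fraction(2, n + 2)


def encounters_to_halve(n):
    """Extra bite-free encounters needed for the estimate to halve."""
    target = bite_rate(n) / 2
    m = n
    while bite_rate(m) > target:
        m += 1
    return m - n


def expected_bites(n, extra, fixed_rate=True):
    """Expected bites over `extra` further encounters, as judged by the
    second hypothesis (hypothetical helper)."""
    if fixed_rate:
        # Rate held at its current value for the whole stretch.
        return bite_rate(n) * extra
    # Rate lowered after every bite-free encounter.
    return sum(bite_rate(k) for k in range(n, n + extra))


extra = encounters_to_halve(100)
fixed = expected_bites(100, extra)
updating = expected_bites(100, extra, fixed_rate=False)
```

Starting from n = 100, the estimate halves after n + 2 = 102 further bite-free encounters. At the current rate that stretch carries exactly 2 expected bites; if the rate is allowed to fall along the way, the figure is somewhat lower but still above one.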

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -16,7 +16,7 @@ At the end of 2023, will Vladimir Putin be President of Russia? [Yes/No]

|

||||||

|

|

||||||

Then we can compare the relative probabilities of completion to the “Yes,” “yes,” “No” and “no” tokens. This requires a bit of care. Note that we are not making the same query 100 times and looking at the frequencies, but rather asking for the probabilities directly:

|

Then we can compare the relative probabilities of completion to the “Yes,” “yes,” “No” and “no” tokens. This requires a bit of care. Note that we are not making the same query 100 times and looking at the frequencies, but rather asking for the probabilities directly:

|

||||||

|

|

||||||

<img src="https://i.imgur.com/oNcbTGR.png" class='.img-medium-center'>

|

<img src="https://images.nunosempere.com/blog/2023/02/09/straightforwardly-eliciting-probabilities-from-gpt-3/8068e241ee54d2338d0c92616c6b688a9bf5927a.png" class='.img-medium-center'>

|

||||||

|

|

||||||

You can see a version of this strategy implemented [here](https://github.com/quantified-uncertainty/gpt-predictions/blob/master/src/prediction-methods/predict-logprobs.js).

|

You can see a version of this strategy implemented [here](https://github.com/quantified-uncertainty/gpt-predictions/blob/master/src/prediction-methods/predict-logprobs.js).

|

||||||

|

|

||||||

|

|

@ -97,13 +97,13 @@ You can see the first two strategies applied to SlateStarCodex in [this Google

|

||||||

|

|

||||||

Overall, the probabilities outputted by GPT appear to be quite mediocre as of 2023-02-06, and so I abandoned further tweaks.

|

Overall, the probabilities outputted by GPT appear to be quite mediocre as of 2023-02-06, and so I abandoned further tweaks.

|

||||||

|

|

||||||

<img src="https://i.imgur.com/jNrnGdU.png" class='.img-medium-center'>

|

<img src="https://images.nunosempere.com/blog/2023/02/09/straightforwardly-eliciting-probabilities-from-gpt-3/ce57a6365ad20e549c52913ee9f20cc866d0cdb5.png" class='.img-medium-center'>

|

||||||

|

|

||||||

In the above image, I think that we are in the first orange region, where the returns to fine-tuning and tweaking just aren’t that exciting. Though it is also possible that having tweaks and tricks ready might help us identify that the curve is turning steeper a bit earlier.

|

In the above image, I think that we are in the first orange region, where the returns to fine-tuning and tweaking just aren’t that exciting. Though it is also possible that having tweaks and tricks ready might help us identify that the curve is turning steeper a bit earlier.

|

||||||

|

|

||||||

### Acknowledgements

|

### Acknowledgements

|

||||||

|

|

||||||

<img src="https://i.imgur.com/3uQgbcw.png" style="width: 20%;">

|

<img src="https://images.nunosempere.com/quri/logo.png" style="width: 20%;">

|

||||||

<br>

|

<br>

|

||||||

This is a project of the [Quantified Uncertainty Research Institute](https://quantifieduncertainty.org/). Thanks to Ozzie Gooen and Adam Papineau for comments and suggestions.

|

This is a project of the [Quantified Uncertainty Research Institute](https://quantifieduncertainty.org/). Thanks to Ozzie Gooen and Adam Papineau for comments and suggestions.

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -1,229 +0,0 @@

|

||||||

<h1>A Bayesian Adjustment to Rethink Priorities' Welfare Range Estimates</h1>

|

|

||||||

|

|

||||||

<p>I was meditating on <a href="https://forum.effectivealtruism.org/posts/Qk3hd6PrFManj8K6o/rethink-priorities-welfare-range-estimates">Rethink Priorities’ Welfare Range Estimates</a>:</p>

|

|

||||||

|

|

||||||

<p><img src="https://i.imgur.com/zJ2JqXE.jpg" alt="" /></p>

|

|

||||||

|

|

||||||

<p>Something didn’t feel right. As I was meditating on that feeling, suddenly, an apparition of E. T. Jaynes manifested itself, and exclaimed:</p>

|

|

||||||

|

|

||||||

<p><img src="https://i.imgur.com/yUAG6oD.png" alt="" /></p>

|

|

||||||

|

|

||||||

<p>The way was clear. I should:</p>

|

|

||||||

|

|

||||||

<ol>

|

|

||||||

<li>Come up with a prior over welfare estimates</li>

|

|

||||||

<li>Come up with an estimate of how likely Rethink Priorities’ estimates are at each point in the prior</li>

|

|

||||||

<li>Make a Bayesian update</li>

|

|

||||||

</ol>

|

|

||||||

|

|

||||||

|

|

||||||

<h3>Three shortcuts on account of my laziness</h3>

|

|

||||||

|

|

||||||

<p>To lighten my load, I took certain methodological shortcuts. First, I decided to use probabilities, rather than probability densities.</p>

|

|

||||||

|

|

||||||

<p>Working with probabilities instead of densities means that I don’t have to take limits, which adds some clarity. For instance, I can deduce Bayes’ theorem from:</p>

|

|

||||||

|

|

||||||

<script src="https://polyfill.io/v3/polyfill.min.js?features=es6"></script>

|

|

||||||

|

|

||||||

|

|

||||||

<script id="MathJax-script" async src="https://cdn.jsdelivr.net/npm/mathjax@3/es5/tex-mml-chtml.js"></script>

|

|

||||||

|

|

||||||

|

|

||||||

<!-- Note: to correctly render this math, compile this markdown with

|

|

||||||

/usr/bin/markdown -f fencedcode -f ext -f footnote -f latex $1

|

|

||||||

where /usr/bin/markdown is the discount markdown binary

|

|

||||||

https://github.com/Orc/discount

|

|

||||||

http://www.pell.portland.or.us/~orc/Code/discount/

|

|

||||||

-->

|

|

||||||

|

|

||||||

|

|

||||||

<p>$$ P(A \& B) = P(A) \cdot P(\text{B given A}) $$

|

|

||||||

$$ P(A \& B) = P(B) \cdot P(\text{A given B}) $$</p>

|

|

||||||

|

|

||||||

<p>and therefore</p>

|

|

||||||

|

|

||||||

<p>$$ P(A) \cdot P(\text{B given A}) = P(B) \cdot P(\text{A given B}) $$</p>

|

|

||||||

|

|

||||||

<p>$$ P(\text{B given A}) = P(B) \cdot \frac{P(\text{A given B})}{P(A) } $$</p>

|

|

||||||

|

|

||||||

<p>But for probability densities, is it the case that</p>

|

|

||||||

|

|

||||||

<p>$$ d(A \& B) = d(A) \cdot d(\text{B given A})\text{?} $$</p>

|

|

||||||

|

|

||||||

<p>Well, yes, but I have to think about limits, wherein, as everyone knows, lie the workings of the devil. So I decided to use a probability distribution over 50,000 possible welfare points, rather than a probability density distribution.</p>

|

|

||||||

|

|

||||||

<p>The second shortcut I am taking is to interpret Rethink Priorities’ estimates as estimates of the relative value of humans and each species of animal, that is, to take their estimates as saying “a human is X times more valuable than a pig/chicken/shrimp/etc”. But RP explicitly notes that they are not that: they are just estimates of the range that welfare can take, from the worst experience to the best experience. You’d still have to adjust according to what proportion of that range is experienced, e.g., according to how much suffering a chicken in a factory farm experiences as a proportion of its maximum suffering.</p>

|

|

||||||

|

|

||||||

<p>And thirdly, I decided to do the Bayesian estimate based on RP’s point estimates, rather than on their 90% confidence intervals. I do feel bad about this, because I’ve been pushing for more distributions, so it feels a shame to ignore RP’s confidence intervals, which could be fitted into e.g., a beta or a lognormal distribution. At the end of this post, I revisit this shortcut.</p>

|

|

||||||

|

|

||||||

<p>So in short, my three shortcuts are:</p>

|

|

||||||

|

|

||||||

<ol>

|

|

||||||

<li>use probabilities instead of probability densities</li>

|

|

||||||

<li>wrongly interpret RP’s estimate as giving the tradeoff value between humans and animals, rather than their welfare ranges.</li>

|

|

||||||

<li>use RP’s point estimates rather than fitting a distribution to their 90% confidence intervals.</li>

|

|

||||||

</ol>

|

|

||||||

|

|

||||||

|

|

||||||

<p>These shortcuts mean that you can’t literally take this Bayesian estimate seriously. Rather, it’s an illustration of how a Bayesian adjustment would work. If you are making an important decision which depends on these estimates (e.g., if you are Open Philanthropy or Animal Charity Evaluators and trying to estimate the value of charities corresponding to the amount of animal suffering they prevent), then you should probably commission a complete version of this analysis.</p>

|

|

||||||

|

|

||||||

<h3>Focusing on chickens</h3>

|

|

||||||

|

|

||||||

<p>For the rest of the post, I will focus on chickens. Updates for other animals should be similar, and focusing on one example lightens my load.</p>

|

|

||||||

|

|

||||||

<p>Coming back to Rethink Priorities' estimates:</p>

|

|

||||||

|

|

||||||

<p><img src="https://i.imgur.com/iExw3mP.jpg" alt="" /></p>

|

|

||||||

|

|

||||||

<p>Chickens have a welfare range of 0.332, i.e., 33.2% as wide as that of humans, according to RP’s estimate.</p>

|

|

||||||

|

|

||||||

<p>Remember that RP has wide confidence intervals here, so that number in isolation produces a somewhat misleading impression:</p>

|

|

||||||

|

|

||||||

<p><img src="https://i.imgur.com/nNbYFOp.png" alt="" /></p>

|

|

||||||

|

|

||||||

<h3>Constructing a prior</h3>

|

|

||||||

|

|

||||||

<p>So, when I think about how I disvalue chickens' suffering in comparison to how I value human flourishing, moment to moment, I come to something like the following:</p>

|

|

||||||

|

|

||||||

<ul>

|

|

||||||

<li>a 40% chance that I don’t care about chicken suffering at all</li>

|

|

||||||

<li>a 60% chance that I care about chicken suffering some relatively small amount, e.g., that I care about a chicken suffering \(\frac{1}{5000} \text{ to } \frac{1}{100}\)th as much as I care about a human being content, moment for moment</li>

|

|

||||||

</ul>

|

|

||||||

|

|

||||||

|

|

||||||

<p>To those specifications, my prior thus looks like this:</p>

|

|

||||||

|

|

||||||

<p><img src="https://i.imgur.com/cQKRZBb.png" alt="Prior over human vs chicken relative values" />

|

|

||||||

<strong>The lone red point is the probability I assign to 0 value</strong></p>

|

|

||||||

|

|

||||||

<p>Zooming in on the blue points, they look like this:</p>

|

|

||||||

|

|

||||||

<p><img src="https://i.imgur.com/1LQwt2A.png" alt="Prior over human vs chicken relative values -- zoomed in" /></p>

|

|

||||||

|

|

||||||

<p>As I mentioned before, note that I am not using a probability density, but rather lots of points. In particular, for this simulation, I’m using 50,000 points. This will become relevant later.</p>
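<p>A discrete prior of this kind could be built roughly as follows. This is a sketch under stated assumptions, not the code behind the plots: the grid is assumed to be 50,000 evenly spaced values from 0 to 1, the 60% is assumed to be spread uniformly over the grid values between 1/5000 and 1/100, and <code>build_prior</code> is a hypothetical name.</p>

```python
N_POINTS = 50_000  # number of welfare points, as in the text


def build_prior(n_points=N_POINTS, p_zero=0.4, low=1 / 5000, high=1 / 100):
    """Return (grid, prior), where prior[i] is the probability of grid[i]."""
    # Assumption: evenly spaced welfare values from 0 to 1 inclusive.
    grid = [i / (n_points - 1) for i in range(n_points)]
    in_range = [i for i, x in enumerate(grid) if low <= x <= high]
    prior = [0.0] * n_points
    prior[0] = p_zero  # the lone point at 0: "I don't care at all"
    for i in in_range:
        # Assumption: the remaining mass is uniform over the small-value range.
        prior[i] = (1 - p_zero) / len(in_range)
    return grid, prior


grid, prior = build_prior()
```

<p>Under these assumptions only a few hundred of the 50,000 points carry any prior probability, which is why the zoomed-in plot covers such a narrow band.</p>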

|

|

||||||

|

|

||||||

<h3>Constructing the Bayes factor</h3>

|

|

||||||

|

|

||||||

<p>Now, by \(x\) let me represent the point “chickens are worth \( x \) times as much as humans”, and by \(h\) let me represent “Rethink Priorities' investigation estimates that chickens are worth \(h\) times as much as humans”.</p>

|

|

||||||

|

|

||||||

<p>Per Bayes' theorem:</p>

|

|

||||||

|

|

||||||

<p>$$ P(\text{x given h}) = P(x) \cdot \frac{P(\text{h given x})}{P(h) } $$</p>

|

|

||||||

|

|

||||||

<p>As a brief note, I’ve been writing “\( P( \text{A given B})\)” to make this more accessible to some readers. But in what follows I’ll use the normal notation “\( P(A|B) \)”, to mean the same thing, i.e., the probability of A given that we know that B is the case.</p>

|

|

||||||

|

|

||||||

<p>So now, I want to estimate \( P(\text{h given x}) \), or \( P(\text{h | x})\). I will divide this estimate in two parts, conditioning on RP’s research having gone wrong (\(W\)), and on it having not gone wrong (\( \overline{W} \)):</p>

|

|

||||||

|

|

||||||

<p>$$ P(h|x) = P(h | xW) \cdot P(W) + P(h | x \overline{W}) \cdot P(\overline{W}) $$

|

|

||||||

$$ P(h|x) = P(h | xW) \cdot P(W) + P(h | x \overline{W}) \cdot (1-P(W)) $$</p>

|

|

||||||

|

|

||||||

<p>What’s the prior probability that RP’s research has gone wrong? I don’t know, it’s a tricky domain with a fair number of moving pieces. On the other hand, RP is generally competent. I’m going to say 50%. Note that this is the prior probability, before seeing the output of the research. Ideally I’d have prerecorded this.</p>

|

|

||||||

|

|

||||||

<p>What’s \( P(h | xW) \), the probability of getting \( h \) if RP’s research has gone wrong? Well, I’m using 50,000 possible welfare values, so \( \frac{1}{50000} \)</p>


<p>Conversely, what is \( P(h | x\overline{W} ) \), the probability of getting \( h \) if RP’s research has gone right? Well, this depends on how far away \( h \) is from \( x \).</p>


<ul>
<li>I’m going to say that if RP’s research had gone right, then \( h \) should be within one order of magnitude to either side of \( x \). If there are \( n(x) \) welfare values within one order of magnitude of \(x\), and \(h\) is amongst them, then \(P(h|x\overline{W}) = \frac{1}{n(x)}\).</li>
<li>Otherwise, if \( h \) is far away from \( x \), and we are conditioning both on \( x \) being the correct moral value and on RP’s research being correct, then \(P(h | x\overline{W}) \approx 0\).</li>
</ul>
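<p>The two cases above can be sketched in code. This is a minimal illustration rather than the script behind the plots: the function name <code>likelihood_h_given_x</code> and the grid of 50,000 evenly spaced values on [0, 1] are assumptions made for the example.</p>

```python
from bisect import bisect_left, bisect_right

def likelihood_h_given_x(xs, h, p_wrong=0.5):
    """Return P(h | x) for each x in the sorted grid xs."""
    n = len(xs)
    out = []
    for x in xs:
        # Research went wrong: h is uniform over all n grid values.
        p_if_wrong = 1.0 / n
        # Research went right: h is uniform over the n(x) grid values
        # within one order of magnitude of x, and impossible elsewhere.
        lo, hi = x / 10, x * 10
        n_x = bisect_right(xs, hi) - bisect_left(xs, lo)
        p_if_right = 1.0 / n_x if lo <= h <= hi else 0.0
        out.append(p_if_wrong * p_wrong + p_if_right * (1 - p_wrong))
    return out

# Assumed grid (not taken from the post): 50,000 evenly spaced values on [0, 1].
N = 50_000
xs = [i / (N - 1) for i in range(N)]
lik = likelihood_h_given_x(xs, h=0.332)
```

<p>On this assumed grid, every \( x \) below 0.0332 only receives the “research went wrong” term, \( 0.5 \cdot \frac{1}{50000} = 10^{-5} \), while values of \( x \) within one order of magnitude of 0.332 receive an extra \( \frac{0.5}{n(x)} \).</p>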


<p>With this, we can construct \( P(h | x) \). It looks as follows:</p>


<p><img src="https://i.imgur.com/ALZ8SO4.png" alt="probability of h conditional on x, for various xs" /></p>


<p>The wonky increase at the end is because those points don’t have as many other points within one order of magnitude of their position. And the sudden drop-off is at 0.0332, below which points are more than one order of magnitude away from the 0.332 estimate. To avoid this, I could have used a function smoother than “one order of magnitude away”, and I could have used a finer-grained mesh, not just 50k points evenly distributed. But I don’t think this ends up mattering much.</p>


<p>Now, to complete the <del>death star</del> Bayesian update, we just need \( P(h) \). We can easily get it by summing over all values of \( x \):</p>


<p>$$ P(h) = \sum_x P(h | x) \cdot P(x) = 1.005309 \cdot 10^{-5} $$</p>


<p>That is, our original probability that RP would end up with an estimate of 0.332, as opposed to any of the other 50,000 values we are modelling, was \( \approx 1.005309\cdot 10^{-5} \). This would be a neat point to sanity check.</p>
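<p>One possible sanity check, sketched here under the same assumptions as above (in particular that \( P(W) = 0.5 \) does not depend on \( x \)), is to split \( P(h) \) into its two branches:</p>

<p>$$ P(h) = P(W) \cdot \frac{1}{50000} + P(\overline{W}) \cdot \sum_x P(x) \cdot P(h | x\overline{W}) = 10^{-5} + 0.5 \cdot \sum_x P(x) \cdot P(h | x\overline{W}) $$</p>

<p>So \( P(h) \) can never fall below \( 10^{-5} \), and the reported value of \( 1.005309 \cdot 10^{-5} \) implies that the remaining sum is about \( 1.06 \cdot 10^{-7} \). Almost all of the probability of seeing this particular estimate comes from the “research went wrong” branch.</p>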


<h3>Applying the Bayesian update</h3>


<p>With \(P(x)\) and \( \frac{P(h|x)}{P(h)} \) in hand, we can now construct \( P(x|h) \), and it looks as follows:</p>
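<p>For readers who prefer code, the update itself is a pointwise multiplication followed by a normalization. The sketch below uses a hypothetical helper, <code>bayes_update</code>, and a toy three-point prior whose numbers are purely illustrative and are not the ones used in this post.</p>

```python
def bayes_update(prior, likelihood):
    """Return (posterior, evidence) for a discrete prior and a pointwise likelihood."""
    # Evidence P(h) = sum over x of P(h | x) * P(x).
    evidence = sum(p * l for p, l in zip(prior, likelihood))
    # Posterior P(x | h) = P(x) * P(h | x) / P(h).
    posterior = [p * l / evidence for p, l in zip(prior, likelihood)]
    return posterior, evidence

# Toy example with made-up numbers.
prior = [0.5, 0.3, 0.2]
likelihood = [0.1, 0.2, 0.4]
posterior, evidence = bayes_update(prior, likelihood)
```

<p>Points whose likelihood is above the evidence gain probability, and points whose likelihood is below it lose probability; the posterior still sums to one.</p>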


<p><img src="https://i.imgur.com/cy3cTPH.png" alt="posterior" /></p>


<p><img src="https://i.imgur.com/OFhGen3.png" alt="posterior -- zoomed in" /></p>


<h3>Getting a few indicators</h3>


<p>So, for example, the probability assigned to a value of 0 moves from 40% to 39.788…%.</p>
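<p>This figure can be checked by hand from numbers already given. The sketch below assumes that at \( x = 0 \) the estimate \( h = 0.332 \) counts as “far away”, so that only the “research went wrong” branch contributes to \( P(h | x = 0) \); the variable names are illustrative.</p>

```python
N = 50_000            # number of grid values, from the text
p_wrong = 0.5         # prior P(W), from the text
p_h = 1.005309e-5     # P(h), from the text
prior_at_zero = 0.40  # prior mass on x = 0, from the text

# Assumption: at x = 0, h is more than one order of magnitude away,
# so P(h | x = 0) reduces to P(W) * 1/N.
lik_at_zero = p_wrong * (1 / N)
posterior_at_zero = prior_at_zero * lik_at_zero / p_h
print(f"{posterior_at_zero:.4%}")
```

<p>Under that assumption the posterior mass at zero comes out at roughly 39.79%, in line with the figure above.</p>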


<p>We can also calculate our prior and posterior average relative values:</p>


<p>$$ \text{Prior expected relative value} = \sum x \cdot P(x) \approx 0.00172 $$
$$ \text{Posterior expected relative value} = \sum x \cdot P(x | h) \approx 0.00196 $$</p>


<p>We can also calculate the posterior probability that RP’s analysis is wrong. But wrong in what sense? Well, wrong in the sense of incorrectly describing my, Nuño’s, values. But it may well be correct in terms of the tradition and philosophical assumptions that RP is working with, e.g., some radical anti-speciesist hedonism that I don’t share.</p>


<p>So anyway, we can calculate:</p>


<p>$$ P(W | h) = \sum P(x) \cdot P(W | hx) $$</p>


<p>And we can calculate \( P(W|hx) \) from Bayes:</p>


<p>$$ P(W|hx) = P(W) \cdot \frac{P(h|Wx)}{P(h)} $$</p>


<p>But we have all the factors: \( P(W) = 0.5 \), \( P(h | Wx) = \frac{1}{50000}\) and \( P(h) = 1.005309 \cdot 10^{-5} \). Note that these factors are constant, so we don’t have to actually calculate the sum.</p>


<p>In any case, it turns out that</p>


<p>$$ P(W|h) = 0.5 \cdot \frac{\frac{1}{50000}}{1.005309 \cdot 10^{-5}} = 0.9947\ldots \approx 99.5\% $$</p>
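<p>As a cross-check, the same number can be reached without the sum over \( x \), by applying Bayes' theorem to \( W \) directly. This is a sketch that assumes, as the decomposition of \( P(h|x) \) earlier implicitly does, that \( W \) is independent of \( x \):</p>

<p>$$ P(W|h) = \frac{P(W) \cdot P(h|W)}{P(h)}, \qquad P(h|W) = \sum_x P(x) \cdot P(h|xW) = \frac{1}{50000} \cdot \sum_x P(x) = \frac{1}{50000} $$</p>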


<p>Note that I am in fact abusing RP’s estimates, because they are welfare ranges, not relative values. So it <em>should</em> pop out that they are wrong, because I didn’t go to the trouble of interpreting them correctly.</p>


<p>In any case, according to the initial prior I constructed, I end up fairly confident that RP’s estimate doesn’t correctly capture my values. One downside here is that I constructed a fairly confident prior. Am I really 97.5% confident (the left tail of a 95% confidence interval) that the moment-to-moment value of a chicken is below 1% of the moment-to-moment value of a human, according to my values? Well, yes. But if I wasn’t, maybe I could mix my initial lognormal prior with a uniform distribution.</p>
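<p>Mixing a confident prior with a uniform distribution could look roughly like the following. The helper name <code>mix_with_uniform</code>, the 10% weight and the toy four-point prior are all assumptions chosen for illustration, not values from this analysis.</p>

```python
def mix_with_uniform(prior, uniform_weight):
    """Blend a discrete prior with a uniform distribution over the same grid."""
    n = len(prior)
    return [(1 - uniform_weight) * p + uniform_weight / n for p in prior]

# Toy prior that is very confident in the first value (made-up numbers).
confident_prior = [0.97, 0.02, 0.01, 0.0]
hedged_prior = mix_with_uniform(confident_prior, uniform_weight=0.1)
```

<p>After mixing, every point keeps at least <code>uniform_weight / n</code> of probability, so no value is ruled out by the prior alone.</p>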


<h3>Takeaways</h3>


<p>So, in conclusion, I presented a Bayesian adjustment to RP’s estimates of the welfare of different animals. The three moving pieces are:</p>


<ul>
<li>My prior</li>
<li>My likelihood that RP is making a mistake in its analysis</li>
<li>RP’s estimate</li>
</ul>


<p>In its writeup, RP writes:</p>


<blockquote><p>“I don’t share this project’s assumptions. Can’t I just ignore the results?”</p>


<p>We don’t think so. First, if unitarianism is false, then it would be reasonable to discount our estimates by some factor or other. However, the alternative—hierarchicalism, according to which some kinds of welfare matter more than others or some individuals’ welfare matters more than others’ welfare—is very hard to defend. (To see this, consider the many reviews of the most systematic defense of hierarchicalism, which identify deep problems with the proposal.)</p>


<p>Second, and as we’ve argued, rejecting hedonism might lead you to reduce our non-human animal estimates by ~⅔, but not by much more than that. This is because positively and negatively valenced experiences are very important even on most non-hedonist theories of welfare.</p>


<p>Relatedly, even if you reject both unitarianism and hedonism, our estimates would still serve as a baseline. A version of the Moral Weight Project with different philosophical assumptions would build on the methodology developed and implemented here—not start from scratch.</p></blockquote>


<p>So my main takeaway is that that section is mostly wrong: you can totally ignore these results if you either:</p>


<ul>
<li>have a strong prior that you don’t care about certain animals</li>
<li>assign a pretty high probability that RP’s analysis has gone wrong at some point</li>
</ul>


<p>But to leave on a positive note, I see making a Bayesian adjustment like the above as a necessary final step, but one that is very generic and that doesn’t require the deep expertise and time-intensive effort that RP has been putting into its welfare estimates. So RP has still been producing some very informative estimates that I hope will influence decisions on this topic.</p>


<h3>Future steps</h3>


<p>I imagine that at some point in its work, RP will post numerical estimates of human vs animal values, of which their welfare ranges are but a component. If so, I’ll probably redo this analysis with those factors.</p>


<p>Besides that, it would also be neat to fit RP’s 90% confidence interval to a distribution, and update on that distribution, not only on their point estimate.</p>
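<p>One way this fit might go, sketched under the assumption that a lognormal is a reasonable shape for the estimate: match the 5th and 95th percentiles of the lognormal to the endpoints of the 90% interval. The function name and the interval endpoints below are hypothetical placeholders, not RP’s numbers.</p>

```python
from math import exp, log
from statistics import NormalDist

def lognormal_from_90ci(low, high):
    """Return (mu, sigma) of the lognormal whose 5th/95th percentiles are low/high."""
    z = NormalDist().inv_cdf(0.95)  # about 1.645
    mu = (log(low) + log(high)) / 2
    sigma = (log(high) - log(low)) / (2 * z)
    return mu, sigma

# Hypothetical interval endpoints, for illustration only.
mu, sigma = lognormal_from_90ci(0.01, 1.0)
fitted = NormalDist(mu, sigma)  # distribution of log(value)
p05, p95 = exp(fitted.inv_cdf(0.05)), exp(fitted.inv_cdf(0.95))
```

<p>The fitted distribution could then play the role that the point estimate \( h \) plays above, with the likelihood spread over the values it assigns weight to.</p>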


<h3>Acknowledgements</h3>


<p>Thanks to Rethink Priorities for publishing their estimates so that I and others can play around with them. Generally, I’m able to do research on account of being employed by the <a href="https://quantifieduncertainty.org/">Quantified Uncertainty Research Institute</a>, which, however, wasn’t particularly involved in these estimates.</p>


<p>
<section id='isso-thread'>
<noscript>Javascript needs to be activated to view comments.</noscript>
</section>
</p>
